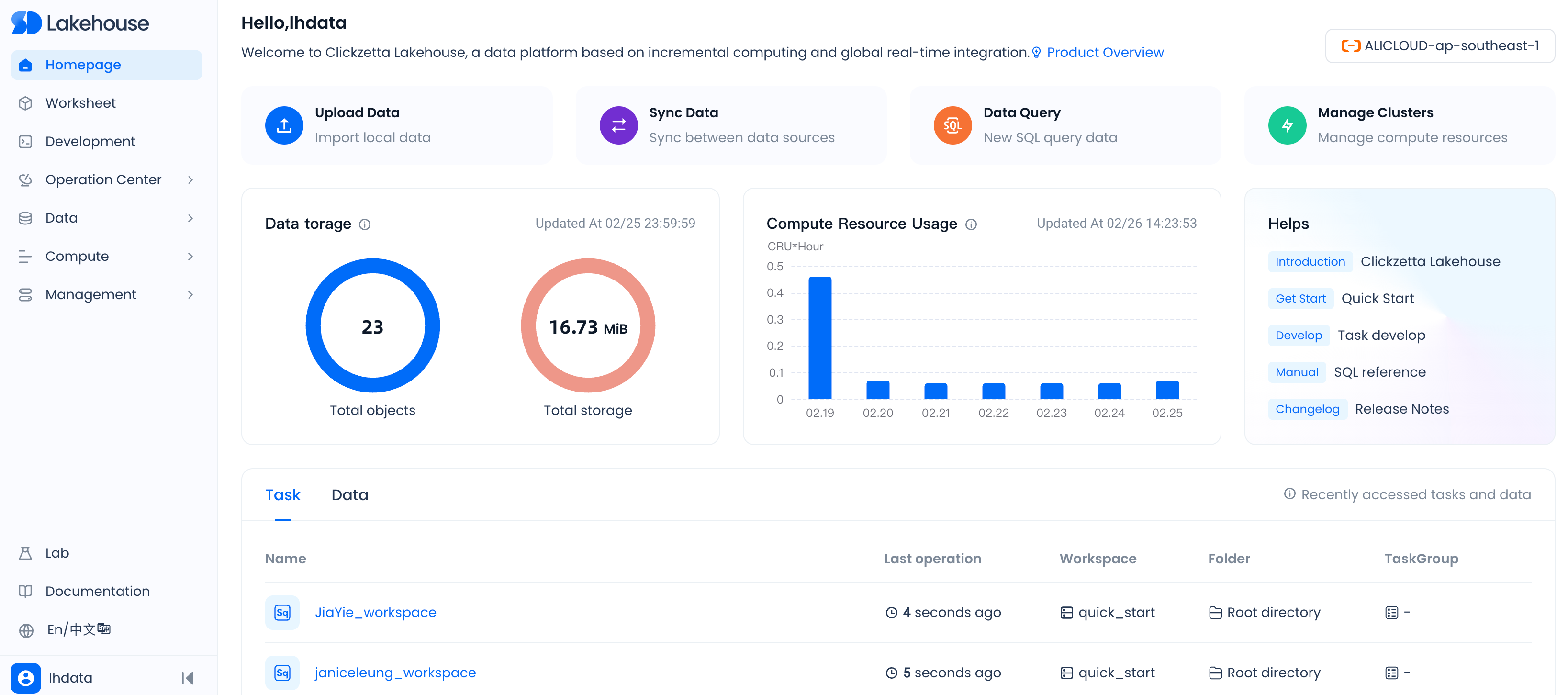

Lakehouse Studio Quick Tour

In Lakehouse Studio, you can perform data analysis and engineering tasks; monitor query, data loading and synchronization, data transformation, and workflow activity; explore your Singdata Lakehouse objects; and administer your Singdata Lakehouse, including managing costs and adding users and roles.

Singdata Lakehouse Studio lets you do the following:

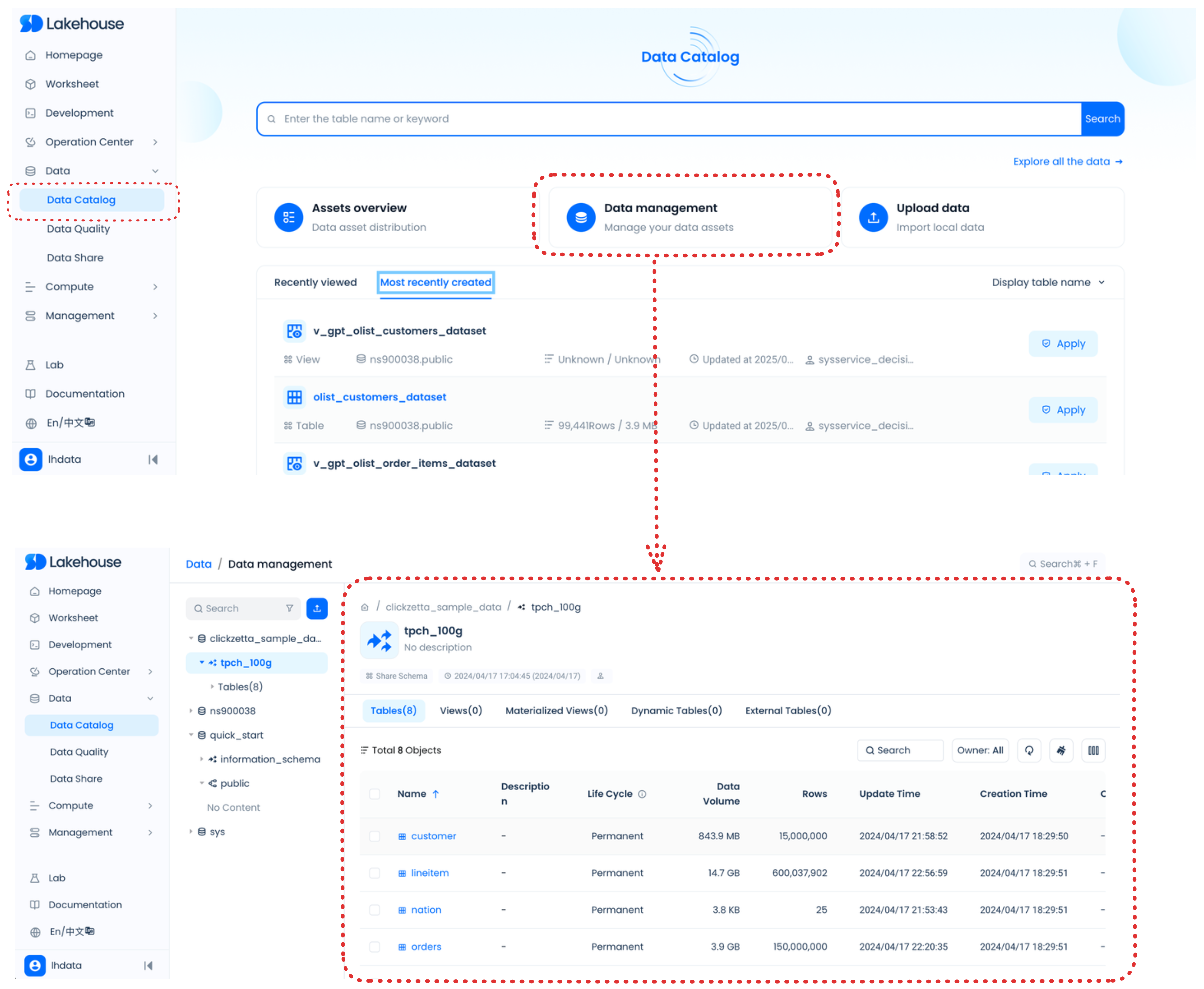

- Data Management: Create and manage data lake/database objects such as databases, tables, dynamic tables, and more.

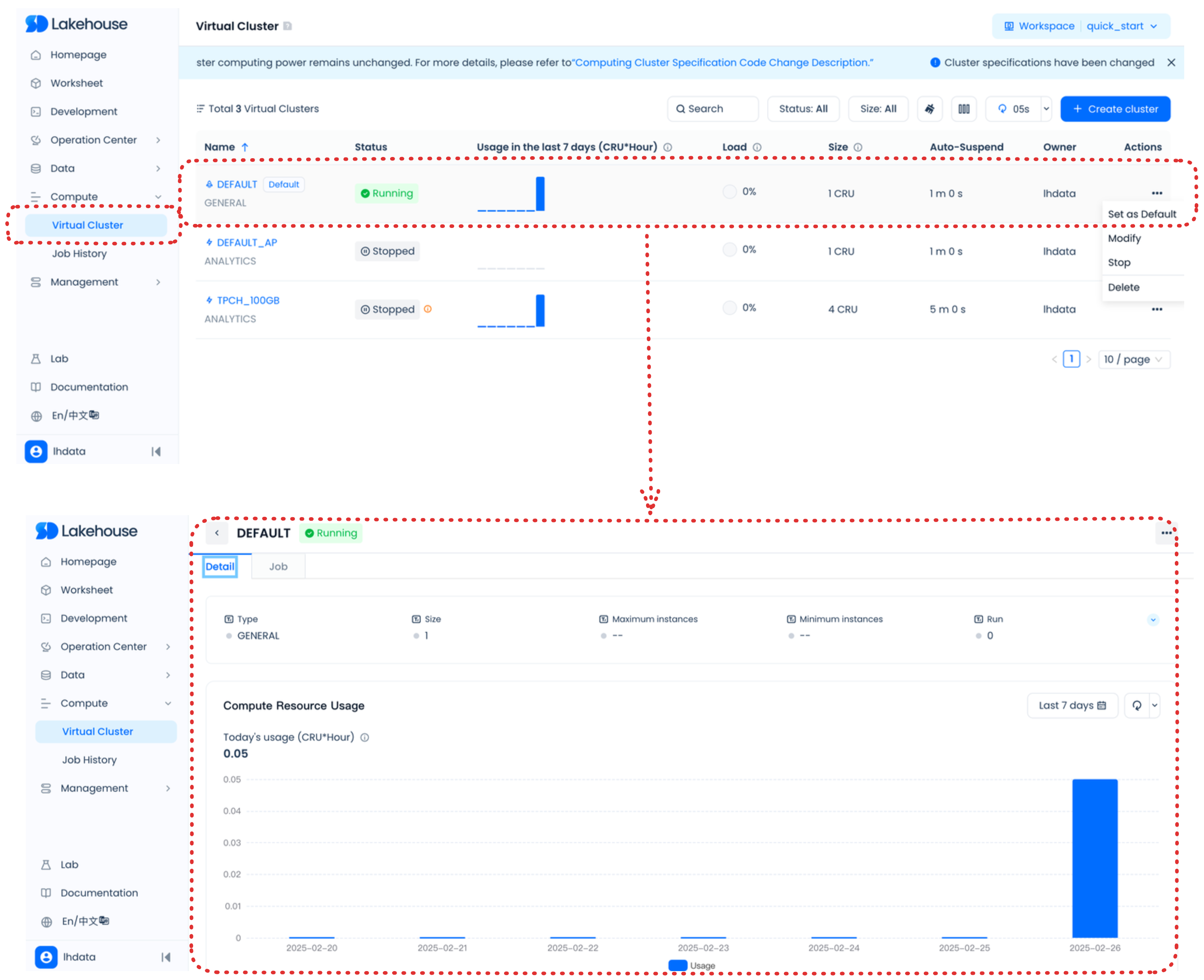

- Computing Resource Management: Create and manage computing resources such as virtual clusters, and review job history.

- ELT Pipeline Development and Management: Create and manage ELT pipeline objects such as data sources, pipeline definitions, tasks (extract tasks, transform tasks, etc.), workflows, alerts, and more. The following task types and features are supported:

- Data Synchronization Task: Ingest data from data sources such as databases, data warehouses, and data lakes into Singdata Lakehouse, and export data from Singdata Lakehouse to other data sources.

- SQL Task: Write SQL queries and code to ingest, discover, clean, and transform data, and take advantage of autocomplete for database objects and SQL functions in worksheets.

- Python Task: Build, test, and deploy SQLAlchemy/Zettapark Python worksheets.

- JDBC Task: Build, test, and deploy JDBC worksheets, which operate on data in data sources over a JDBC connection.

- Organize Tasks: Organize worksheets into task folders and task groups.

- Share Job Profile: Share job profiles with other users.

- Data Profile Exploration: Visualize SQL worksheet results as a data profile exploration.

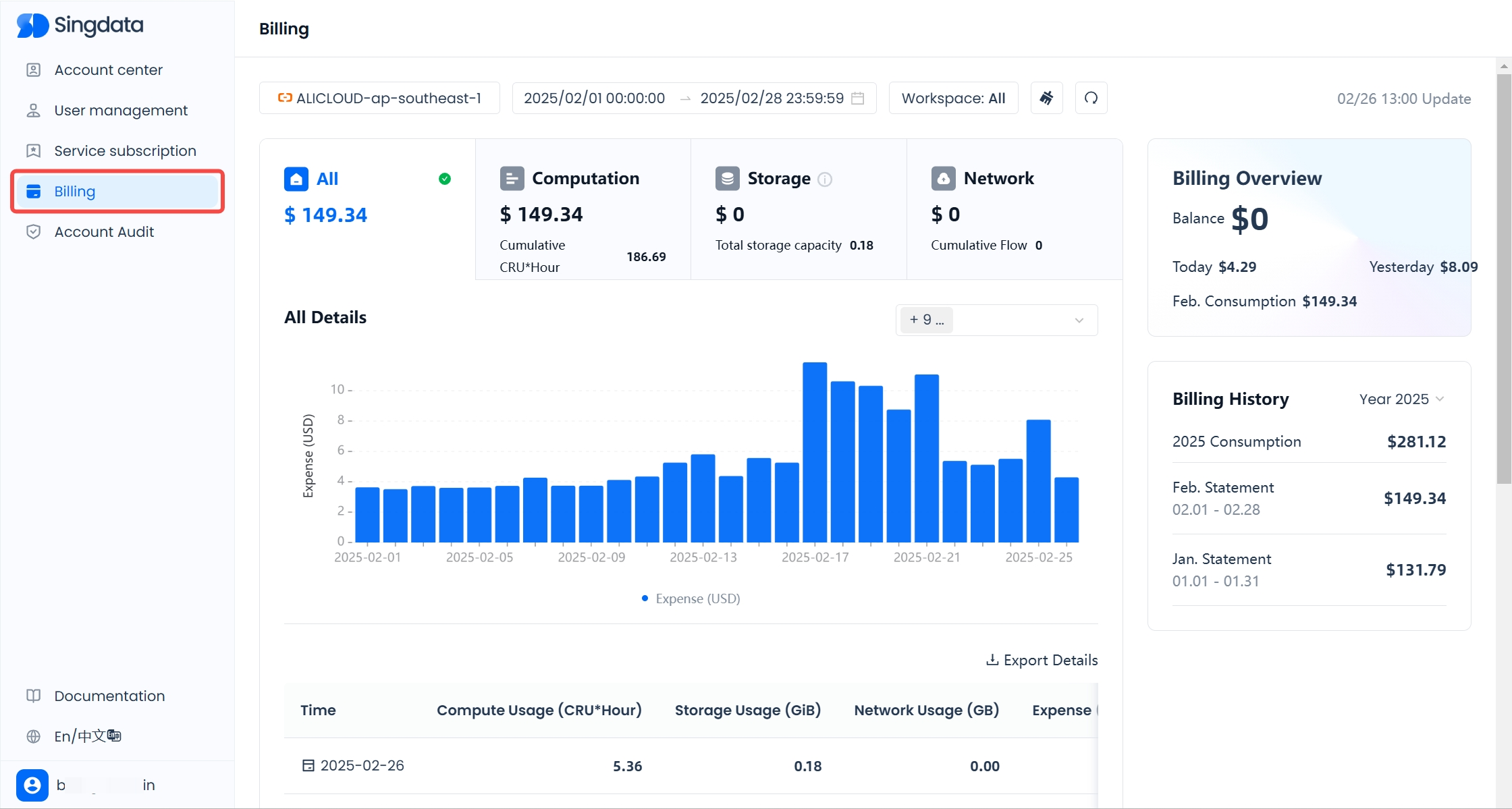

- Manage usage and costs.

- Job History: Review query history and data loading history.

- Workflow DAG: See workflow graphs and run history.

- Data Backfilling: Debug and rerun task graphs.

- Monitor Dynamic Tables: Monitor dynamic table graphs and refreshes.

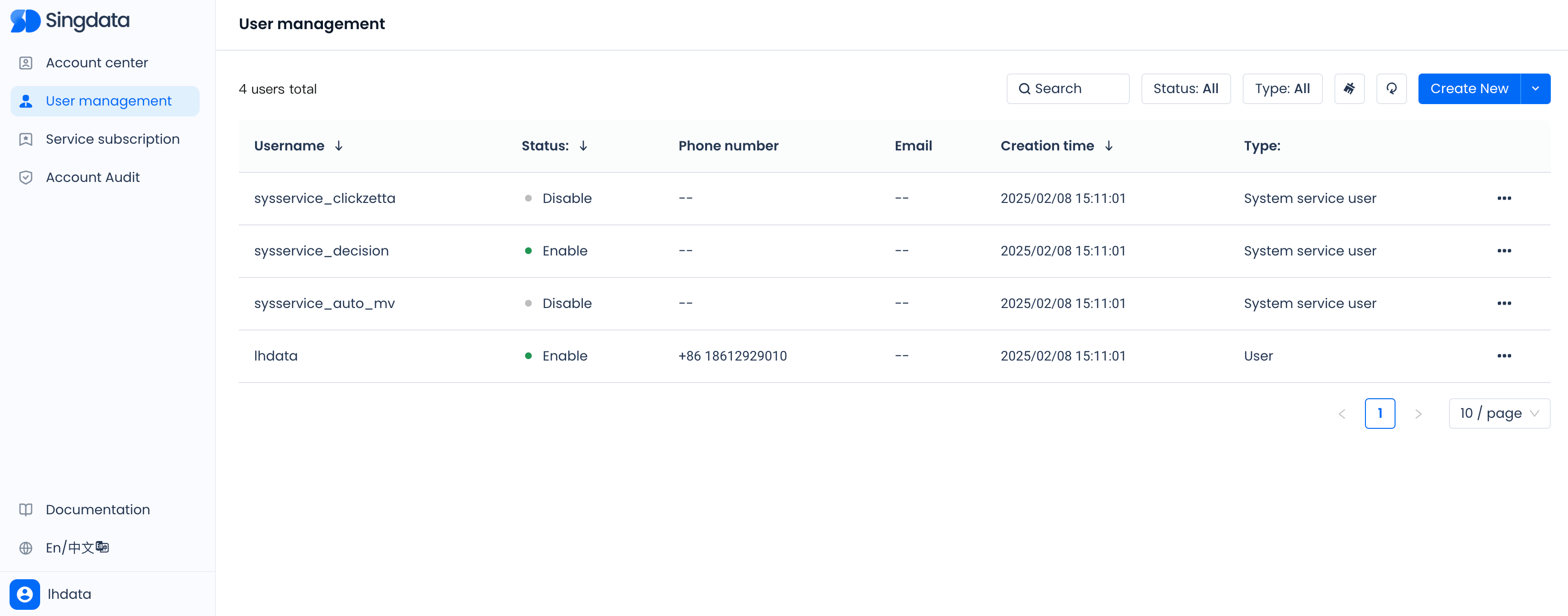

- User/Role Management: Create and manage Singdata Lakehouse users and roles.

- Data Quality Management: Scrub, refine, and enhance large data sets, increasing their value density to better meet business objectives.

For more information about these and other tasks that you can perform, see Singdata Lakehouse Studio: The Singdata Lakehouse web interface.

Explore and manage your lakehouse objects

You can explore and manage your data lake/database objects in Singdata Lakehouse Studio as follows:

- Explore data lakes, databases, and their objects, including tables, views, and more, using the data object explorer.

- Create objects like schemas, tables, and more.

- Search within the object explorer to browse database objects across your account.

- Preview the contents of database objects like tables, and view the files uploaded to a volume.

- Load files to an existing table, or create a table from a file so that you can start working with data in Singdata Lakehouse faster.

To learn more, see:

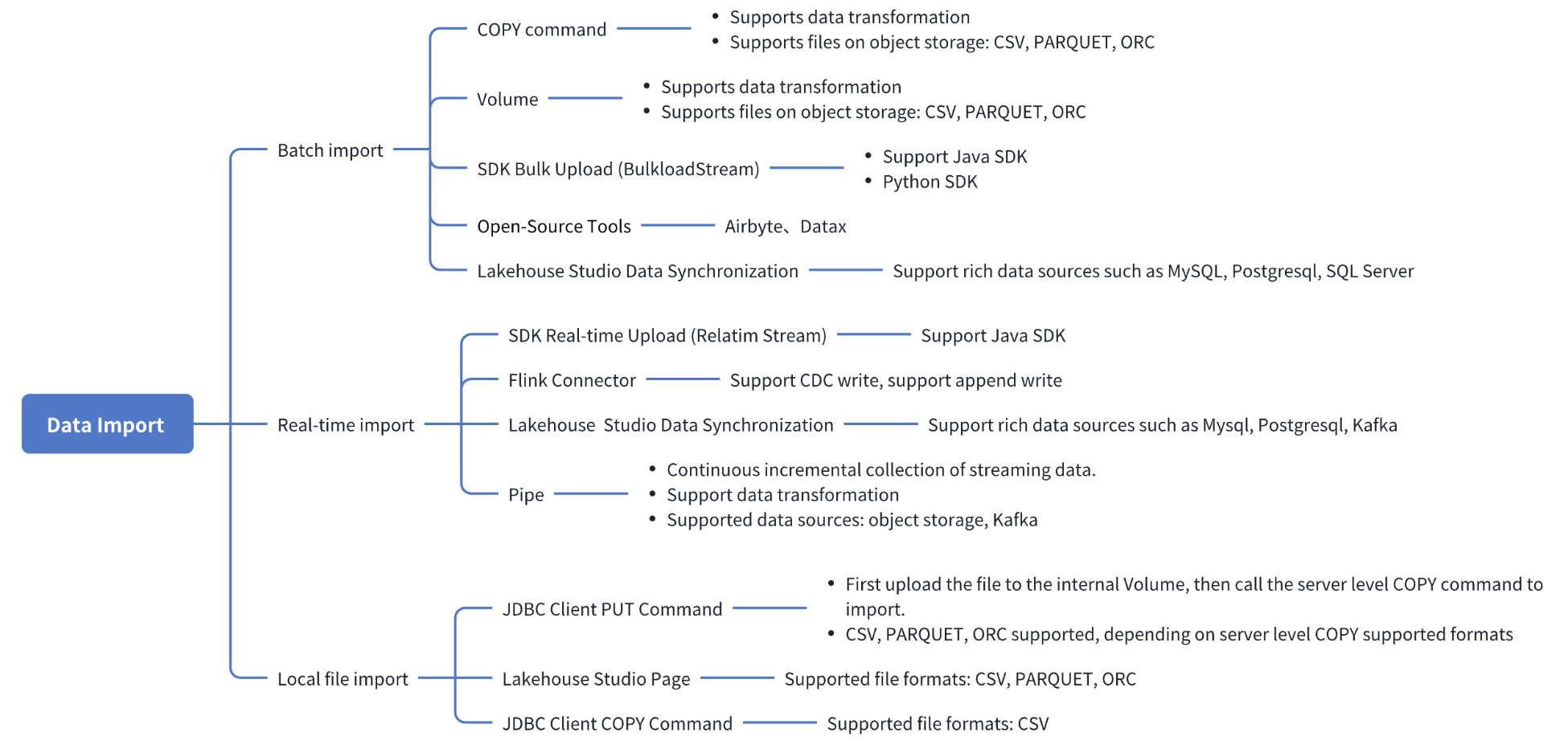

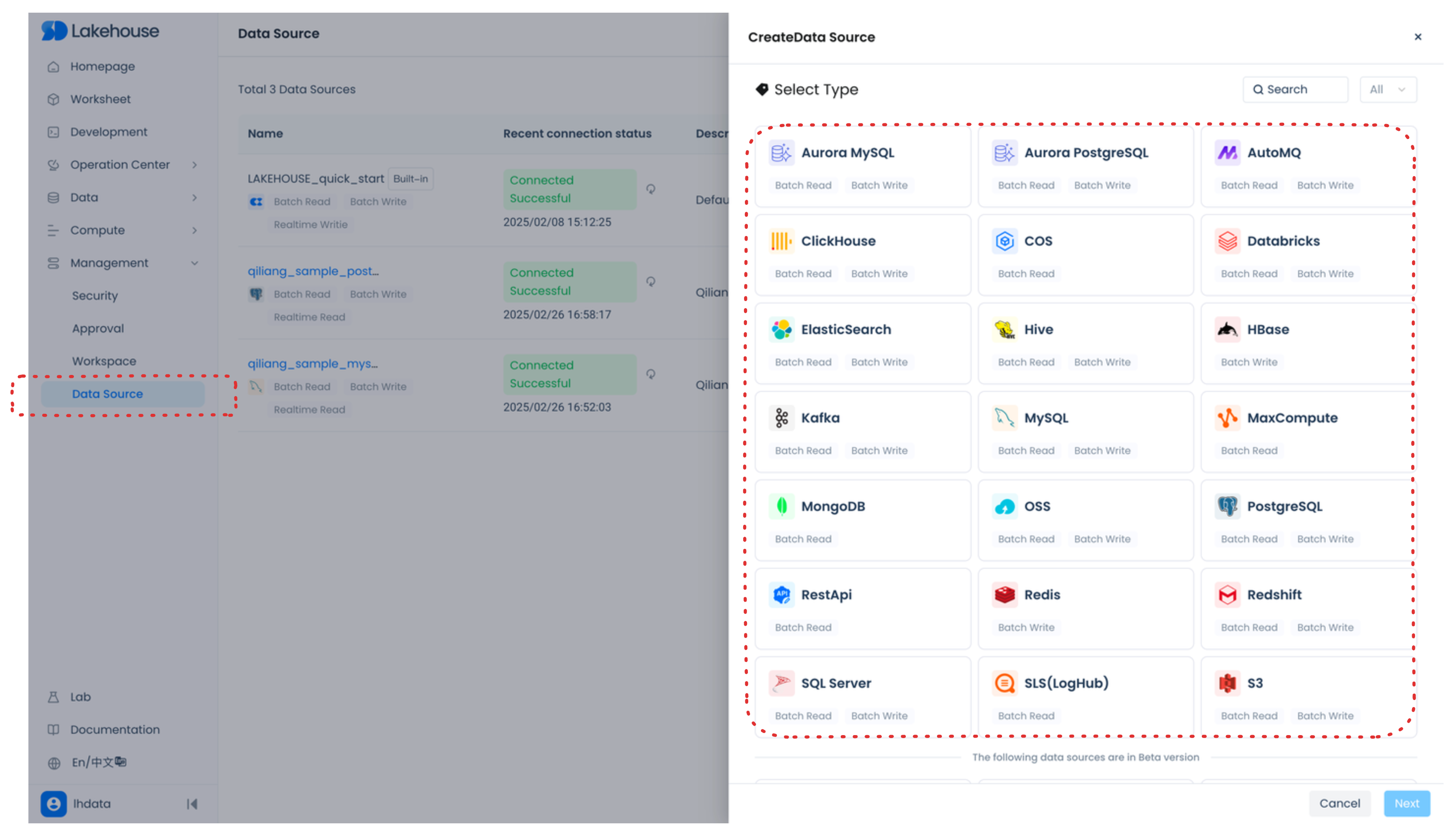

Data Synchronization Tasks

Data synchronization is a data integration feature built into Singdata Lakehouse that moves data between many kinds of sources. You can automate synchronization tasks with the built-in scheduling system. With it, you can import data into the Lakehouse, export refined data, or synchronize data across different sources without writing code. Each task is configured through a step-by-step wizard.

There are various methods to load data into Lakehouse, depending on the data source, the data format, and whether the load is batch, streaming, or data transfer. Lakehouse provides the following import methods: user SDK import, SQL command import, client-side data upload, third-party open-source tools, and the Lakehouse Studio visual interface.

Import Methods Overview

Data Source Supported

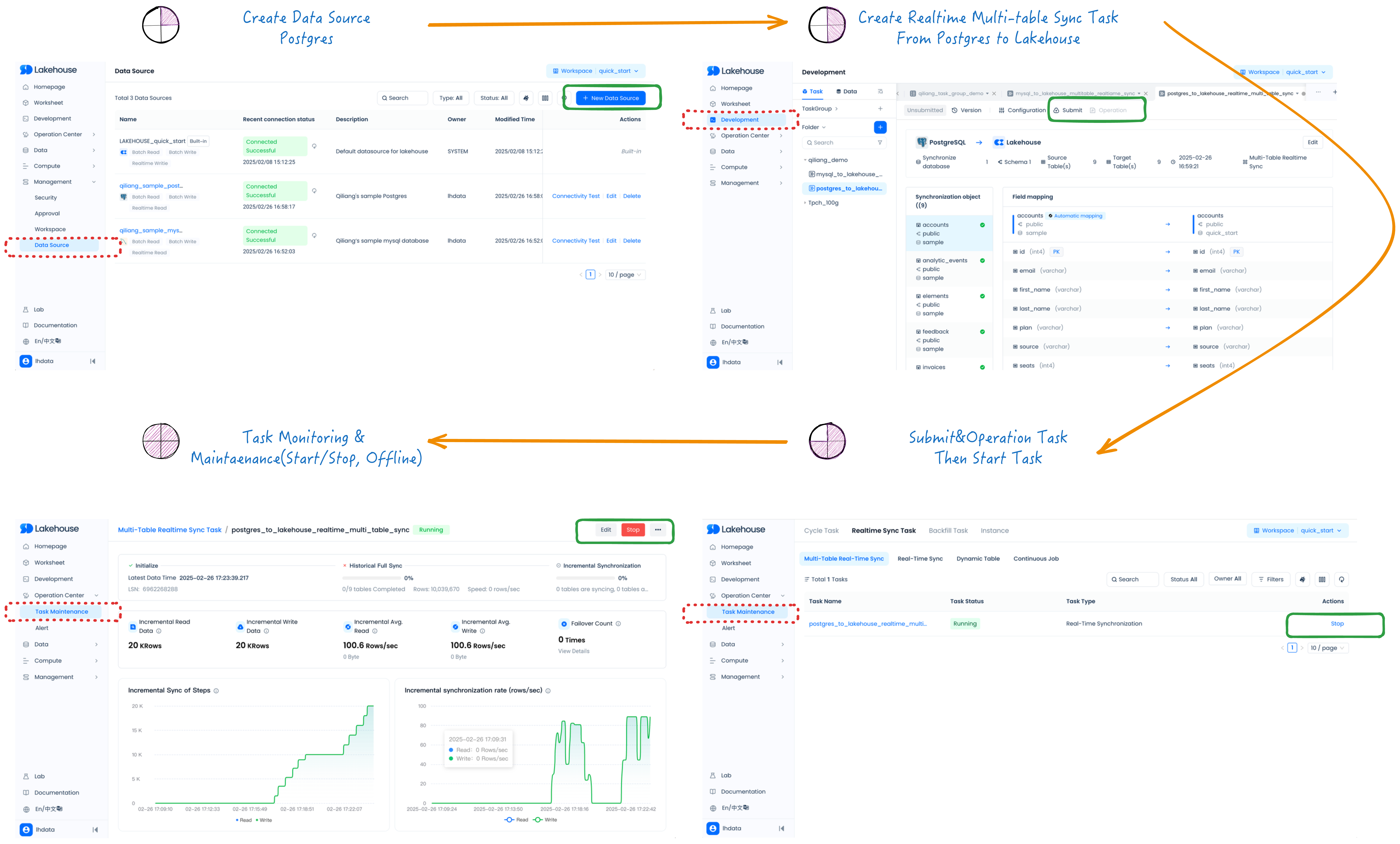

Realtime Multi-table Data Synchronization Tasks (CDC)

- Create a data source (Postgres).

- Create a realtime multi-table sync task from Postgres to Lakehouse.

- Submit the task to operations, then start it.

- Monitor and maintain the task (start/stop, take offline).
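As background for the steps above, change data capture generally means replaying a stream of insert, update, and delete events from the source against the target. The following is a minimal, generic sketch of that idea in Python. It does not reflect how Lakehouse Studio implements CDC internally; the event shape (`op`, `key`, `row`) and the in-memory `target` dictionary are assumptions made purely for illustration.

```python
def apply_change(table: dict, event: dict) -> None:
    """Apply one change event to an in-memory table keyed by primary key.

    The event format here is hypothetical: {"op": ..., "key": ..., "row": ...}.
    """
    op, key = event["op"], event["key"]
    if op in ("insert", "update"):
        # Inserts and updates both write the latest row image (an upsert).
        table[key] = event["row"]
    elif op == "delete":
        # Deleting a key that is already absent is treated as a no-op.
        table.pop(key, None)
    else:
        raise ValueError(f"unknown operation: {op!r}")


# A hypothetical stream of changes captured from a source table.
events = [
    {"op": "insert", "key": 1, "row": {"name": "Ada"}},
    {"op": "insert", "key": 2, "row": {"name": "Bob"}},
    {"op": "update", "key": 1, "row": {"name": "Ada L."}},
    {"op": "delete", "key": 2},
]

target: dict = {}
for event in events:
    apply_change(target, event)

print(target)
```

Because each event carries the full row image and deletes tolerate missing keys, replaying the same stream twice leaves the target unchanged, which is a common design goal for sync tasks that may restart.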

For more details, see:

- Data Source Support

- Data Source Management

- Batch Data Sync Tasks

- Multi-table Realtime CDC (Change Data Capture) Sync Tasks

- Realtime Data Sync from Kafka/AutoMQ

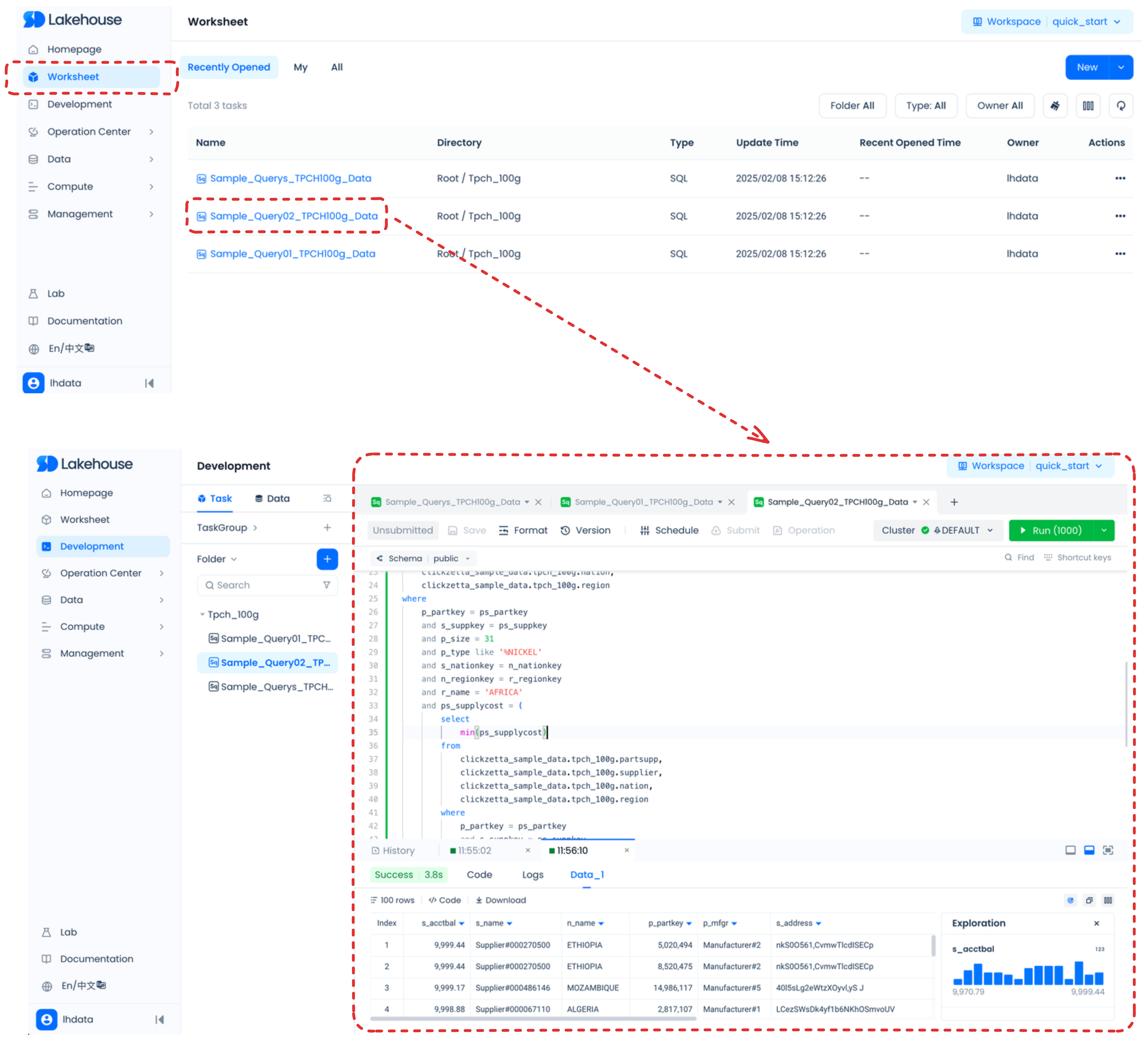

Write SQL and SQLAlchemy/Zettapark Python code in worksheets and orchestrate workflows

Worksheets provide a simple way for you to write SQL jobs (DML and DDL), see the results, and schedule them as tasks. With a worksheet, you can do any of the following:

- Run ad hoc queries and other DDL/DML operations.

- Write SQLAlchemy/Zettapark Python code in a Python worksheet.

- Review the query history and results of queries that you executed.

- Examine multiple worksheets, each with its own separate session.

- Export the results for a selected statement, while results are still available.

- Submit jobs and schedule them as tasks.

If you select Worksheets in the navigation menu, you see a list of worksheets, and you can select one to view the worksheet contents and update the worksheet.

For more details, see:

- Lakehouse Studio Task Development Overview

- Workflow Orchestration with Lakehouse Studio Task Development and Scheduling

- Lakehouse Studio Task Group

- Writing Python Code in Python Worksheets

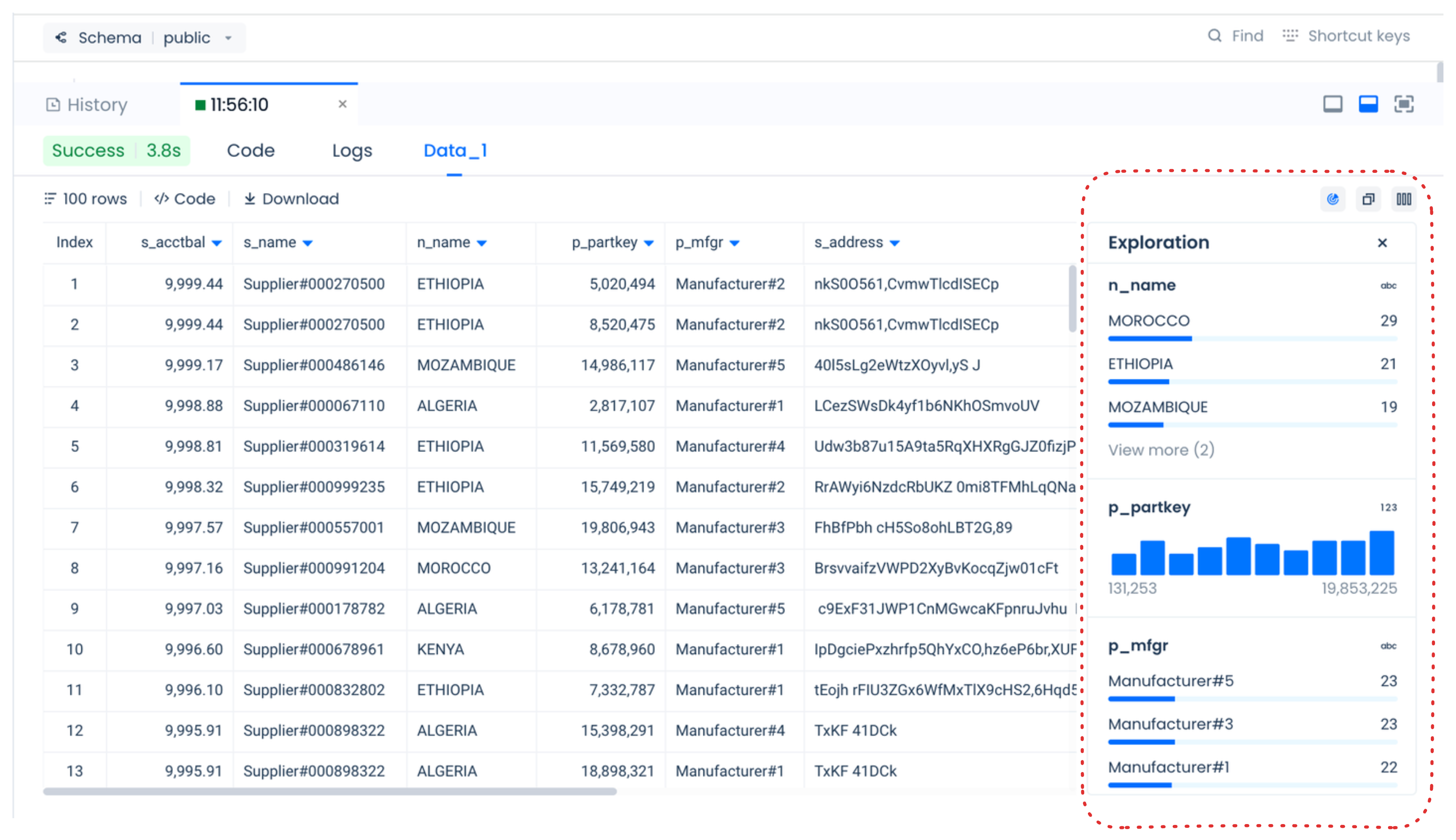

Visualize query results as data profile exploration

When you run a query in Singdata Lakehouse Studio, you can choose to view the results as a data profile exploration.

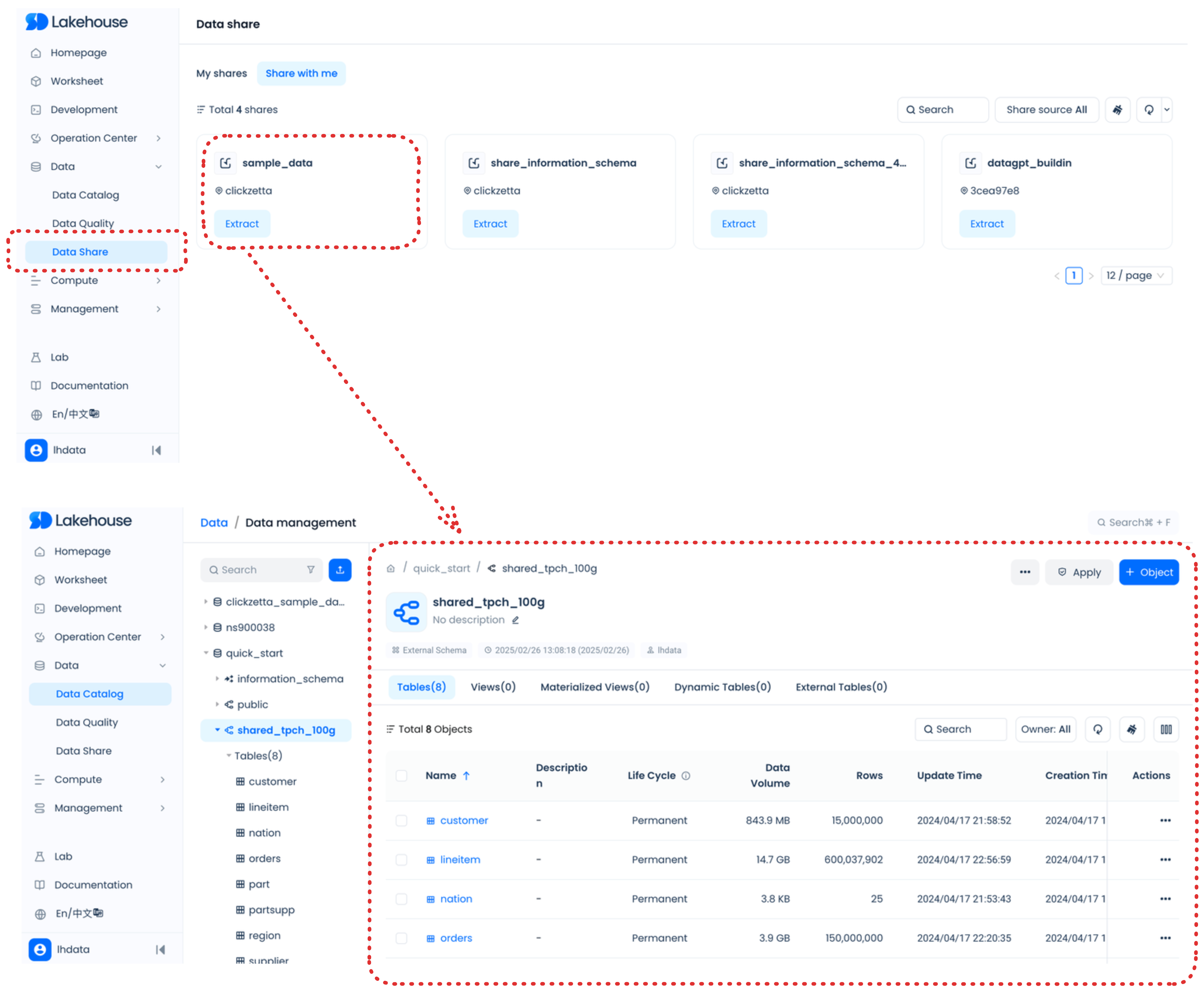

Share data

Collaborate with users in other Singdata Lakehouse accounts by sharing data with them. When you share data with a listing, you can use auto-fulfillment to provide your data in the same Singdata regions.

As a consumer of data, you can access datasets shared with your account, helping you derive real-time data insights without needing to set up a data pipeline or write any code.

For more details, see:

Monitor activity in Singdata Lakehouse Studio

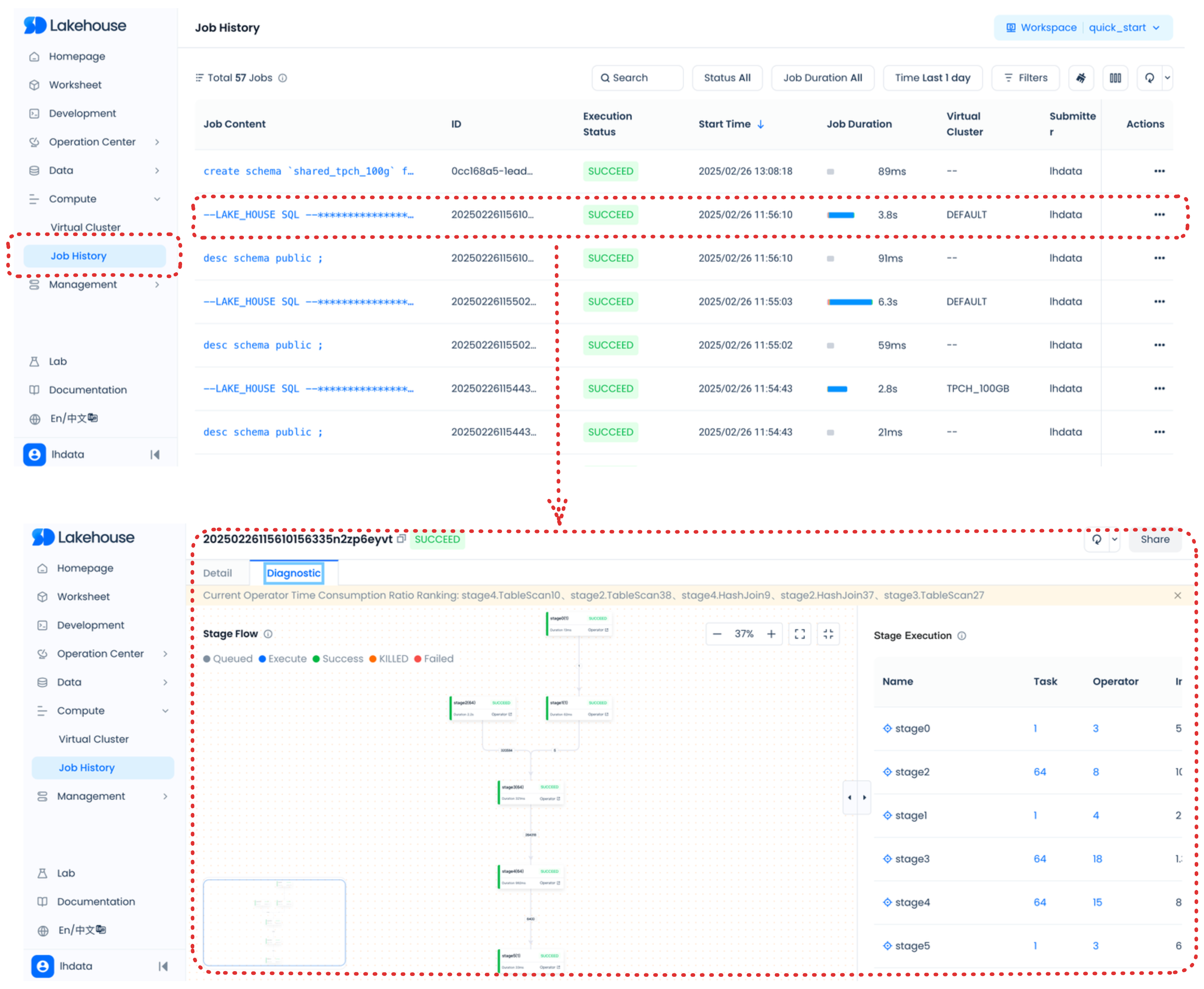

Monitor query activity with Query History

You can monitor and view query details, explore the performance of executed queries, monitor data loading status and errors, review task graphs, and debug and re-run them as needed. You can also monitor the refresh state of your Dynamic Tables and review the various tagging and security policies that you create to maintain data governance.

For more information, see:

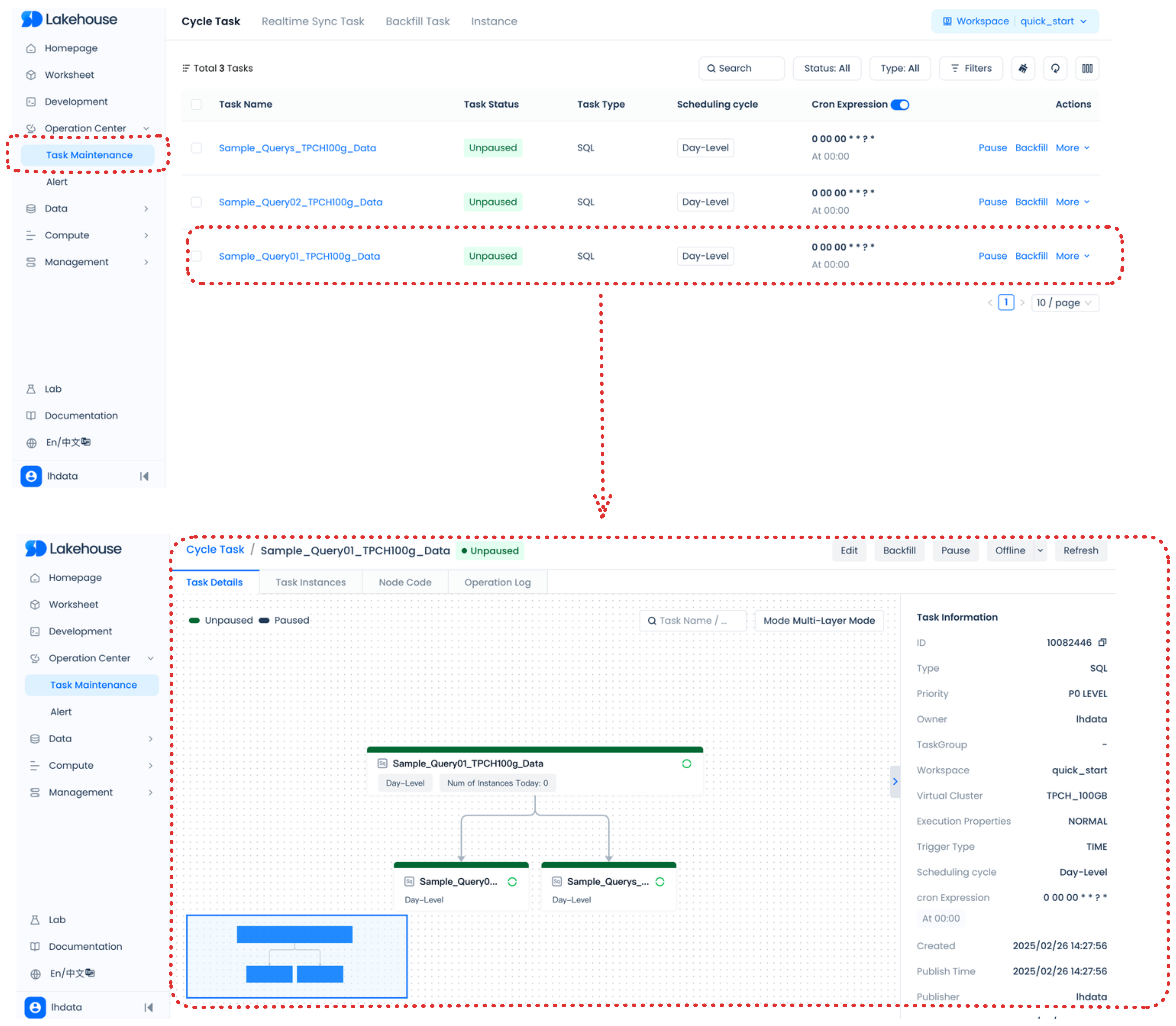

Monitor tasks of workflow with Operations Center

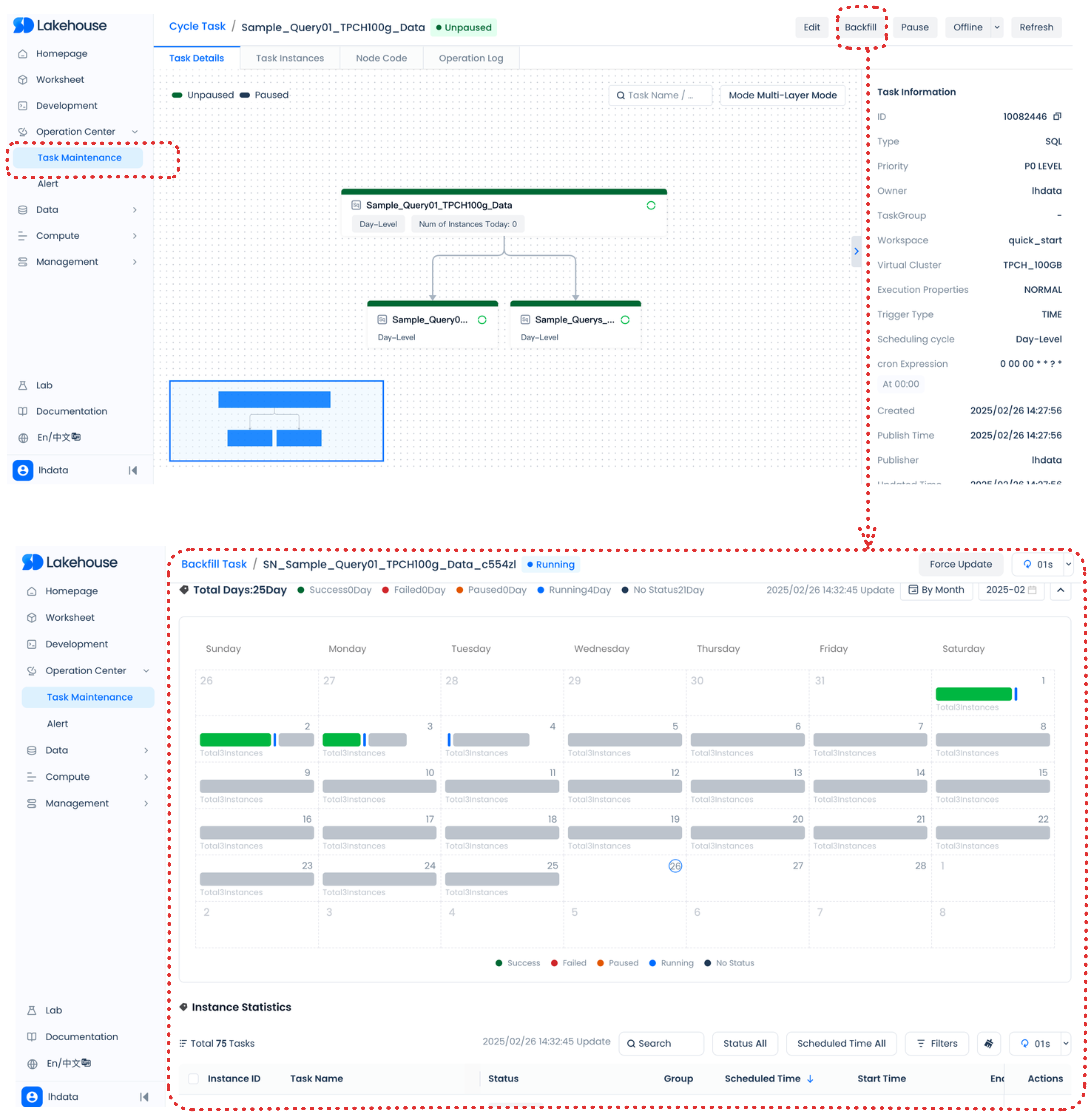

The Operations Center provides centralized management operations for tasks and instances. The workflow tasks it manages include cycle tasks for ELT, realtime sync tasks for data ingestion, data backfilling tasks, and task instances. These cover both manually triggered tasks and periodically scheduled tasks, along with their corresponding instances.

Data backfilling involves supplementing historical or future data over a specific timeframe and writing it to the relevant time partitions. If scheduling parameters are included in the code, they will be automatically populated with the appropriate values based on the selected business time for the data backfill. This, in conjunction with the business logic, ensures that the data for the corresponding time period is written to the designated partition. The partition that is written to and the code logic that is executed are determined by the task definitions within the code.
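The parameter substitution described above can be sketched as follows. This is a minimal illustration, not the Lakehouse Studio implementation: the placeholder name `${bizdate}`, the table names, and the SQL text are all hypothetical, and the real scheduler may use a different parameter syntax. The sketch shows one statement being rendered per business date in the backfill range, so each run targets its own time partition.

```python
from datetime import date, timedelta

# Hypothetical task code. "${bizdate}" is an assumed placeholder name,
# and the tables are invented for this example.
TASK_SQL = (
    "INSERT INTO daily_sales "
    "SELECT order_id, amount, '${bizdate}' AS dt "
    "FROM raw_sales WHERE sale_date = '${bizdate}'"
)


def business_dates(start: date, end: date):
    """Yield each business date from start to end, inclusive."""
    current = start
    while current <= end:
        yield current
        current += timedelta(days=1)


def render_backfill(sql_template: str, start: date, end: date) -> list[str]:
    """Return one rendered statement per business date in the range."""
    return [
        sql_template.replace("${bizdate}", day.isoformat())
        for day in business_dates(start, end)
    ]


statements = render_backfill(TASK_SQL, date(2024, 1, 1), date(2024, 1, 3))
for statement in statements:
    print(statement)
```

Each rendered statement filters on and writes a single date, which mirrors the point made above: the partition written is determined by the task code, while the backfill only supplies the business time.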

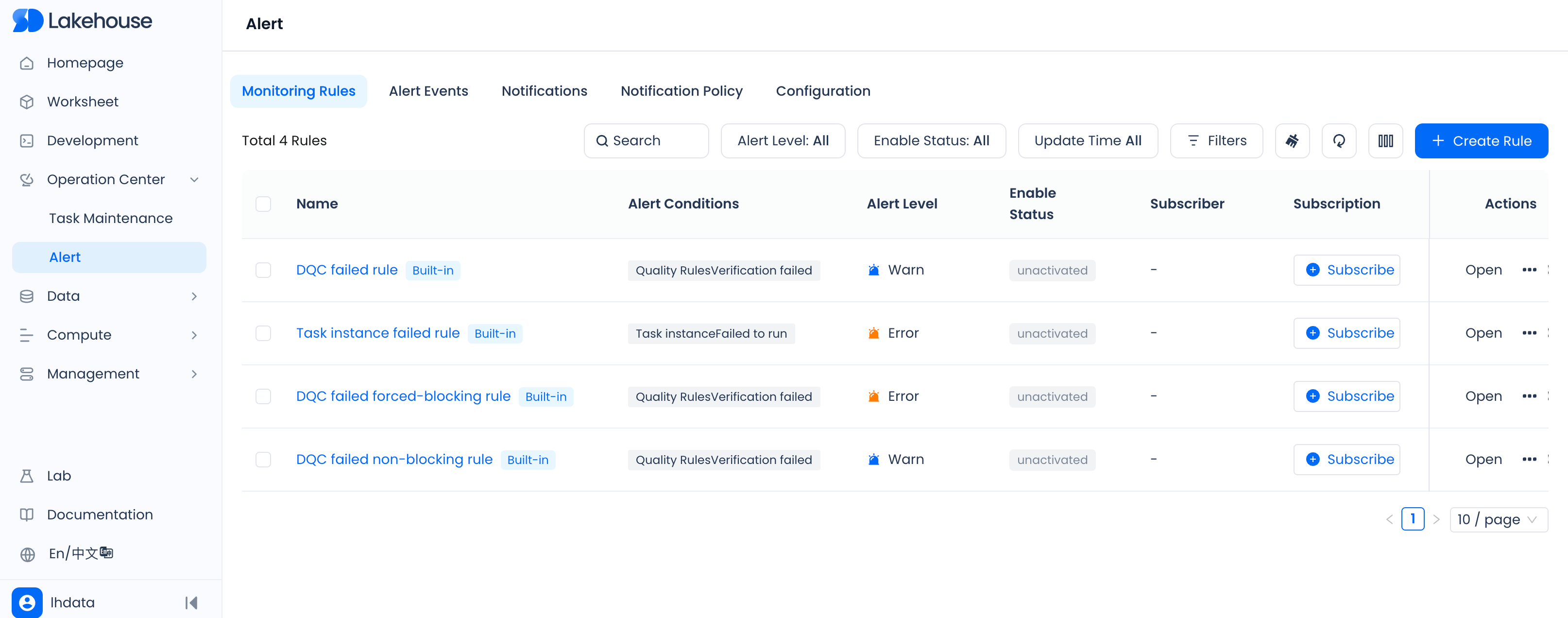

The monitoring feature lets you watch for abnormal events, such as an abnormal task execution status, using built-in rules or custom configuration settings, and it sends alert notifications as needed.
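Conceptually, rule-based monitoring evaluates each task instance against a set of rules and raises an alert for every match. The sketch below illustrates that pattern only. The `TaskInstance` fields, the status values, and the rule names are assumptions for this example and are not taken from the Lakehouse Studio rule catalog.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TaskInstance:
    task_name: str
    status: str            # assumed values: "SUCCESS", "FAILED", "RUNNING"
    runtime_seconds: int


@dataclass
class Rule:
    name: str
    matches: Callable[[TaskInstance], bool]


def evaluate(rules: list[Rule], instances: list[TaskInstance]) -> list[str]:
    """Return one alert message for every (instance, rule) pair that matches."""
    alerts = []
    for instance in instances:
        for rule in rules:
            if rule.matches(instance):
                alerts.append(f"[{rule.name}] {instance.task_name}")
    return alerts


# Hypothetical rules: one resembling a built-in check, one custom threshold.
rules = [
    Rule("task_failed", lambda i: i.status == "FAILED"),
    Rule("runtime_over_1h", lambda i: i.runtime_seconds > 3600),
]

instances = [
    TaskInstance("load_orders", "SUCCESS", 120),
    TaskInstance("sync_users", "FAILED", 30),
    TaskInstance("build_report", "RUNNING", 5400),
]

alerts = evaluate(rules, instances)
for alert in alerts:
    print(alert)
```

In a real deployment the alert strings would be handed to a notification channel; here they are only printed.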

For more information, see:

Perform Computing Resource Management and administer Singdata Lakehouse Studio

These pages let you understand Singdata Lakehouse data use, manage virtual clusters, monitor task queues in virtual clusters, manage users and roles, administer Singdata Lakehouse accounts, and more.

You can manage and monitor virtual clusters.

Access users and roles.

Perform usage cost management.

When you log in as a user that has the account administrator role, you can view your account’s usage records under the Billing feature in the Account Center (<your_accounts_name>.accounts.singdata.com/billing). You can also query detailed costs by SKU type, such as compute, storage, or network, or by specific SKU.
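If you export usage records for offline analysis, grouping costs by SKU type or by specific SKU is a simple aggregation. The following sketch assumes a hypothetical record layout (`sku_type`, `sku`, `cost`) and made-up values; the actual fields shown in the Billing feature may differ.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical usage records; field names and amounts are invented.
usage_records = [
    {"sku_type": "compute", "sku": "vc-small", "cost": Decimal("12.50")},
    {"sku_type": "compute", "sku": "vc-large", "cost": Decimal("40.00")},
    {"sku_type": "storage", "sku": "standard", "cost": Decimal("3.25")},
    {"sku_type": "network", "sku": "egress", "cost": Decimal("1.10")},
]


def cost_by(records: list[dict], key: str) -> dict:
    """Sum the cost of all records, grouped by the given field."""
    totals = defaultdict(Decimal)  # Decimal() starts each total at 0
    for record in records:
        totals[record[key]] += record["cost"]
    return dict(totals)


by_type = cost_by(usage_records, "sku_type")
by_sku = cost_by(usage_records, "sku")

for sku_type, total in by_type.items():
    print(sku_type, total)
```

`Decimal` is used instead of `float` so that currency amounts add up exactly.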

For more information, see:

Related videos

From Realtime Data Ingestion to Realtime Data Analytics with Singdata Lakehouse Studio.