Data Quality Management

In the era of big data, data quality management is key to ensuring the correctness, effectiveness, and consistency of data. Through data quality management, you can clean, process, and optimize massive amounts of data, increasing its value density so that it better serves business needs. The data quality module provides comprehensive monitoring and evaluation of data quality across six dimensions: completeness, uniqueness, consistency, accuracy, validity, and timeliness. With the data quality module, you can continuously improve and optimize data quality.

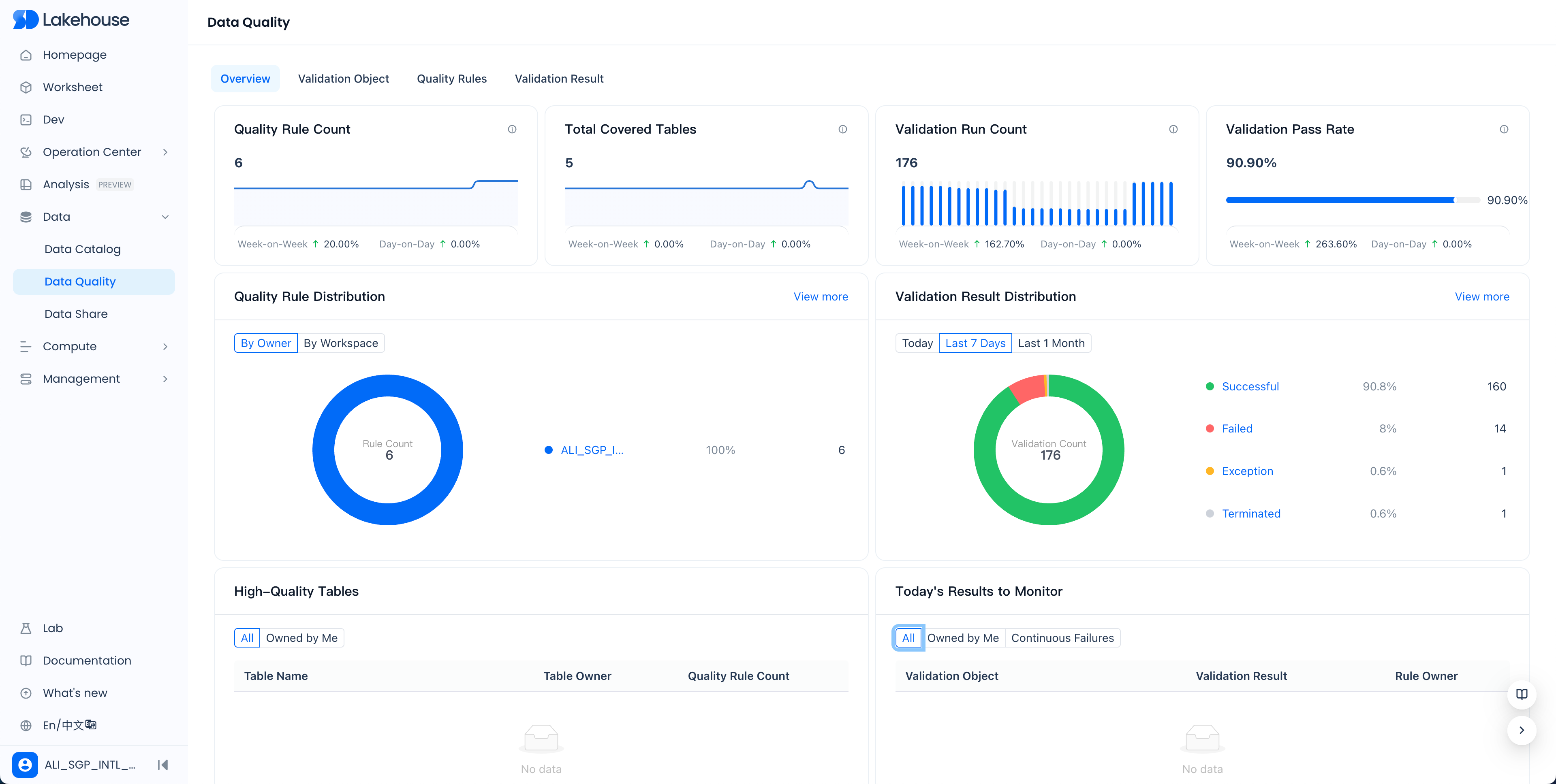

Overview

The data quality overview page provides an intuitive monitoring dashboard that shows the overall status of quality rules and verification runs. Some of the indicators are defined as follows:

- Number of Quality Rules: The total number of quality rules configured under all workspaces of the service instance, including rules that are not enabled

- Total Number of Covered Tables: The number of tables with configured quality rules under all workspaces of the service instance

- Number of Detection Runs: The number of quality rule runs in the past month

- Verification Pass Rate: The number of verifications that passed in the past month divided by the total number of verifications in the same period

- Distribution of Quality Rules: The distribution of the number of quality rules under all workspaces of the service instance, counted by person in charge or workspace

- Distribution of Verification Results: The distribution of the status of verification results of quality rules under all workspaces of the service instance

- High-Quality Tables: Tables with configured quality rules that have passed verification in the past 7 days

- Results Needing Attention Today: Quality rules that failed verification
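The indicator definitions above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not the module's implementation: the `VerificationRun` record, the function names, the 30-day reading of "past month", and the interpretation of a high-quality table as one whose runs in the window all passed are assumptions made for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class VerificationRun:
    """Hypothetical record of one quality rule run (not a product type)."""
    table: str
    passed: bool
    finished_at: datetime


def pass_rate(runs, now, window_days=30):
    """Passes divided by total verifications in the window.

    Assumes "past month" means the last 30 days. Returns None when
    there were no runs, to avoid dividing by zero.
    """
    cutoff = now - timedelta(days=window_days)
    recent = [r for r in runs if r.finished_at >= cutoff]
    if not recent:
        return None
    return sum(r.passed for r in recent) / len(recent)


def high_quality_tables(runs, now, window_days=7):
    """Tables whose runs in the window all passed (assumed interpretation)."""
    cutoff = now - timedelta(days=window_days)
    outcomes = {}
    for r in runs:
        if r.finished_at >= cutoff:
            outcomes.setdefault(r.table, []).append(r.passed)
    return {table for table, results in outcomes.items() if all(results)}
```

Returning `None` for an empty window keeps "no runs" distinct from a pass rate of zero, which a dashboard would likely want to display differently.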

Quality Rules

The quality rules page lists all the quality rules you have configured. Use the filtering area at the top to quickly find the rules you need.

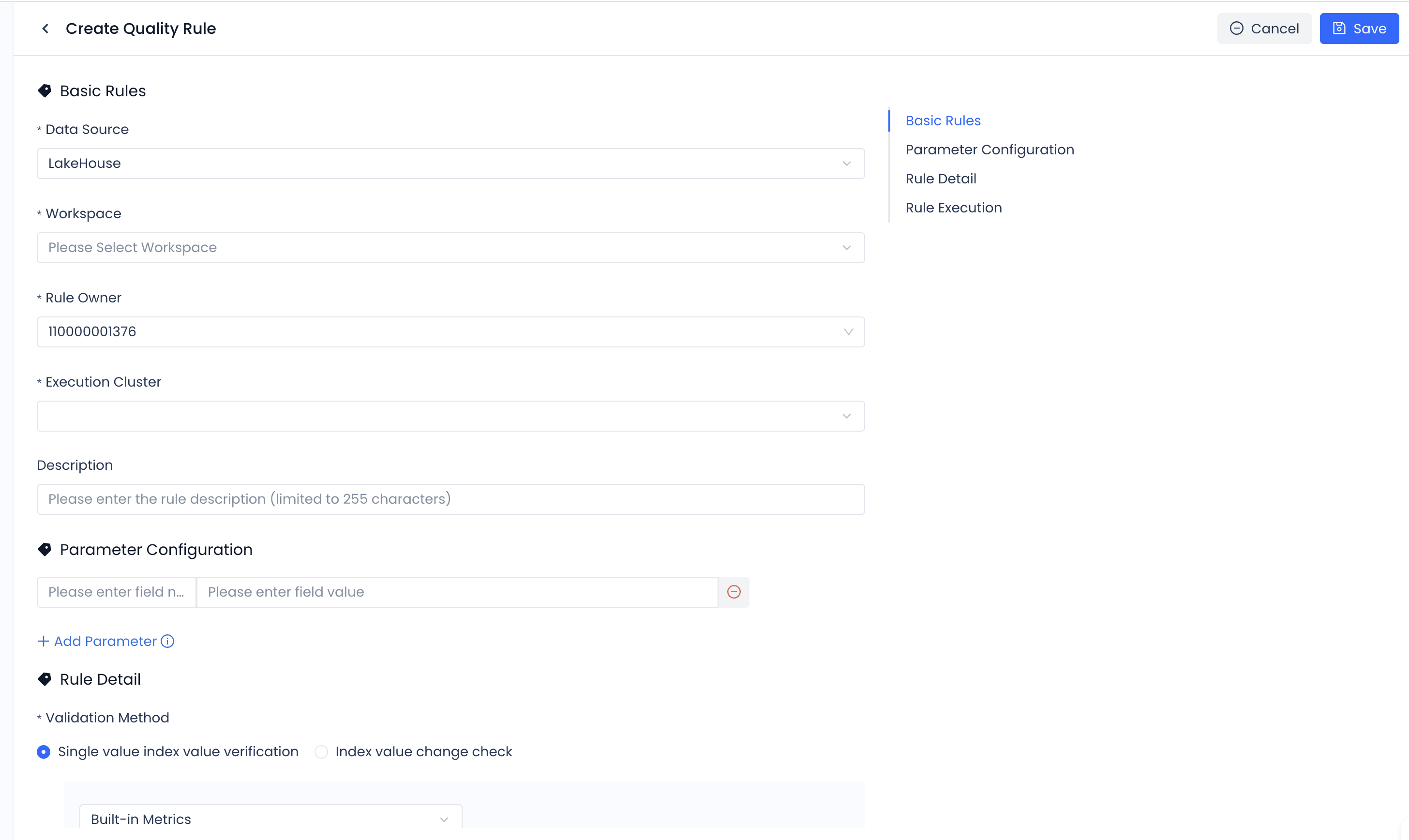

Create New Quality Rule

- In the quality rules list or in the rule list of the verification object, click the "Create Rule" button to enter the new quality rule page.

- Fill in the required configuration items, such as data source, workspace, verification object, person in charge, description, parameter configuration, value filtering, verification method, expected result, trigger method, execution cluster, and timeout period.

| Configuration Item | Configuration Description |
| --- | --- |
| Data Source | The type of data source. Currently only Lakehouse data sources are supported. |
| Workspace | The workspace to which the validation object belongs. |
| Validation Object | When the validation object is a Lakehouse table, select its schema and name (table name, view name, etc.). |
| Person in Charge | The person responsible for the quality rule. This determines who receives alerts. |
| Description | The description defined for the quality rule. |
| Parameter Configuration | When the metric value is calculated using value filtering or custom SQL, the rule can reference predefined dynamic parameter values. For example, define the following parameter: `partition = $[yyyyMMdd]` |
| Value Filtering | Filters the range of objects that need to be validated, such as filtering by partition. Parameter references are supported, for example: `dt = ${partition}` |
| Validation Method - Built-in Metrics | System built-in validation metrics. Select as needed. |
| Validation Method - Custom SQL | If the built-in validation metrics do not meet your needs, you can calculate the metric value using custom SQL. Special note: the result of the custom SQL must be a single numeric value so that it can be compared. |
| Expected Result | Defines the expected result of the metric value. |
| Trigger Method | Configures how the quality rule is triggered to run.<br>1. Scheduled Trigger: The system triggers a validation run at the given scheduled time.<br>2. Periodic Task Trigger: Triggered by the associated periodic task instance. The quality rule runs after the instance runs successfully. For periodic task triggers, there are two scheduling blocking options:<br>A: Strong Blocking Scheduling: If the quality rule validation fails, the associated scheduling task instance is marked as failed, which blocks the downstream instances of that instance.<br>B: Non-blocking Scheduling: The quality rule runs as a bypass and does not affect the running status of the associated scheduling task instance.<br>3. Manual Trigger: Manually triggered as needed. |
| Execution Cluster | The computing cluster in the workspace where the quality rule runs. |
| Timeout | If the quality rule validation is not completed within the set timeout, the system cancels it automatically. |
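To make the relationship between Parameter Configuration and Value Filtering concrete, the following minimal sketch shows how a parameter such as `partition = $[yyyyMMdd]` could be expanded and then referenced as `${partition}` in a filter. It is an assumption about the general mechanism, not the product's resolver: the function names, the supported pattern tokens (`yyyy`, `MM`, `dd`), and the choice of base date are all hypothetical.

```python
import re
from datetime import date

# Assumed mapping from date-pattern tokens to strftime directives.
_TOKENS = {"yyyy": "%Y", "MM": "%m", "dd": "%d"}


def resolve_time_pattern(expr, run_date):
    """Expand every $[...] date pattern in expr using run_date."""
    def expand(match):
        fmt = match.group(1)
        for token, directive in _TOKENS.items():
            fmt = fmt.replace(token, directive)
        return run_date.strftime(fmt)

    return re.sub(r"\$\[([^\]]+)\]", expand, expr)


def render_filter(filter_expr, params, run_date):
    """Resolve the parameters, then substitute ${name} references in the filter."""
    resolved = {
        name: resolve_time_pattern(value, run_date)
        for name, value in params.items()
    }
    return re.sub(r"\$\{(\w+)\}", lambda m: resolved[m.group(1)], filter_expr)


print(render_filter("dt = ${partition}",
                    {"partition": "$[yyyyMMdd]"},
                    date(2024, 5, 31)))
# prints: dt = 20240531
```

Which date the real system uses as the base for `$[yyyyMMdd]` (for example the scheduled time or the current time) is not stated here, so the sketch takes it as an explicit argument.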

After filling in the required configuration items, click the "Confirm" button to create the rule.

- Example: If you want to check whether the number of records in a table meets expectations, you can select "Record Count" as the built-in metric, set the value filter to a specific partition, set the expected result to a specific value, set the trigger method to scheduled trigger, and set the execution cluster to the computing cluster in your workspace.
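The record count example above can be approximated locally as follows. This sketch uses an in-memory SQLite database as a stand-in for a Lakehouse table, and the `orders` table, the comparator set, and the `run_record_count_rule` function are hypothetical names introduced only for illustration. It shows the general shape of a rule: compute a single numeric metric over the filtered rows, then compare it with the expected result.

```python
import operator
import sqlite3

# Assumed set of comparison operators for the expected result.
COMPARATORS = {
    "==": operator.eq,
    "!=": operator.ne,
    ">": operator.gt,
    ">=": operator.ge,
    "<": operator.lt,
    "<=": operator.le,
}


def run_record_count_rule(conn, table, value_filter, comparator, expected):
    """Count the rows matching value_filter and compare with expected.

    The SQL is built by string formatting for illustration only; never
    do this with untrusted input.
    """
    sql = f"SELECT COUNT(*) FROM {table} WHERE {value_filter}"
    (metric_value,) = conn.execute(sql).fetchone()  # single numeric value
    passed = COMPARATORS[comparator](metric_value, expected)
    return metric_value, passed


# Stand-in data: two rows in the partition under test, one in another.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, dt TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, "20240531"), (2, "20240531"), (3, "20240530")],
)

metric_value, passed = run_record_count_rule(
    conn, "orders", "dt = '20240531'", ">=", 2
)
print(metric_value, passed)  # prints: 2 True
```

A custom SQL rule would follow the same pattern, with the `COUNT(*)` query replaced by any statement that returns one numeric value.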

Test Run Quality Rule

After creating a quality rule, use the "Test Run" feature to verify that the configuration is correct. After a successful test run, review the test run results and adjust the rule if needed.

View Test Run Results

After clicking "Test Run," follow the prompts to click "View Results" to see the test run validation results.

Configure Monitoring Alerts

To ensure that data quality issues are addressed promptly, you can configure monitoring alerts for quality rules. There are two ways:

- Enable Global Quality Monitoring Alerts: In the monitoring alert module, search for "Data Quality Check Failure" and enable the system's built-in global quality validation monitoring rule.

- Configure Custom Quality Monitoring Alerts: Create custom monitoring rules, select "Quality Rule Validation Failure" as the monitoring message, and set filter conditions such as workspace or specific validation object.

Validation Objects

On the validation objects page, you can manage all quality rules by the dimension of the validation object (table). Using the search filter area, you can quickly locate specific rules.

Validation Results

Validation Results List

On the validation results list page, you can view the running status of all quality rules. Use the search and filter area to find specific validation results.

Operations on Validation Results

For each validation result, you can perform the following operations:

- Terminate: Cancel the current validation run.

- Set Success/Set Failure: Manually set the validation result to success or failure.

- Revalidate: Trigger the quality validation to run again. Note that if a rule that failed validation passes upon revalidation, no further monitoring alerts are sent for it.

With the above functions, you can effectively manage and monitor data quality, ensuring that the value of data in business applications is fully realized.