Batch import data from Object Storage(OSS)

This document details how to use the Lakehouse SQL engine to import data from object storage. Currently, Lakehouse supports two main data import modes:

- Import using Volume: Import data by creating and managing Volumes on object storage.

- Import using Copy command: Load data directly from object storage using the SQL COPY command.

Reference Documentation

Application Scenarios

- Quickly read large amounts of data using the performance advantages of the SQL engine, efficiently handling large-scale datasets.

- Support data transformation using SQL during the data import process, achieving ETL (Extract, Transform, Load) processes.

- It is recommended to choose a General Purpose Virtual Cluster when importing data to meet the needs of batch jobs and data loading jobs.

Usage Restrictions

- Object Storage Support: Currently, the target object storage for Volume only supports Alibaba Cloud OSS and Tencent Cloud COS.

- Cost Description: Downloading files from external Volume may incur object storage download fees on the cloud account. Please refer to the billing description of the respective cloud service provider for specific fees. If Lakehouse and object storage are located in the same region, using the internal network ENDPOINT can avoid additional costs.

- Cross-Cloud Import: Currently, cross-cloud service provider data import is not supported (it will be supported in future versions).

Use Cases

Import CSV Data from OSS using Volume

This document details how to use Lakehouse's Volume and COPY command to import the public dataset of Brazilian E-commerce from Alibaba Cloud Object Storage Service (OSS). Alternatively, you can directly download the dataset for this case through this link and then upload it to OSS. This process includes table creation, object storage connection configuration, Volume management, and data loading and status checking.

Prerequisites

- Ensure the target table

brazilian_customerhas been created:

- Have INSERT permission on the target table.

Create CONNECTION and VOLUME for connecting object storage

- Create a STORAGE CONNECTION to store and desensitize the connection information of object storage, which is convenient for reuse and management in Lakehouse.

- Create an EXTERNAL VOLUME to manage the data on OSS.

Using Volume to Load Data

- Specify the files that need to be read from the Volume in the

filesparameter. - You can set parameters for the CSV file in

options, such as the delimiter, etc. Refer to the documentation for specific settings. - In this case, the data will be transformed during loading, excluding records where the city is 'curitiba'.

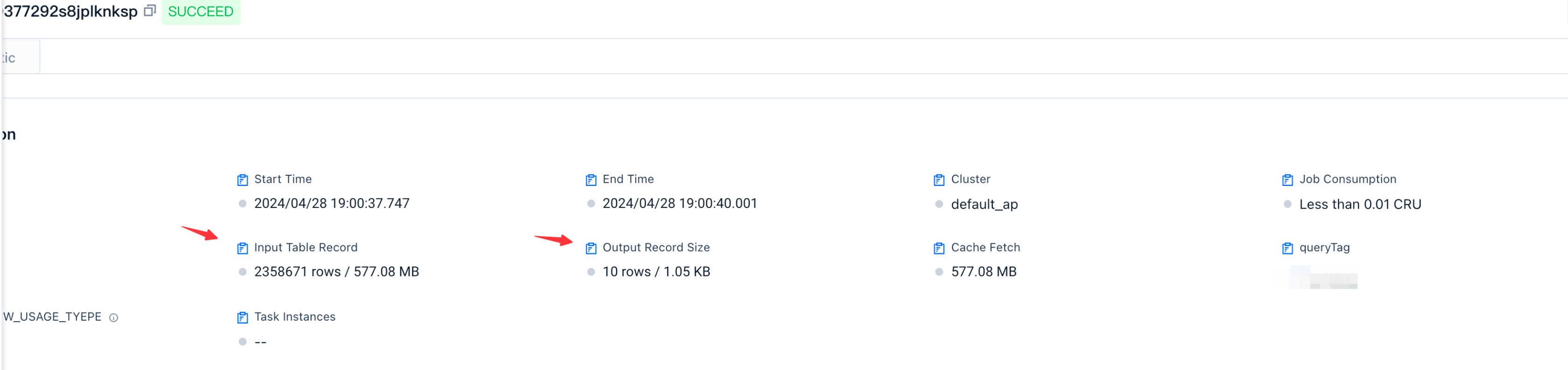

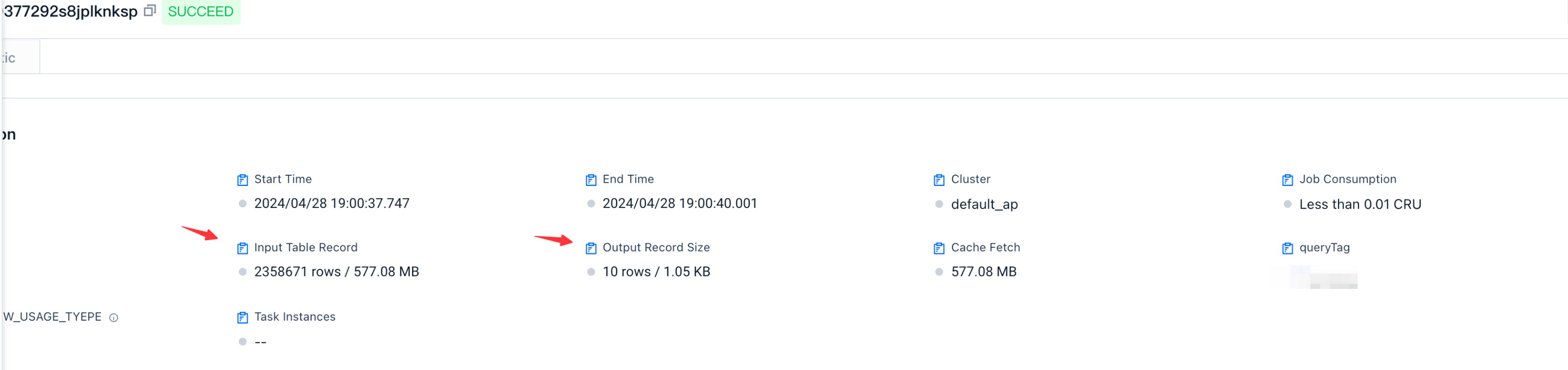

View Import Data Status

Check the job execution status in the job run history of Lakehouse to confirm whether the data import was successful.

Import Data from OSS Using COPY Command

This article introduces how to use Lakehouse's COPY command to import a public dataset of Brazilian E-commerce from Alibaba Cloud Object Storage Service (OSS), or you can directly download the dataset for this case through this link and then upload it to OSS. This process involves creating the data table, connecting and configuring the Volume, as well as loading the data and checking the status.

Prerequisites

- Ensure that the target table

brazilian_geolocationhas been created:

- Have INSERT permission on the target table.

Create CONNECTION and VOLUME for connecting object storage

-

Create a STORAGE CONNECTION to store and desensitize the connection information of the object storage, so that it can be reused and managed multiple times in the Lakehouse.

-

Create an EXTERNAL VOLUME to manage the data on OSS.

-

Using the COPY Command to Load Data into a Table

Use the COPY command to load data from an OSS Volume into the brazilian_customer table.

View Import Data Status

Check the job execution status in the job run history of Lakehouse to confirm whether the data import was successful.