Analysis of Structured and Semi-Structured Data in VOLUME

Syntax:

Parameter Description:

column_name and column_type: Optional, Singdata supports automatic file schema recognition. It is recommended not to fill in, as the column names and types in the specified file need to match the predefined column types in the file.

- Automatic file schema recognition for CSV files will automatically generate fields, with field numbers starting from f0. Currently, the automatically recognized types are int, double, string, and bool.

- For Parquet and ORC formats, the field names and types stored in the file will be automatically recognized. If the number of columns in the specified file is inconsistent, Singdata will attempt to merge them, and if it cannot merge, an error will be reported.

FileFormatParams:

CSV format:

- sep: Column separator, default is ",". Supports a maximum length of 1 character, for example:

'sep'=',' - compression: Configures the file compression format. Supported compression formats are: gzip/zstd/zlib. For example:

'compression'='gzip' - lineSep: Line separator, default value is "\n". Supports a maximum length of 2 characters, for example:

'lineSep'='$' - quote: Sets a single character used to escape quote values. The default value is a double quote

", for example:'quote'='"' - header: Whether to parse the header, default is false. Boolean type, for example:

'header'='true' - timeZone: Configures the time zone, no default value. Used to specify the time zone for the time format in the file. For example:

'timeZone' = 'Asia/Shanghai' - escape: Used to escape quotes within quoted values, default value is

\, for example:'escape'='\'

Parquet, ORC, BSON formats:

- None

FILES: Specify files. For example: files('part-00002.snappy.parquet','part-00003.snappy.parquet')

SUBDIRECTORY: Specify subdirectory. For example: subdirectory 'month=02'

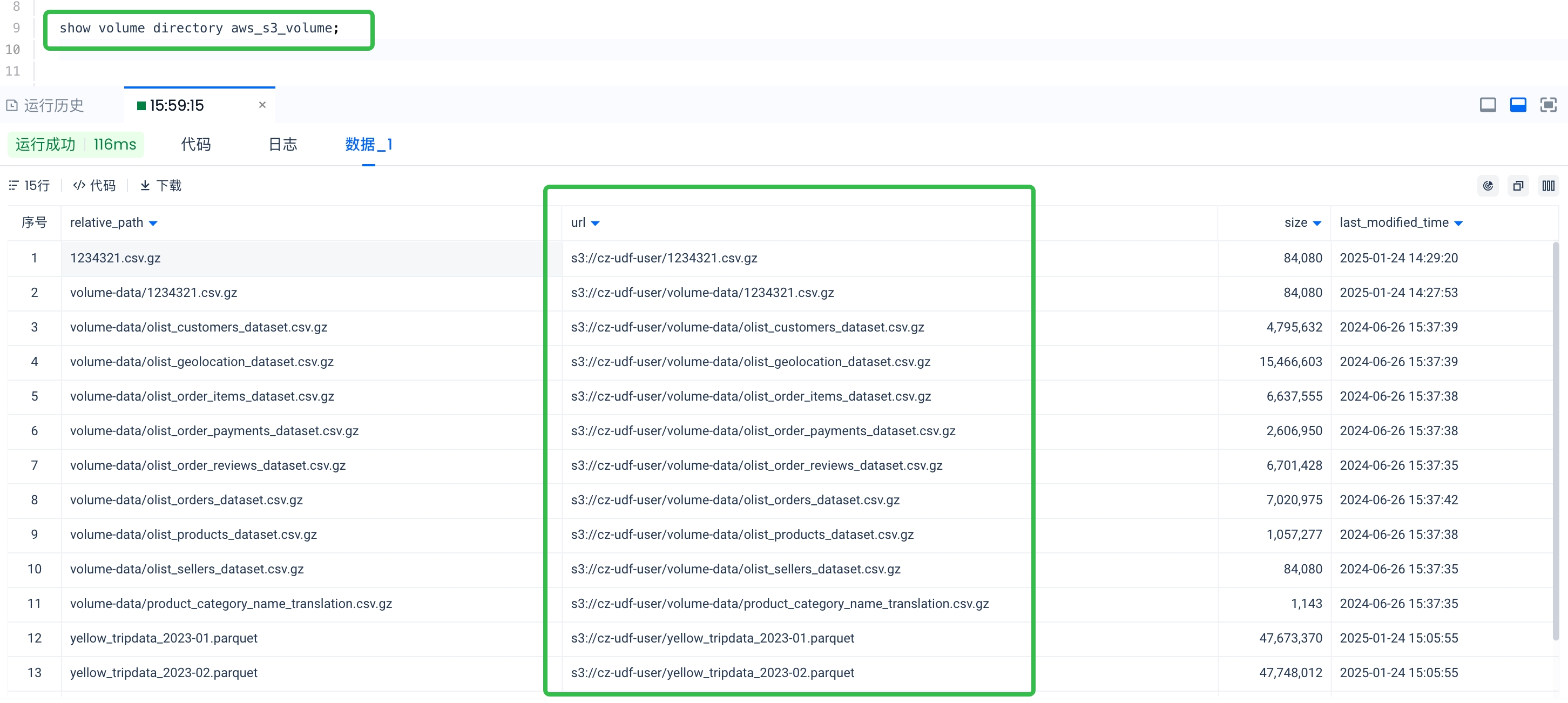

REGEXP <pattern>: Supports using regular expressions to match files. Note that the target of the regular expression match is the complete object storage path of the file (e.g., 's3://cz-udf-user/volume-data/1234321.csv.gz'), not the relative path of the file in the Volume object.

Matching rules:

.- Matches any single character except newline*- Matches the preceding element zero or more times, often used with.. For example,.*combination means matching any character any number of times+- Matches the preceding element one or more times, often used with.. For example,.+means matching any character one or more times?- Matches the preceding element zero or one time[abc]: Matches any one of the characters a, b, or c[abc]: Matches any character except a, b, or c[a-z]: Matches lowercase letters a to z[A-Z]: Matches uppercase letters A to Z[0-9]: Matches digits 0 to 9

For example:

REGEXP '.*.parquet'matches files with the suffixparquetREGEXP '.*yellow_tripdata_202[0-4]-0[23].parquet'matches files containingyellow_tripdata_, with the year from2020to2024, the month being02or03, and ending with.parquet

Example:

Query CSV format file:

Data Preparation:

Create a volume object: hz_csv_volume, bind to Alibaba Cloud OSS path: oss://hz-datalake/csv_files/, the file structure under this path is the CSV files of the Brazilian e-commerce dataset:

Query Example:

Data Import into Lakehouse:

You can import data into Singdata Lakehouse internal tables using the create table as select method:

Query Parquet Format Files:

Data Preparation:

Create a volume object: hz_parquet_volume, bind the Alibaba Cloud OSS path: oss://hz-datalake/yellowtrip-partitioned/. The file structure under this path includes the New York taxi dataset and some other scattered Parquet format files. The purpose is to demonstrate how to use the FILES | SUBDIRECTORY | REGEXP options to query the target data files. The organization of the files is as follows:

Query Example:

Query 1: Query the parquet files of month partitions 1-5

Query 2: Query files with the parquet suffix starting with part-, which contain complex types:

Query 3: Use the files parameter to include specific files, complex type query:

Query ORC Format Files:

Data Preparation:

Create a volume object: hz_orc_volume, bind the Alibaba Cloud OSS path: oss://hz-datalake/orcfiles/, the file structure under this path is: