What CTOs Miss When Evaluating Need-Based Personalized Recommendations Capabilities

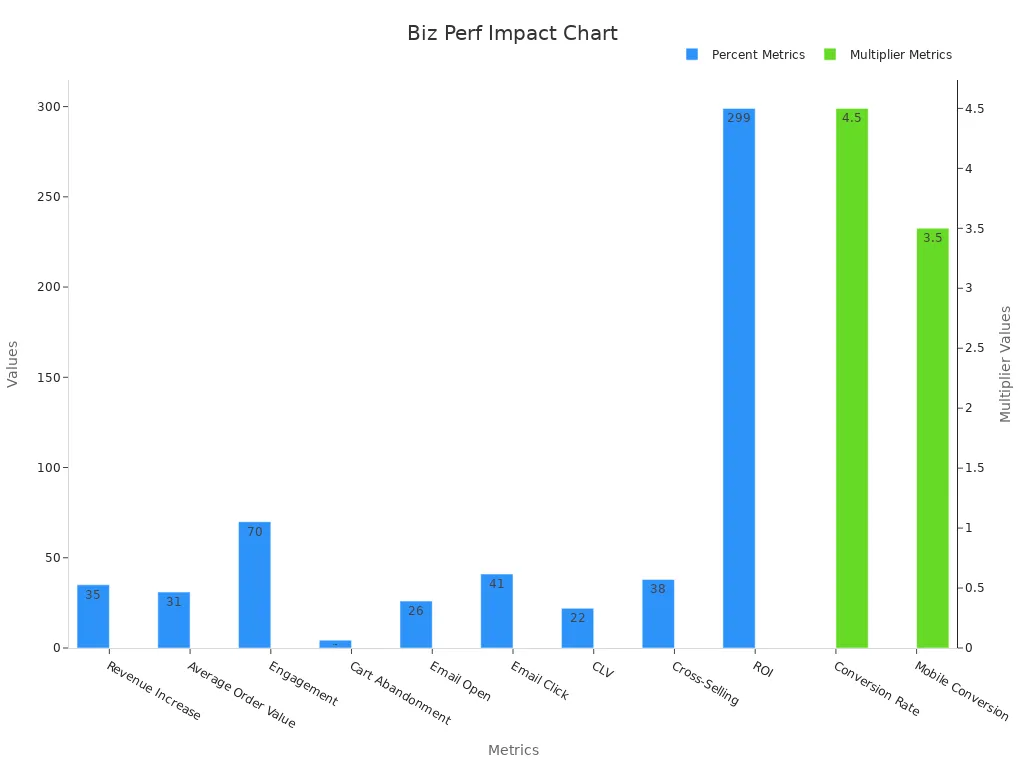

Many technology leaders focus on algorithms and automation but overlook critical elements when evaluating need-based personalized recommendation systems. These gaps can lead to missed revenue, lower conversion rates, and reduced user engagement. One study found that personalized recommendations can increase firm revenue by up to 29%, with relevance driving an additional 30%. The following table summarizes the reported business impact:

| Metric | Impact/Statistic | Source |
|---|---|---|
| Revenue Increase | | Boston Consulting Group (2023) |
| Conversion Rate | Up to 4.5x higher | Monetate (2022) |
| Customer Lifetime Value | 22% increase | Accenture (2023) |
| ROI | 299% over three years | Forrester (2022) |

When CTOs miss these critical factors, user trust also suffers. Over 74% of users say they prefer personalized recommendations, but even a moderate perception of risk can reduce their trust and engagement. These oversights directly affect decision-making and long-term business growth.

Key Takeaways

CTOs must clearly distinguish need-based personalization from behavior-based methods to choose the right strategy for their business.

Aligning personalization with business goals and customer experience drives higher revenue, loyalty, and innovation.

Balancing automation with human oversight and ensuring transparency builds user trust and reduces risks from black-box models.

Collecting relevant user data while respecting privacy laws is essential for accurate and ethical personalization.

Cross-functional collaboration and continuous learning improve recommendation quality and help avoid costly mistakes.

What CTOs Miss About Need-Based Personalization

Misunderstanding the Distinction from Behavior-Based Approaches

Many technology leaders confuse need-based personalization with behavior-based methods. Behavior-based personalization uses data such as browsing history, purchase patterns, and time spent on site. For example, Netflix suggests shows based on what users have watched, and the company has estimated that its personalization and recommendations save more than $1 billion a year, largely by reducing churn. Retailers often trigger popups on exit intent or customize headlines based on search keywords to raise revenue and conversion rates.

Need-based personalization, on the other hand, focuses on static customer needs, demographics, or context. It uses rules to segment users by factors such as location or device type. For instance, a company might show different recommendations to users in different regions or on different devices. Some organizations use a hybrid approach, combining both methods. Apple sometimes overrides algorithmic recommendations with business rules to promote new products, balancing automation with business goals.

Tip: CTOs should clearly define the differences between these approaches. This helps teams choose the right strategy for their business needs.
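To make the distinction concrete, here is a minimal Python sketch of a hybrid recommender, assuming a simple in-memory rule table. The rule conditions, item names (`SEGMENT_RULES`, `PINNED_ITEMS`, `new-launch-x`, and so on), and the ordering policy are hypothetical illustrations, not any company's actual system. Need-based rules work from static context such as region, device, and stated needs; behavior-based scores come from past activity; and a pinned business rule overrides both, in the spirit of the Apple example.

```python
from dataclasses import dataclass, field

@dataclass
class UserContext:
    """Static, need-based signals: no browsing or purchase history required."""
    region: str
    device: str
    stated_needs: list[str] = field(default_factory=list)

# Hypothetical rule table: (condition on the user's context, items to suggest).
SEGMENT_RULES = [
    (lambda u: "budget" in u.stated_needs, ["basic-plan", "refurbished-phone"]),
    (lambda u: u.device == "mobile", ["mobile-app-bundle"]),
    (lambda u: u.region == "EU", ["eu-shipping-offer"]),
]

# Hypothetical business rule that overrides the algorithm, e.g. to promote a launch.
PINNED_ITEMS = ["new-launch-x"]

def need_based_candidates(user: UserContext) -> list[str]:
    """Collect items from every rule whose condition matches, without duplicates."""
    items: list[str] = []
    for condition, candidates in SEGMENT_RULES:
        if condition(user):
            for item in candidates:
                if item not in items:
                    items.append(item)
    return items

def hybrid_recommend(user: UserContext, behavior_scores: dict[str, float], k: int = 3) -> list[str]:
    """Pinned business rules first, then behavior-ranked items, then need-based fill."""
    by_behavior = sorted(behavior_scores, key=behavior_scores.get, reverse=True)
    result: list[str] = []
    for item in PINNED_ITEMS + by_behavior + need_based_candidates(user):
        if len(result) >= k:
            break
        if item not in result:
            result.append(item)
    return result

# A new user with no history still gets relevant suggestions from need-based rules.
new_user = UserContext(region="EU", device="mobile", stated_needs=["budget"])
print(hybrid_recommend(new_user, behavior_scores={}))
# ['new-launch-x', 'basic-plan', 'refurbished-phone']
```

Because the need-based rules require no history, they fill the list for new users; as behavior scores accumulate, they push rule-based items further down.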

Overlooking Alignment with Business Goals and Customer Experience

CTOs miss a key opportunity when they fail to align personalization strategies with business goals and customer experience. Leading companies like Starbucks, Amazon, and Nike show that successful personalization supports objectives such as sales growth, customer loyalty, and innovation. These organizations use CRM systems, AI, and customer feedback to create a 360-degree view of the customer.

Microsoft improved customer satisfaction by acting on feedback and fostering collaboration.

Starbucks increased loyalty through personalized mobile app experiences.

Amazon drives retention with tailored recommendations and fast delivery.

Apple uses feedback to innovate and improve satisfaction.

Nike boosts online sales with data-driven personalization.

Building cross-functional teams, integrating data, and measuring success with clear KPIs help maintain alignment. Leadership plays a vital role by setting the vision and ensuring resources support customer experience strategies.

Key Oversights CTOs Miss in Evaluation

Overreliance on Automation and Black-Box Models

Many organizations trust automated systems to handle personalized recommendations. They often believe that more automation leads to better results. However, this approach can create serious risks. When teams rely too much on black-box models, they lose sight of how decisions are made. This lack of understanding can lead to errors and bias.

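A ProPublica analysis of the COMPAS risk-assessment algorithm used in criminal justice found that Black defendants who did not go on to reoffend were labeled higher risk about 44% of the time, nearly twice the rate for white defendants. This disparity raised fairness concerns and damaged trust in AI systems.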

The European Central Bank found that heavy use of AI in finance increases the risk of operational failures and cyberattacks. These problems can disrupt entire markets.

One study reported that 27.7% of students who relied on AI dialogue systems showed a loss of decision-making skills, suggesting that overreliance can weaken human abilities.

Organizations have seen cascading failures when flawed AI decisions spread across departments.

Excessive trust in AI reduces human oversight. This can cause critical errors and lower team performance, especially in high-stakes situations.

Note: CTOs should balance automation with human judgment. Teams need to understand how models work and keep people involved in important decisions.
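One way to keep people involved is a routing gate in front of the model. The sketch below assumes each recommendation carries a confidence score and a `high_stakes` flag; both fields, the 0.85 threshold, and the function names are hypothetical and would need to be set per use case.

```python
from dataclasses import dataclass

@dataclass
class Recommendation:
    item_id: str
    confidence: float   # model's confidence in [0, 1]
    high_stakes: bool   # e.g. credit limits, health guidance, or pricing changes

def route(rec: Recommendation, auto_threshold: float = 0.85) -> str:
    """Decide whether a recommendation ships automatically or goes to a person.

    High-stakes recommendations always get human review, and so do
    low-confidence ones; everything else is served automatically.
    """
    if rec.high_stakes or rec.confidence < auto_threshold:
        return "human_review"
    return "auto"

def triage(recs: list[Recommendation], auto_threshold: float = 0.85) -> dict[str, list[str]]:
    """Split a batch into auto-served item IDs and a human review queue."""
    queues: dict[str, list[str]] = {"auto": [], "human_review": []}
    for rec in recs:
        queues[route(rec, auto_threshold)].append(rec.item_id)
    return queues

batch = [
    Recommendation("sneakers", 0.93, high_stakes=False),
    Recommendation("loan-offer", 0.97, high_stakes=True),
    Recommendation("insurance-addon", 0.60, high_stakes=False),
]
print(triage(batch))
# {'auto': ['sneakers'], 'human_review': ['loan-offer', 'insurance-addon']}
```

Note that the loan offer goes to review despite its high confidence: for high-stakes decisions, the gate deliberately does not let model confidence replace human judgment.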

Lack of Transparency and Explainability

Many recommendation systems use complex algorithms that are hard to explain. When users do not understand why they receive certain suggestions, they may lose trust in the system. Transparency and explainability help users feel confident in the recommendations.

A recent study showed that adding explainability tools like LIME and SHAP to recommendation systems increased precision by 3%. Users also found the recommendations easier to understand and trust, which addresses concerns about the opaque nature of AI systems. Researchers tested these methods on datasets such as MovieLens and Amazon reviews and found that user satisfaction and trust grew as recommendations became more transparent. Dimensionality-reduction techniques such as PCA and t-SNE also help teams visualize and interpret complex data.

Tip: Teams should use explainability techniques to make AI-driven recommendations clearer. This builds trust and helps users make better choices.
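Libraries such as SHAP and LIME handle arbitrary models. For the special case of a linear scoring model, the attributions can be computed directly, which makes the idea easy to see without extra dependencies. The minimal sketch below assumes a linear score over independent features; the feature names and weights are hypothetical.

```python
def linear_attributions(weights: dict[str, float], x: dict[str, float],
                        background_mean: dict[str, float]) -> dict[str, float]:
    """Per-feature contributions for a linear score f(x) = bias + sum(w_i * x_i).

    For a linear model with independent features, each contribution
    w_i * (x_i - E[x_i]) equals that feature's SHAP value, and the contributions
    sum to f(x) - f(E[x]).
    """
    return {name: w * (x[name] - background_mean[name]) for name, w in weights.items()}

def explain(weights, bias, x, background_mean, top_n=2):
    """Return the score, the average-user baseline score, and the top reasons."""
    score = bias + sum(w * x[name] for name, w in weights.items())
    baseline = bias + sum(w * background_mean[name] for name, w in weights.items())
    contribs = linear_attributions(weights, x, background_mean)
    reasons = sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_n]
    return score, baseline, reasons

# Hypothetical features for one user-item pair.
weights = {"matches_stated_need": 2.0, "price_fit": 1.0, "popularity": 0.5}
item = {"matches_stated_need": 1.0, "price_fit": 0.5, "popularity": 0.2}
average_user = {"matches_stated_need": 0.3, "price_fit": 0.5, "popularity": 0.6}

score, baseline, reasons = explain(weights, 0.0, item, average_user)
for feature, contribution in reasons:
    print(f"{feature}: {contribution:+.2f}")
# matches_stated_need: +1.40
# popularity: -0.20
```

The top reasons translate directly into user-facing text such as "Recommended because it matches a need you told us about."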

Insufficient User Context and Data Depth

Accurate personalization depends on collecting enough user data and understanding the context. Many systems fail because they do not capture the full picture of user needs and preferences. Personalized services work best when they use both personal data and behavioral traces.

Collecting detailed user context is essential for optimizing recommendations and targeted advertising.

Users have different attitudes toward personalization based on the type of data and situation. Systems must capture these differences to tailor experiences effectively.

Many users do not realize how much data is needed for personalization. This lack of awareness affects their acceptance and behavior.

Personalization must respect privacy preferences and follow data minimization rules, such as those in GDPR Article 5. This helps avoid privacy risks and keeps user trust.

Behavioral data can reveal sensitive information beyond what users share directly. Teams must collect relevant data while protecting privacy.

Users often do not take enough steps to protect their privacy, even when they worry about it. Transparency and user empowerment tools are important for ethical data management.

Public opinion shows that personalization should not be used in sensitive areas like political campaigns. Context matters when using data.

Callout: Teams should collect enough relevant data to improve personalization but must always respect user privacy and legal requirements.
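A simple way to enforce "collect enough, but no more" in code is a per-purpose allowlist applied before data reaches the recommender. This is a minimal sketch with assumed field and purpose names; on its own it does not make a system compliant, which also depends on lawful basis, consent, and retention rules.

```python
# Hypothetical purpose-to-field allowlist. Each processing purpose declares the only
# fields it may read, reflecting the data minimisation principle in GDPR Article 5(1)(c).
ALLOWED_FIELDS: dict[str, set[str]] = {
    "recommendations": {"user_id", "region", "device", "stated_needs"},
    "billing": {"user_id", "billing_address", "payment_token"},
}

def minimize(record: dict, purpose: str) -> dict:
    """Return a copy of `record` containing only the fields allowed for `purpose`.

    An unregistered purpose raises an error instead of silently passing data through.
    """
    if purpose not in ALLOWED_FIELDS:
        raise ValueError(f"no data-use policy registered for purpose {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}

profile = {
    "user_id": "u-17",
    "region": "EU",
    "device": "mobile",
    "stated_needs": ["budget"],
    "date_of_birth": "1990-04-02",
    "payment_token": "tok_abc",
}
print(minimize(profile, "recommendations"))
# {'user_id': 'u-17', 'region': 'EU', 'device': 'mobile', 'stated_needs': ['budget']}
```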

Ignoring Cognitive and Behavioral Biases

Many organizations overlook the influence of cognitive and behavioral biases when evaluating personalized recommendation systems. These biases shape how users interact with recommendations and how teams interpret system performance. Research in "The Importance of Cognitive Biases in the Recommendation Ecosystem" highlights several biases, such as the feature-positive effect, the IKEA effect, and cultural homophily. These biases can affect user engagement, decision-making, and fairness in recommendations. For example, confirmation bias leads users to favor suggestions that match their existing beliefs, while evaluation bias can skew how users rate recommendations.

A study on preference-inconsistent recommendations found that prior knowledge and mindset, such as cooperation or competition, change how users respond to suggestions. Users with more knowledge or a cooperative mindset may accept recommendations that challenge their preferences, while others may reject them. These findings show that biases do not only affect users but also impact how teams assess the effectiveness of recommendation systems.

Teams should recognize and address these biases at every stage of the recommendation process. This approach helps ensure fair, accurate, and user-friendly systems.
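Part of the assessment problem is that logged feedback reflects only the items the current system chose to show, so raw click rates favor whatever that system already promotes. Reweighting is one common correction. The sketch below implements clipped inverse propensity scoring (IPS), a standard off-policy evaluation technique. It addresses this exposure bias in logged data rather than users' cognitive biases directly, and it assumes the logging probabilities were recorded and are nonzero. The toy logs and policies are hypothetical.

```python
def ips_estimate(logs, target_prob, clip=10.0):
    """Clipped inverse propensity scoring (IPS) estimate of a new policy's average reward.

    logs: list of (context, action, reward, logging_prob) tuples, where logging_prob
          is the probability that the logging (current) policy showed `action`.
    target_prob(context, action): probability that the new policy would show `action`.
    Clipping the importance weight at `clip` trades a little bias for lower variance.
    """
    if not logs:
        raise ValueError("need at least one logged interaction")
    total = 0.0
    for context, action, reward, logging_prob in logs:
        weight = min(target_prob(context, action) / logging_prob, clip)
        total += weight * reward
    return total / len(logs)

# Hypothetical logs from a policy that showed item "a" or "b" with probability 0.5 each.
logs = [
    ("u1", "a", 1, 0.5),
    ("u2", "b", 0, 0.5),
    ("u3", "a", 1, 0.5),
    ("u4", "b", 1, 0.5),
]

def always_a(context, action):
    return 1.0 if action == "a" else 0.0

def always_b(context, action):
    return 1.0 if action == "b" else 0.0

print(ips_estimate(logs, always_a), ips_estimate(logs, always_b))
# 1.0 0.5
```

The naive click rate across all logs is 0.75, a figure that describes neither candidate policy. The IPS estimates match what each policy would have earned on the items it would actually show.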

Underestimating Cross-Functional Collaboration Needs

CTOs miss a critical factor when they underestimate the need for cross-functional collaboration in building and evaluating recommendation systems. Successful personalization requires input from data scientists, engineers, product managers, marketers, and legal experts. Each group brings unique insights that help align technical solutions with business goals and user needs.

Without strong collaboration, teams may develop systems that work well technically but fail to deliver value to users or meet regulatory requirements. For example, engineers may focus on algorithm performance, while marketers prioritize customer experience. Legal teams ensure compliance with privacy laws. When these groups work together, they create balanced solutions that support both innovation and trust.

Cross-functional teams help identify blind spots and reduce the risk of bias.

Collaboration improves communication and speeds up problem-solving.

Diverse perspectives lead to more creative and effective personalization strategies.

Building a culture of collaboration ensures that recommendation systems serve both business objectives and user interests.

Neglecting Ethical and Data Privacy Considerations

Neglecting ethical and data privacy considerations can lead to serious business and legal consequences. Several high-profile cases highlight these risks:

The UK Information Commissioner’s Office ruled that the Royal Free NHS Foundation Trust broke data protection laws by sharing patient data with Google DeepMind without proper consent. This resulted in regulatory action and damaged trust.

The Dinerstein v. Google lawsuit and Google's Project Nightingale partnership with Ascension both raised concerns about improper data sharing in healthcare AI, leading to legal challenges and public scrutiny.

Failing to inform patients about data processing undermines trust, which is essential for successful AI adoption in clinical settings.

Ethical lapses in data privacy can result in lawsuits, regulatory penalties, and loss of privacy rights.

Regulatory frameworks like the EU's GDPR and AI Act set strict rules for AI systems. Non-compliance can lead to lawsuits, fines, and reputational harm. Ethical AI development requires informed consent, data anonymization, and clear tracking of how data is used. Organizations must implement strong data governance, including responsible data collection, encryption, access controls, and regular audits. Limiting data use to specific purposes reduces security risks and supports compliance.

Regular staff training and incident response planning help maintain ethical standards.

Failing to adopt these safeguards can erode client trust and damage professional reputation.

Organizations that prioritize transparency, accountability, and privacy build stronger relationships with users and reduce legal exposure.
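The anonymization and access-control practices above can start with a small step: replacing raw user IDs before events reach analytics. This minimal sketch uses Python's standard `hmac` and `hashlib` modules. The event fields and the choice to drop `email` are assumptions, and key storage and rotation are not covered. Keyed hashing like this is pseudonymisation, not full anonymisation, because anyone who holds the key can re-link the tokens.

```python
import hashlib
import hmac

def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 token.

    The same ID and key always produce the same token, so records can still be
    joined for recommendations. Without the key, tokens cannot feasibly be linked
    back to IDs; with it, they can be recomputed, so under GDPR this counts as
    pseudonymisation rather than anonymisation.
    """
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

def prepare_event(event: dict, secret_key: bytes) -> dict:
    """Swap the raw user ID for a token and drop the email before analytics use."""
    cleaned = {k: v for k, v in event.items() if k not in ("user_id", "email")}
    cleaned["user_token"] = pseudonymize(event["user_id"], secret_key)
    return cleaned

# In practice the key would come from a secrets manager, never from source code.
key = b"example-key-for-illustration-only"
event = {"user_id": "user-42", "email": "a@example.com", "item": "basic-plan"}
print(sorted(prepare_event(event, key)))
# ['item', 'user_token']
```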

Consequences When CTOs Miss Critical Factors

Business Performance and Scalability Risks

Overlooking critical factors in need-based personalization can significantly hinder business performance and scalability. Systems that rely on incomplete or poorly integrated data often fail to deliver accurate recommendations, leading to reduced customer satisfaction and lower conversion rates. For instance, businesses that neglect to align their recommendation systems with scalability requirements may encounter bottlenecks during peak usage, resulting in slower response times and frustrated users.

Additionally, overreliance on black-box models without proper oversight can lead to cascading failures across departments. These failures not only disrupt operations but also increase costs associated with troubleshooting and system downtime. Companies that fail to address these risks may struggle to maintain competitive advantages in fast-evolving markets.

Tip: CTOs should prioritize robust infrastructure and scalable architectures to ensure recommendation systems can handle growth without compromising performance.
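One illustration of protecting response times at peak load is a time-to-live (TTL) cache in front of the model call, with a static fallback list when the model fails. The class name, TTL, and fallback items below are hypothetical. A production system would also need to bound the cache size, handle concurrency, and enforce timeouts on the model call, none of which this single-threaded sketch does.

```python
import time
from typing import Callable

class CachedRecommender:
    """Serve recommendations through a TTL cache with graceful degradation.

    `compute` stands in for an expensive model call (user_id -> item IDs).
    Fresh cache hits skip the model entirely; if the model raises, the last
    cached list (even if stale) or a static fallback list is served instead.
    """

    def __init__(self, compute: Callable[[str], list[str]], fallback: list[str],
                 ttl_seconds: float = 300.0, clock: Callable[[], float] = time.monotonic):
        self._compute = compute
        self._fallback = list(fallback)
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache: dict[str, tuple[float, list[str]]] = {}

    def get(self, user_id: str) -> list[str]:
        now = self._clock()
        hit = self._cache.get(user_id)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # fresh hit: no model call
        try:
            recs = self._compute(user_id)
        except Exception:
            # Degrade instead of failing the page: stale entry first, then fallback.
            return hit[1] if hit is not None else list(self._fallback)
        self._cache[user_id] = (now, recs)
        return recs

def slow_model(user_id: str) -> list[str]:
    return [f"{user_id}-pick-1", f"{user_id}-pick-2"]

recommender = CachedRecommender(slow_model, fallback=["bestseller-1", "bestseller-2"])
print(recommender.get("u1"))
# ['u1-pick-1', 'u1-pick-2']
```

The injectable `clock` keeps the cache testable; the fallback list ensures that a model outage degrades relevance rather than availability.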

Erosion of User Trust and Regulatory Compliance Issues

Neglecting transparency, ethical considerations, and data privacy can erode user trust and expose businesses to regulatory penalties. Oversight failures often lead to compliance issues, as seen in cases where organizations failed to hire qualified security officers or implement proper data governance. Such lapses result in legal consequences, including fines, lawsuits, and reputational damage.

Oversight prevents financial losses, protects reputation, and reduces regulatory penalties.

Non-compliance risks include class action lawsuits, SEC fines, and personal liability for executives.

For example, a healthcare organization faced public backlash and regulatory scrutiny after sharing patient data without proper consent. This incident not only damaged trust but also caused patients to switch to competitors perceived as more secure.

Callout: Continuous monitoring, leadership commitment, and employee training are essential to maintaining compliance and safeguarding user trust.

Poor Decision-Making and Missed Market Opportunities

Missing critical factors in evaluation often results in poor decision-making and lost opportunities. Incomplete or outdated data leads to targeting errors, irrelevant messaging, and inefficient resource allocation. For instance, Unity Technologies lost $110 million due to an ad targeting error caused by bad data, while Uber faced a $45 million miscalculation in driver payments. These examples highlight how data quality issues can skew metrics and waste resources.

Outdated customer data also prevents effective personalization, causing businesses to miss sales opportunities. Misleading insights from inaccurate data create false trends, leading to flawed strategic planning and increased risks. Companies that fail to address these issues risk falling behind competitors who leverage accurate, actionable insights.

Note: Investing in data quality and governance ensures better decision-making and maximizes market opportunities.
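A lightweight quality gate that runs before each training or scoring job can catch some of these problems early. The function below is a minimal sketch that checks completeness and freshness only. The field names, thresholds, and dates are hypothetical, and real pipelines typically add schema, range, and distribution checks.

```python
from datetime import datetime, timedelta, timezone

def quality_issues(rows, required_fields, timestamp_field, max_age,
                   max_missing_rate=0.05, now=None):
    """Flag data problems in a batch of customer records before model training.

    Reports required fields whose share of missing values exceeds max_missing_rate,
    and records whose timestamp is missing or older than max_age.
    The thresholds are illustrative defaults, not industry standards.
    """
    now = now or datetime.now(timezone.utc)
    n = len(rows)
    if n == 0:
        return ["batch is empty"]
    issues = []
    for name in required_fields:
        missing = sum(1 for row in rows if row.get(name) in (None, ""))
        if missing / n > max_missing_rate:
            issues.append(f"{name}: {missing}/{n} values missing")
    stale = sum(1 for row in rows
                if row.get(timestamp_field) is None or now - row[timestamp_field] > max_age)
    if stale:
        issues.append(f"{timestamp_field}: {stale}/{n} records missing or older than {max_age.days} days")
    return issues

rows = [
    {"user_id": "u1", "region": "EU", "updated_at": datetime(2024, 1, 30, tzinfo=timezone.utc)},
    {"user_id": "u2", "region": None, "updated_at": datetime(2023, 6, 1, tzinfo=timezone.utc)},
]
check_time = datetime(2024, 1, 31, tzinfo=timezone.utc)
for issue in quality_issues(rows, ["user_id", "region"], "updated_at",
                            max_age=timedelta(days=90), now=check_time):
    print(issue)
# region: 1/2 values missing
# updated_at: 1/2 records missing or older than 90 days
```

A job can refuse to train, or alert the data owner, whenever the returned list is non-empty.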

How CTOs Can Avoid These Misses

Demand Explainability and Model Transparency

CTOs must prioritize explainability and transparency when evaluating personalized recommendation systems. Complex algorithms often operate as "black boxes," making it difficult for teams to understand how decisions are made. Transparent systems not only build user trust but also ensure compliance with regulatory frameworks like the EU AI Act and NIST AI Risk Management Framework.

Criminal-justice risk assessment tools such as COMPAS faced backlash due to opaque decision-making, prompting the adoption of fairness assessments and human oversight.

DeepMind’s Kidney Disease Prediction system successfully incorporated explainability reports, enabling doctors to verify AI predictions before acting.

NASA’s Mars Rover AI Navigation employs ensemble learning to cross-validate decisions, preventing mission-critical failures.

CTOs should adopt tools like LIME, SHAP, and model cards to enhance transparency. Best practices include integrating manual override systems, hybrid human-AI decision-making models, and uncertainty indicators. These measures ensure accountability while improving user confidence in AI-driven recommendations.

Tip: Transparent systems empower teams to identify and address errors early, reducing risks and enhancing system reliability.
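Model cards are short, structured documents that record a model's intended use, training data, evaluation results, and limitations. A minimal sketch of keeping one as code follows. It lets a release check block deployment until every section is filled in. The field names and the release-gate idea are assumptions, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field

@dataclass
class ModelCard:
    """Minimal model card for a recommender.

    Field names are illustrative; teams usually extend them (fairness analysis,
    evaluation data, owners, and so on).
    """
    model_name: str
    version: str
    intended_use: str
    training_data: str
    metrics: dict[str, float] = field(default_factory=dict)
    out_of_scope_uses: list[str] = field(default_factory=list)
    known_limitations: list[str] = field(default_factory=list)
    human_override: str = ""

    def missing_sections(self) -> list[str]:
        """Sections still empty; a release checklist can require this to be []."""
        return [name for name, value in asdict(self).items() if value in ("", [], {})]

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2, sort_keys=True)

card = ModelCard(
    model_name="need-based-recs",
    version="1.2.0",
    intended_use="Rank product offers for signed-in retail customers",
    training_data="Order history and onboarding survey answers",
)
print(card.missing_sections())
# ['metrics', 'out_of_scope_uses', 'known_limitations', 'human_override']
```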

Invest in AI and Data Literacy Across Teams

Investing in AI and data literacy equips teams with the skills needed to maximize the potential of recommendation systems. AI systems require significant resources, including high-quality training data, robust infrastructure, and ongoing maintenance. Without proper literacy, teams struggle to interpret data and leverage AI effectively.

In healthcare, data literacy training reduced medical errors and improved patient outcomes by enabling staff to interpret patient data accurately.

Retail managers who underwent data literacy programs optimized inventory management, leading to fewer stockouts and increased profitability.

Lockheed Martin’s data literacy workshops enhanced employees' understanding of data usage, improving accuracy and operational efficiency.

Organizations that integrate domain experts with AI teams foster trust and practical application of AI insights. Collaborative projects, such as NBCUniversal’s forecasting initiative, demonstrate how domain expertise enhances machine-learning recommendations. Gartner emphasizes that data literacy is critical for creating a data-driven culture, which improves agility and digital transformation outcomes.

Callout: Building a data-literate workforce ensures teams can interpret AI outputs effectively, driving better decision-making and innovation.

Foster Cross-Functional Collaboration

Effective recommendation systems require collaboration across diverse teams, including data scientists, engineers, marketers, and legal experts. Each group contributes unique perspectives that align technical solutions with business goals and user needs. Without collaboration, systems may excel technically but fail to deliver value or meet compliance standards.

Cross-functional teams reduce blind spots by combining technical expertise with user-centric insights.

Collaborative efforts improve communication, accelerate problem-solving, and foster innovation.

Examples like Spotify and Netflix highlight how integrating AI with domain expertise enhances user engagement and prevents missed evaluation factors.

CTOs should establish a culture of collaboration by encouraging regular cross-departmental meetings and shared accountability. Techniques like customer segmentation and sentiment analysis can further align teams by providing actionable insights into user preferences.

Note: Collaboration ensures that recommendation systems remain adaptable, user-focused, and aligned with organizational objectives.

Implement Adaptive Feedback and Continuous Learning

Adaptive feedback and continuous learning form the backbone of effective personalized recommendation systems. These systems must adjust to user behavior and preferences in real time. A systematic review of adaptive learning research from 2009 to 2018 confirms that adaptive feedback and continuous learning boost engagement and performance. AI-powered feedback helps users by identifying gaps and offering timely suggestions. This approach keeps users interested and supports their unique needs.

Key components of adaptive systems include:

Real-time feedback that responds to user actions

Data-driven insights that guide recommendations

Adaptive learning pathways that change as users interact

Natural language processing for better communication

Predictive analytics to anticipate user needs

These features make recommendation systems more accessible. They support users with different backgrounds, abilities, and languages. AI feedback works best when combined with human expertise. This blend ensures recommendations remain relevant and trustworthy. Teams that use adaptive feedback and continuous learning see higher user satisfaction and better outcomes.

Adaptive systems help organizations stay ahead by learning from every interaction. This ongoing improvement leads to smarter, more effective recommendations.
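The idea of learning from every interaction can be sketched with an epsilon-greedy bandit, one of the simplest online-learning techniques for recommendations. This is a swapped-in illustration, not the method used in the adaptive-learning review mentioned in this section. The item names, epsilon value, and simulated click behavior are hypothetical.

```python
import random

class EpsilonGreedyRecommender:
    """Learns which item to recommend from click feedback, one interaction at a time.

    With probability `epsilon` it explores a random item; otherwise it exploits
    the item with the highest observed click rate. Counts update after every
    interaction, so the policy keeps adapting as preferences shift.
    """

    def __init__(self, items, epsilon=0.1, seed=None):
        self.items = list(items)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.shows = {item: 0 for item in self.items}
        self.clicks = {item: 0 for item in self.items}

    def click_rate(self, item):
        shows = self.shows[item]
        return self.clicks[item] / shows if shows else 0.0

    def recommend(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.items)        # explore
        return max(self.items, key=self.click_rate)   # exploit best-so-far

    def update(self, item, clicked):
        self.shows[item] += 1
        self.clicks[item] += int(clicked)

# Simulated users who always click "guide-b" and never click "guide-a" (hypothetical).
bandit = EpsilonGreedyRecommender(["guide-a", "guide-b"], epsilon=0.1, seed=7)
for _ in range(1000):
    choice = bandit.recommend()
    bandit.update(choice, clicked=(choice == "guide-b"))
print(max(bandit.items, key=bandit.click_rate))
# guide-b
```

Epsilon controls the trade-off: a higher value keeps testing alternatives, which helps when needs change, while a lower value serves the current best item more often.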

Prioritize User-Centric and Business Impact Metrics

Successful recommendation systems focus on both user experience and business results. Spotify, for example, saw a 30% increase in user engagement and more premium subscriptions after launching personalized playlists, showing how user-centric personalization can drive business growth.

A table of industry leaders highlights the impact of this approach:

| Company/Case Study | Key Outcome/Metric | Description |
|---|---|---|
| Netflix | 80% of streaming hours influenced by recommendations | Recommendation engine drives business performance |
| Amazon | Multiple evolving strategies | Systems align with changing business needs |
| European Comic Book Retailer | Personalized newsletters tested on 23,000 customers | Higher engagement through tailored recommendations |
| Spotify | 30% increase in engagement, more premium sign-ups | User-centric playlists boost business outcomes |

Teams should track both user and business metrics to measure success:

User-Centric Metrics:

Task success rate

System Usability Scale (SUS)

Error and abandonment rates

Business Metrics:

Conversion rate

Customer lifetime value (CLV)

Net Promoter Score (NPS)

Churn rate

Strategies for balance:

A/B testing and funnel analysis

Iterative design with user feedback

Cross-functional collaboration

Prioritizing features by value and effort

Focusing on these metrics ensures recommendation systems meet user needs and deliver strong business results.
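Several of these metrics and strategies have standard formulas. The sketch below computes NPS and SUS using their published scoring rules. It reads an A/B conversion test with a two-proportion z-test, which is one common choice among several. The function names and sample numbers are illustrative only.

```python
import math

def net_promoter_score(ratings):
    """NPS from 0-10 ratings: % promoters (9-10) minus % detractors (0-6), range -100..100."""
    if not ratings:
        raise ValueError("need at least one rating")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)

def sus_score(answers):
    """System Usability Scale score (0-100) from one respondent's ten 1-5 answers."""
    if len(answers) != 10:
        raise ValueError("SUS uses exactly 10 items")
    # Odd-numbered items (index 0, 2, ...) are positively worded: contribute answer - 1.
    # Even-numbered items (index 1, 3, ...) are negatively worded: contribute 5 - answer.
    total = sum((a - 1) if i % 2 == 0 else (5 - a) for i, a in enumerate(answers))
    return total * 2.5

def conversion_ab_test(conversions_a, visitors_a, conversions_b, visitors_b):
    """Two-sided two-proportion z-test on conversion rates of variants A and B.

    Assumes independent visitors and samples large enough for the normal approximation.
    Returns (absolute lift of B over A, z statistic, p-value).
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if se == 0:
        raise ValueError("pooled conversion rate of 0 or 1 gives no test")
    z = (rate_b - rate_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return rate_b - rate_a, z, p_value

print(net_promoter_score([10, 10, 9, 5]))   # 50.0
print(sus_score([3] * 10))                  # 50.0
lift, z, p = conversion_ab_test(100, 1000, 130, 1000)
print(f"lift={lift:.3f} z={z:.2f} p={p:.3f}")
```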

Checklist and Guiding Questions for CTOs

Key Questions for Vendors and Internal Teams

A structured set of questions helps technology leaders avoid common pitfalls when selecting or building need-based personalized recommendation systems. These questions guide both vendor conversations and internal evaluations, ensuring alignment with business goals and operational needs.

Core Capabilities and Business Alignment

What business problem does the solution address?

Can the system use proprietary data for deeper insights?

How scalable is the platform as the business grows?

User Experience and Accessibility

Is the interface user-friendly for non-technical staff?

What is the expected learning curve for users?

Is a pilot or demo available for hands-on testing?

Data Management and Ownership

Does the solution connect to current data sources?

How is data stored and protected?

Who owns the model, user input, and output?

Ethical Commitments and Fairness

How does the system prevent bias?

What steps ensure model explainability?

What ethical standards guide development?

Support and Training

What support and training resources are available?

How does the vendor collect and use feedback?

ROI and Cost Transparency

What is the pricing model?

Are there case studies showing success?

Can the vendor estimate ROI for the use case?

Technical Deployment and Integration

What hardware or software is required?

How compatible is the system with current technology?

How is performance monitored after launch?

Internal Skills and Personnel Requirements

What skills are needed for implementation and maintenance?

Are third-party providers recommended for support?

A detailed question list helps CTOs catch more risks and ensures a thorough evaluation process.

Comprehensive Evaluation Checklist

A comprehensive checklist supports a systematic and unbiased evaluation. Established evaluation practice for recommender systems suggests the following steps:

Evaluation Objectives: Define clear goals that match the system’s purpose and stakeholder needs.

Evaluation Design Space: Set guiding principles, choose experiment types (offline, user studies, A/B testing), and decide on evaluation aspects like data quality and bias.

Data Quality and Bias Management: Curate datasets carefully, use sampling or reweighting, and check for rating density and variance.

Evaluation Metrics: Use a range of metrics—accuracy, coverage, utility, robustness, novelty, and confidence.

Baseline Comparisons: Compare the system against strong, well-tuned baselines.

Online Evaluations: Run field experiments or user studies to capture real user behavior and satisfaction.

Using a checklist and guiding questions helps teams avoid distractions from vendor claims and focus on what matters most for business success.
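The offline part of the metrics and baseline steps can start small. The sketch below computes precision@k (an accuracy metric) and catalog coverage, then compares a model against a popularity baseline. The toy data are hypothetical, and a popularity list is only a floor: it is not the strong, well-tuned baseline the checklist calls for.

```python
from collections import Counter

def precision_at_k(recommended, relevant, k):
    """Share of the top-k recommended items that appear in the user's relevant set."""
    return sum(1 for item in recommended[:k] if item in relevant) / k

def catalog_coverage(recommendation_lists, catalog):
    """Share of the catalog that appears in at least one user's recommendations."""
    shown = {item for recs in recommendation_lists for item in recs}
    return len(shown & set(catalog)) / len(catalog)

def popularity_baseline(train_interactions, k):
    """Non-personalized baseline: the k most-interacted items, shown to every user."""
    counts = Counter(item for _user, item in train_interactions)
    return [item for item, _count in counts.most_common(k)]

def mean_precision_at_k(recs_by_user, relevant_by_user, k):
    users = list(relevant_by_user)
    return sum(precision_at_k(recs_by_user[u], relevant_by_user[u], k) for u in users) / len(users)

# Hypothetical train/test split and model output.
train = [("u1", "a"), ("u2", "a"), ("u3", "b"), ("u1", "b"), ("u2", "c")]
held_out = {"u1": {"c"}, "u2": {"d"}}
model_recs = {"u1": ["c", "a"], "u2": ["d", "b"]}
baseline = popularity_baseline(train, k=2)
baseline_recs = {u: baseline for u in held_out}

print(mean_precision_at_k(model_recs, held_out, k=2))     # 0.5
print(mean_precision_at_k(baseline_recs, held_out, k=2))  # 0.0
print(catalog_coverage(model_recs.values(), ["a", "b", "c", "d"]))  # 1.0
```

If a candidate system cannot clearly beat even this baseline on held-out data, vendor claims deserve extra scrutiny before any online test.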

CTOs often overlook key areas when evaluating need-based personalized recommendations:

They struggle with complex security standards and audits, risking compliance gaps.

Automation helps, but human oversight remains essential for evolving requirements.

Focusing only on technical milestones leads to missed innovation and poor market response.

Lack of collaboration with business leaders causes misaligned investments and increased risk.

Netflix’s data-centric model and teacher-focused systems in education show that holistic, user-centric strategies improve outcomes. CTOs who use structured checklists and foster cross-team collaboration drive operational efficiency, trust, and innovation.

FAQ

What is the main difference between need-based and behavior-based recommendations?

Need-based recommendations use user needs, context, or demographics. Behavior-based recommendations rely on past actions, such as clicks or purchases. Need-based systems often provide more relevant suggestions for new users or unique situations.

Why does explainability matter in recommendation systems?

Explainability helps users and teams understand why the system suggests certain items. This builds trust and allows teams to spot errors or bias. Transparent systems also support compliance with laws and industry standards.

How can CTOs ensure data privacy in personalized recommendations?

CTOs should use data encryption, limit data access, and follow privacy laws like GDPR. Regular audits and clear consent processes protect user information. Teams should collect only the data needed for recommendations.

What metrics best measure the success of a recommendation system?

Key metrics include conversion rate, customer lifetime value, and user satisfaction scores. Teams should also track error rates and system usability. A balanced approach ensures both business growth and positive user experience.
