Create Catalog Connection

A Catalog Connection stores the connection information for a third-party catalog. Its core function is to provide access authentication for External Catalogs, so that the Lakehouse platform can securely access and manage data resources in storage services.

Supported Catalogs

- Hive Catalog: By connecting to the Hive Metastore, or metadata services compatible with Hive Metastore, Lakehouse can automatically retrieve Hive's database and table information and perform data queries.

- Databricks Unity Catalog: Unity Catalog is Databricks' unified governance solution for data and AI assets. In Unity Catalog, all metadata is registered in the metastore. Database objects in any Unity Catalog metastore are organized into three levels, so tables, views, volumes, models, and functions are referenced through a three-level namespace (catalog.schema.object). By connecting to Databricks' Unity Catalog, Lakehouse can automatically retrieve Databricks' database and table information and perform data queries.

Create Hive Catalog Syntax

Parameter Description

- connection_name: The name of the connection, which must be unique.

- type: The type of connection; here it is `hms` (Hive Metastore Service).

- hive_metastore_uris: The service address of the Hive Metastore, in the format `host:port`. The port is usually 9083.

- storage_connection: The name of the created storage connection, used to access object storage or HDFS services. For details, refer to CREATE STORAGE CONNECTION.
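As a minimal sketch, the parameters above might be combined as follows. The `CREATE CATALOG CONNECTION` keyword form and the `key = 'value'` layout are assumptions inferred from the parameter list, and `my_hms_conn`, `hms-host.example.internal`, and `my_storage_conn` are hypothetical placeholders; check the exact form against the platform's SQL reference.

```sql
-- Sketch only: the statement form is assumed from the parameter list above.
-- my_hms_conn, hms-host.example.internal, and my_storage_conn are placeholders.
CREATE CATALOG CONNECTION my_hms_conn
    TYPE hms                                                -- Hive Metastore Service
    hive_metastore_uris = 'hms-host.example.internal:9083'  -- host:port; 9083 is the usual port
    storage_connection = 'my_storage_conn';                 -- name of an existing storage connection
```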

Steps to Create Hive Catalog Connection

- Create Storage Connection: First, create a storage connection that points to the object storage service where the Hive data is stored. Currently, only the object storage services OSS, COS, and S3 are supported.

- Create Catalog Connection: Use the storage connection information and Hive Metastore address to create a Catalog Connection.

Create Storage Connection

Create Catalog Connection

Case Studies

Case Study 1: Hive ON OSS

- Create a storage connection

- Create Catalog Connection: Ensure that the network between the server hosting HMS and Lakehouse is connected. For specific connection methods, refer to Creating Alibaba Cloud Endpoint Service.

Case Study 2: Hive ON COS

- Create Storage Connection

Parameters:

* TYPE: The object storage type. For Tencent Cloud, use COS (case-insensitive).

* ACCESS_KEY / SECRET_KEY: The access keys for Tencent Cloud. Refer to: Access Keys.

* REGION: The region where the Tencent Cloud Object Storage (COS) data center is located. When Singdata Lakehouse accesses Tencent Cloud COS within the same region, the COS service automatically routes the traffic over the internal network. For specific values, refer to the Tencent Cloud documentation: Regions and Access Domains.
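The parameters above could be combined along these lines. This is a sketch that assumes a `CREATE STORAGE CONNECTION` statement with `KEY = 'value'` properties; `my_cos_conn` and the key values are hypothetical placeholders, `ap-shanghai` is just one example of a Tencent Cloud region, and the platform may require further parameters that are not listed in this section.

```sql
-- Sketch only: statement form assumed from the parameters described above.
-- my_cos_conn and the key values are placeholders; replace them with your own.
CREATE STORAGE CONNECTION my_cos_conn
    TYPE COS                             -- Tencent Cloud object storage (case-insensitive)
    ACCESS_KEY = '<your-access-key>'
    SECRET_KEY = '<your-secret-key>'
    REGION = 'ap-shanghai';              -- region of the COS data center
```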

- Create Catalog Connection: Ensure that the network between the server hosting HMS and Lakehouse is connected. For specific connection methods, refer to Creating Tencent Cloud PrivateLink Service.

Case Study 3: Hive ON S3

- Create a storage connection

Parameters:

- TYPE: The object storage type. For AWS, use S3 (case-insensitive).

- ACCESS_KEY / SECRET_KEY: The access key for AWS. Refer to: Access Keys for how to obtain it.

- ENDPOINT: The service address for S3. AWS China is divided into the Beijing and Ningxia regions. The S3 service address is `s3.cn-north-1.amazonaws.com.cn` for the Beijing region and `s3.cn-northwest-1.amazonaws.com.cn` for the Ningxia region. Refer to: China Region Endpoints, and look up the Amazon S3 endpoints for the Beijing and Ningxia regions.

- REGION: AWS China is divided into the Beijing and Ningxia regions. The region values are `cn-north-1` for the Beijing region and `cn-northwest-1` for the Ningxia region. Refer to: China Region Endpoints.
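For the Beijing region, the parameters above might be put together as in the sketch below. The `CREATE STORAGE CONNECTION` statement form is an assumption inferred from the parameter list, and `my_s3_conn` and the key values are hypothetical placeholders; only the endpoint and region values are taken from the text above.

```sql
-- Sketch only: statement form assumed from the parameters described above.
-- my_s3_conn and the key values are placeholders; replace them with your own.
CREATE STORAGE CONNECTION my_s3_conn
    TYPE S3                                        -- AWS object storage (case-insensitive)
    ACCESS_KEY = '<your-access-key>'
    SECRET_KEY = '<your-secret-key>'
    ENDPOINT = 's3.cn-north-1.amazonaws.com.cn'    -- Beijing region S3 endpoint
    REGION = 'cn-north-1';                         -- Beijing region
```

For the Ningxia region, the endpoint would be `s3.cn-northwest-1.amazonaws.com.cn` and the region `cn-northwest-1`.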

- Create Catalog Connection

Create Databricks Unity Catalog

Create Databricks Unity Catalog Connection

Overview

This document details how to create a Databricks Unity Catalog connection using SQL statements. Through this connection, users can integrate external systems with the Databricks Unity Catalog to achieve data management and sharing. This document covers syntax, parameter descriptions, and configuration requirements.

Syntax

Parameter Description

- connection_name: The name of the connection, used to identify the Databricks Unity Catalog connection. The name must be unique and follow naming conventions.

- TYPE databricks: Specifies the connection type as Databricks Unity Catalog.

- HOST: The URL address of the Databricks workspace. The usual format is `https://<workspace-url>`. Example: `https://dbc-12345678-9abc.cloud.databricks.com`

- CLIENT_ID: The client ID used for OAuth 2.0 machine-to-machine (M2M) authentication. Refer to the Databricks OAuth M2M Authentication Documentation to create an OAuth 2.0 application and obtain the `CLIENT_ID`.

- CLIENT_SECRET: The client secret used for OAuth 2.0 machine-to-machine (M2M) authentication. Refer to the Databricks OAuth M2M Authentication Documentation to create an OAuth 2.0 application and obtain the `CLIENT_SECRET`.

- ACCESS_REGION: The region where the Databricks workspace is located, such as `us-west-2` or `east-us`.
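A minimal sketch of how these parameters might fit together is shown below. The `CREATE CATALOG CONNECTION` keyword form and the `KEY = 'value'` layout are assumptions inferred from the parameter list; `my_databricks_conn` and the credential values are hypothetical placeholders, while the host and region reuse the example values given above.

```sql
-- Sketch only: statement form assumed from the parameters described above.
-- my_databricks_conn and the credentials are placeholders; replace them with your own.
CREATE CATALOG CONNECTION my_databricks_conn
    TYPE databricks                                          -- Databricks Unity Catalog
    HOST = 'https://dbc-12345678-9abc.cloud.databricks.com'  -- workspace URL
    CLIENT_ID = '<your-client-id>'                           -- OAuth 2.0 M2M client ID
    CLIENT_SECRET = '<your-client-secret>'                   -- OAuth 2.0 M2M client secret
    ACCESS_REGION = 'us-west-2';                             -- region of the workspace
```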

Example

Step 1: Databricks Preparation

- Create a service principal. Refer to the Databricks Documentation to obtain the principal and its client id/client secret.

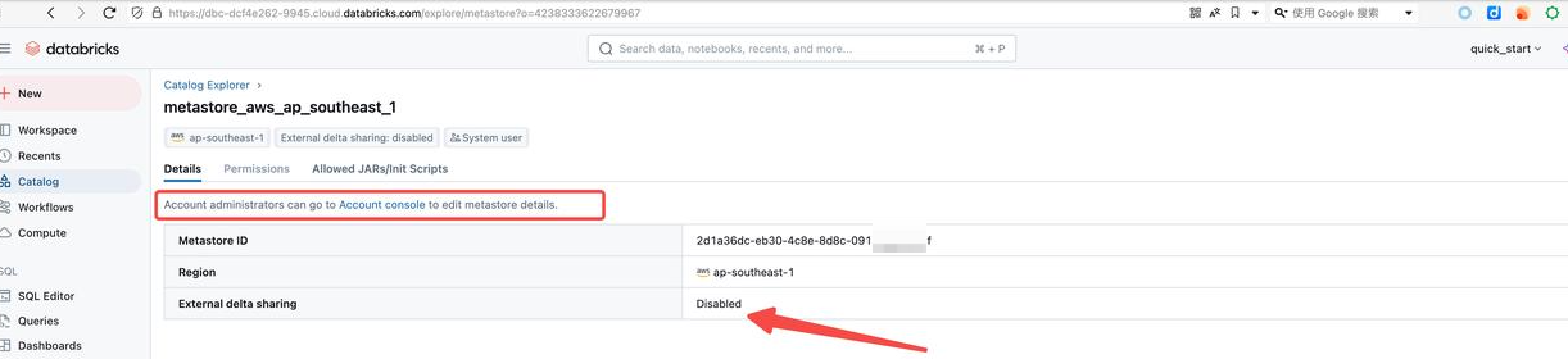

- Enable external data access in Metastore.

- Authorize the service principal.

Step 2: Lakehouse

The following is a complete example showing how to create a Databricks Unity Catalog connection:

Frequently Asked Questions

Q1: How to verify if the connection is successful?

- After creating the connection, you can verify the connection status by querying the Schema or table data under the Catalog.

- Example:

Q2: Possible reasons for connection failure?

- The `HOST` address is incorrect or inaccessible.

- The `CLIENT_ID` or `CLIENT_SECRET` is invalid or lacks sufficient permissions.

- The `ACCESS_REGION` does not match the Databricks workspace region.

Q3: How to update connection configuration?

- Delete the existing connection and recreate it: