Complete Guide to Importing Data into Singdata Lakehouse

Data Ingestion: Loading Local Files via Singdata Lakehouse Studio

Overview

You will use Singdata Lakehouse Studio to load local data into Singdata Lakehouse tables through a web interface, without any coding.

Use Cases

This method suits directly uploading smaller local files (no larger than 2 GB) in formats such as CSV, TXT, Parquet, AVRO, and ORC into Singdata Lakehouse tables. It requires no programming, which makes it the simplest loading method.
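Before opening Studio, it can help to confirm that a file fits these constraints. The following is a minimal sketch, not part of Singdata Lakehouse itself: the function name `check_upload_candidate`, the mapping of formats to file extensions, and the reading of "2GB" as 2 × 1024³ bytes are all assumptions made for illustration.

```python
import os

# Assumptions for illustration: the 2 GB cap is read as 2 * 1024**3 bytes,
# and each listed format is matched by its usual file extension.
MAX_UPLOAD_BYTES = 2 * 1024**3
SUPPORTED_EXTENSIONS = {".csv", ".txt", ".parquet", ".avro", ".orc"}


def check_upload_candidate(path):
    """Return a list of problems; an empty list means the file looks uploadable."""
    problems = []

    # Compare the extension case-insensitively against the listed formats.
    ext = os.path.splitext(path)[1].lower()
    if ext not in SUPPORTED_EXTENSIONS:
        problems.append(f"unsupported extension: {ext or '(none)'}")

    # Compare the on-disk size against the assumed upload limit.
    size = os.path.getsize(path)
    if size > MAX_UPLOAD_BYTES:
        problems.append(f"file is {size} bytes, above the {MAX_UPLOAD_BYTES}-byte limit")

    return problems
```

Calling `check_upload_candidate("path/to/your_file.csv")` (a placeholder path) returns an empty list when the file passes both checks; otherwise each string in the list describes one problem to fix before uploading.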

Implementation Steps

Upload File

Navigate to Data -> Data Directory and click "Upload Data" to import local files (the CSV files generated in the test data generation section) into a table.

Import Data

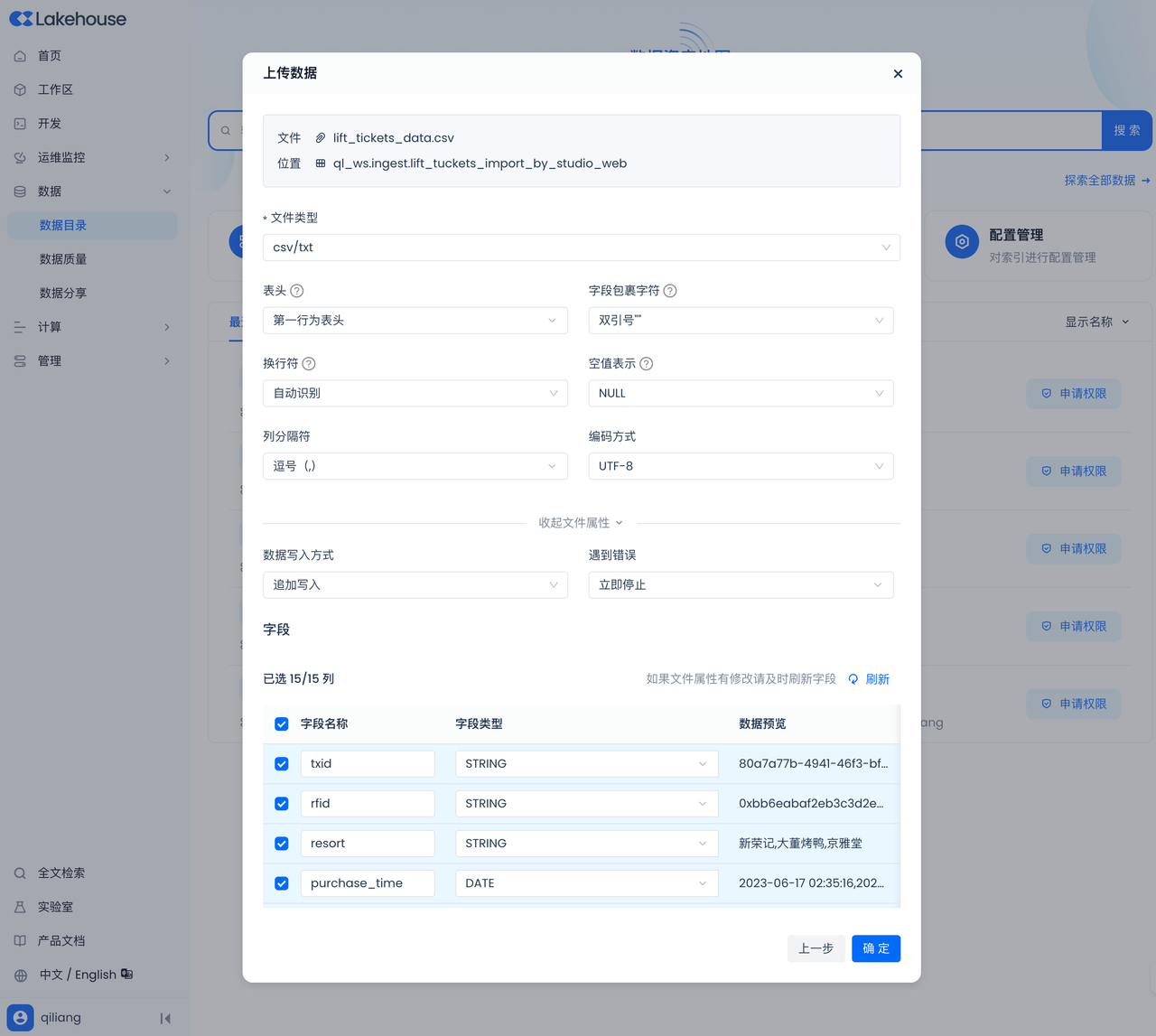

After clicking "Upload Data", make the following selections:

- File: the test data generated in the test data generation section

- Schema: the schema created in the Singdata Lakehouse setup section

- Table: select "Create New Table", table name: lift_tuckets_import_by_studio_web

- Compute cluster: the virtual compute cluster created in the Singdata Lakehouse setup section

After clicking "Next", review the settings that Studio detected automatically for the uploaded file. If the data preview looks as expected, the automatic settings are correct. Click "Confirm" to complete the data upload.
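To know what the preview should look like, you can inspect the CSV file locally first. The sketch below uses only the Python standard library and is independent of Studio; the function name `preview_csv`, the candidate delimiter set, and the UTF-8 default encoding are assumptions for illustration, and the guessed delimiter is a heuristic rather than a guarantee of what Studio will detect.

```python
import csv
from itertools import islice


def preview_csv(path, rows=5, encoding="utf-8"):
    """Guess the delimiter and return (delimiter, first rows) for a local CSV file."""
    with open(path, newline="", encoding=encoding) as f:
        # Sniff the dialect from the first 64 KiB, limited to common delimiters.
        sample = f.read(64 * 1024)
        dialect = csv.Sniffer().sniff(sample, delimiters=",;\t|")

        # Rewind and read the first few rows using the guessed dialect.
        f.seek(0)
        reader = csv.reader(f, dialect)
        return dialect.delimiter, list(islice(reader, rows))
```

Comparing the returned delimiter and rows with the delimiter and preview shown in Studio is a quick way to spot a misdetected separator or header row before confirming the upload.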

Result Verification

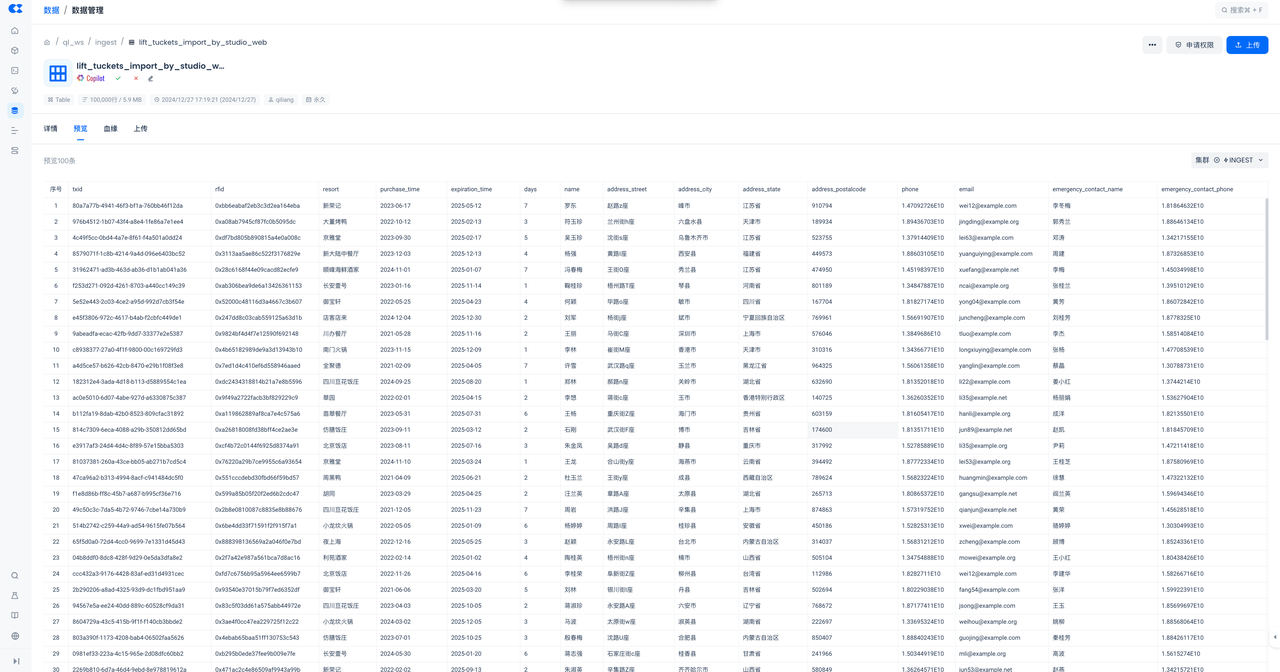

Go to "Data" to check the import status and data:

The import result should show "100,000" rows written, which matches the number of rows generated in the "Test Data Generation" step.
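If you want to confirm the expected row count independently, you can count the rows in the local source file and compare the result with the number Studio reports. This is a minimal sketch using the standard library; the function name `count_data_rows` is hypothetical, and it assumes the file is a UTF-8 CSV whose first row is a header (pass `has_header=False` if it is not).

```python
import csv


def count_data_rows(path, has_header=True, encoding="utf-8"):
    """Count non-empty CSV records, optionally excluding one header row."""
    with open(path, newline="", encoding=encoding) as f:
        # csv.reader handles quoted fields that contain line breaks,
        # so this counts records rather than physical lines.
        total = sum(1 for row in csv.reader(f) if row)
    return max(total - 1, 0) if has_header else total
```

A mismatch between this count and the rows written usually points to a header or delimiter setting worth rechecking in the upload dialog.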

You can also click "Preview Data" to confirm that the data was loaded successfully:

At this point, you have loaded local files into the table via Singdata Lakehouse Studio.

Next Steps

- Continue loading data into the same table or other tables. By clicking "Upload" on the previous page, you can upload more data into the same table.

- Use Singdata Lakehouse's built-in DataGPT for visual data exploration and data analysis through Q&A.

- Develop SQL tasks in the IDE of Singdata Lakehouse Studio to further clean, transform, and analyze data.