Create Amazon Cloud Storage Connection

The goal of this step is to allow the Lakehouse cluster to access Amazon S3, the object storage service of Amazon Web Services (AWS). Two authentication methods provided by AWS Identity and Access Management (IAM) can be used: access keys and role-based authorization.

Based on Access Keys

Parameters:

- TYPE: The object storage type. For AWS, fill in `S3` (case insensitive).

- ACCESS_KEY / SECRET_KEY: The AWS access key pair. Refer to: Access Keys for how to obtain it.

- ENDPOINT: The service address for S3. AWS China is divided into the Beijing and Ningxia regions. The S3 service address is `s3.cn-north-1.amazonaws.com.cn` for the Beijing region and `s3.cn-northwest-1.amazonaws.com.cn` for the Ningxia region. Refer to: China Region Endpoints and look up the Amazon S3 entry for each region.

- REGION: The region code. AWS China is divided into the Beijing and Ningxia regions: `cn-north-1` for Beijing and `cn-northwest-1` for Ningxia. Refer to: China Region Endpoints.
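As a rough illustration of how these parameters fit together, the Python sketch below looks up the endpoint for a region and renders a connection statement. The statement layout (`CREATE STORAGE CONNECTION <name> TYPE S3 ...`) is an assumption pieced together from the parameter names above, so check it against the Lakehouse SQL reference before use. The function name `render_access_key_connection` and the connection name `my_s3_conn` are hypothetical.

```python
# Region code -> S3 endpoint for the two AWS China regions listed above.
S3_ENDPOINTS = {
    "cn-north-1": "s3.cn-north-1.amazonaws.com.cn",          # Beijing
    "cn-northwest-1": "s3.cn-northwest-1.amazonaws.com.cn",  # Ningxia
}


def render_access_key_connection(name, region, access_key, secret_key):
    """Render a connection statement; the exact SQL syntax is an assumption."""
    if region not in S3_ENDPOINTS:
        raise ValueError(f"unsupported region: {region}")
    return (
        f"CREATE STORAGE CONNECTION {name}\n"
        f"    TYPE S3\n"
        f"    ACCESS_KEY = '{access_key}'\n"
        f"    SECRET_KEY = '{secret_key}'\n"
        f"    ENDPOINT = '{S3_ENDPOINTS[region]}'\n"
        f"    REGION = '{region}';"
    )


if __name__ == "__main__":
    # Placeholder credentials; never hard-code real keys.
    print(render_access_key_connection(
        "my_s3_conn", "cn-north-1", "<access_key>", "<secret_key>"))
```

Deriving ENDPOINT from REGION in one place keeps the two values from drifting apart, which is an easy mistake when they are typed separately.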

Role-Based Authorization

You need to create a permission policy and a role in the AWS IAM of the account that owns the target S3 bucket. The permission policy defines the rules for accessing the S3 data and is attached to the created role. Singdata Lakehouse reads and writes data in S3 by assuming this role.

STEP1: Create a Permission Policy (LakehouseAccess) on AWS:

- Log in to the AWS cloud platform and enter the Identity and Access Management (IAM) product console

- In the IAM page's left navigation bar, go to Account Settings. In the Endpoints list of the Security Token Service (STS) section, find the region where your Singdata Lakehouse instance is located. If its STS status is not enabled, enable it.

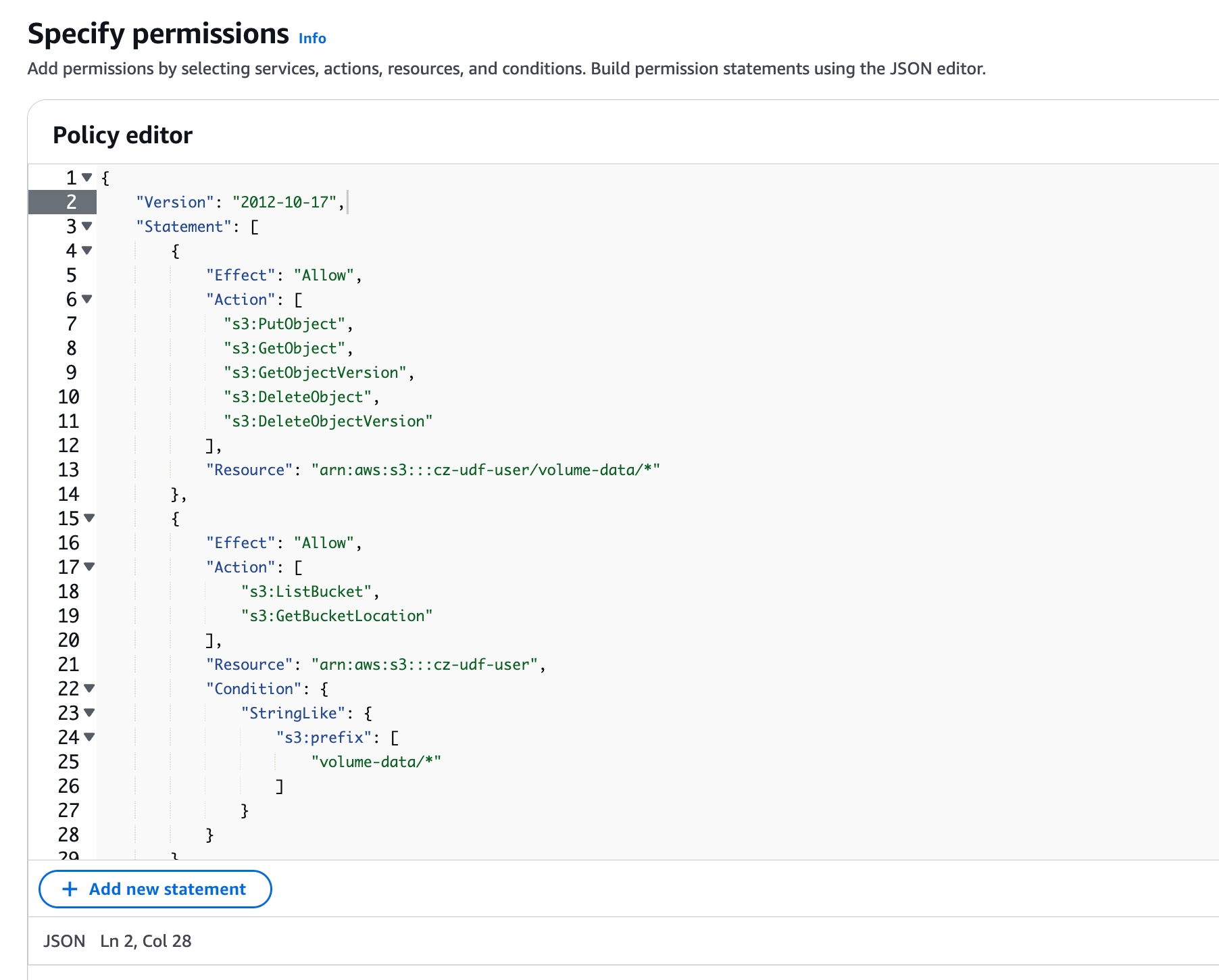

- In the IAM page's left navigation bar, go to Policies, in the Policies interface, select Create Policy, and choose the JSON method in the policy editor

- Add the policy that allows Singdata Lakehouse to access the S3 bucket and directory. In the sample policy, replace `<bucket>` and `<prefix>` with the actual bucket name and path prefix, e.g. bucket `cz-udf-user` and prefix `volume-data`

- Select Next, enter the policy name (e.g., `cz-udf-user-rw-policy`) and description (optional)

- Click Create Policy to complete the policy creation
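The sample policy itself is not reproduced here, so the sketch below generates a typical read/write policy document for a bucket and prefix. The action list is an assumption based on common S3 read/write policies, not the exact set Singdata Lakehouse requires. The `aws-cn` ARN partition applies to the Beijing and Ningxia regions. The function name `build_s3_access_policy` is hypothetical.

```python
import json


def build_s3_access_policy(bucket, prefix, partition="aws-cn"):
    """Build an IAM policy document granting read/write under bucket/prefix.

    The actions below are an assumed minimal set; adjust to your requirements.
    """
    prefix = prefix.strip("/")
    bucket_arn = f"arn:{partition}:s3:::{bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Object-level access, limited to keys under the prefix.
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"],
                "Resource": f"{bucket_arn}/{prefix}/*",
            },
            {
                # Listing is a bucket-level action, narrowed by s3:prefix.
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": bucket_arn,
                "Condition": {"StringLike": {"s3:prefix": [f"{prefix}/*"]}},
            },
        ],
    }


if __name__ == "__main__":
    # Paste the printed JSON into the policy editor's JSON tab.
    print(json.dumps(build_s3_access_policy("cz-udf-user", "volume-data"), indent=2))
```

Object actions and `s3:ListBucket` are split into two statements because they apply to different resource types: object ARNs for the former, the bucket ARN for the latter.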

STEP2: Create Role on AWS Side:

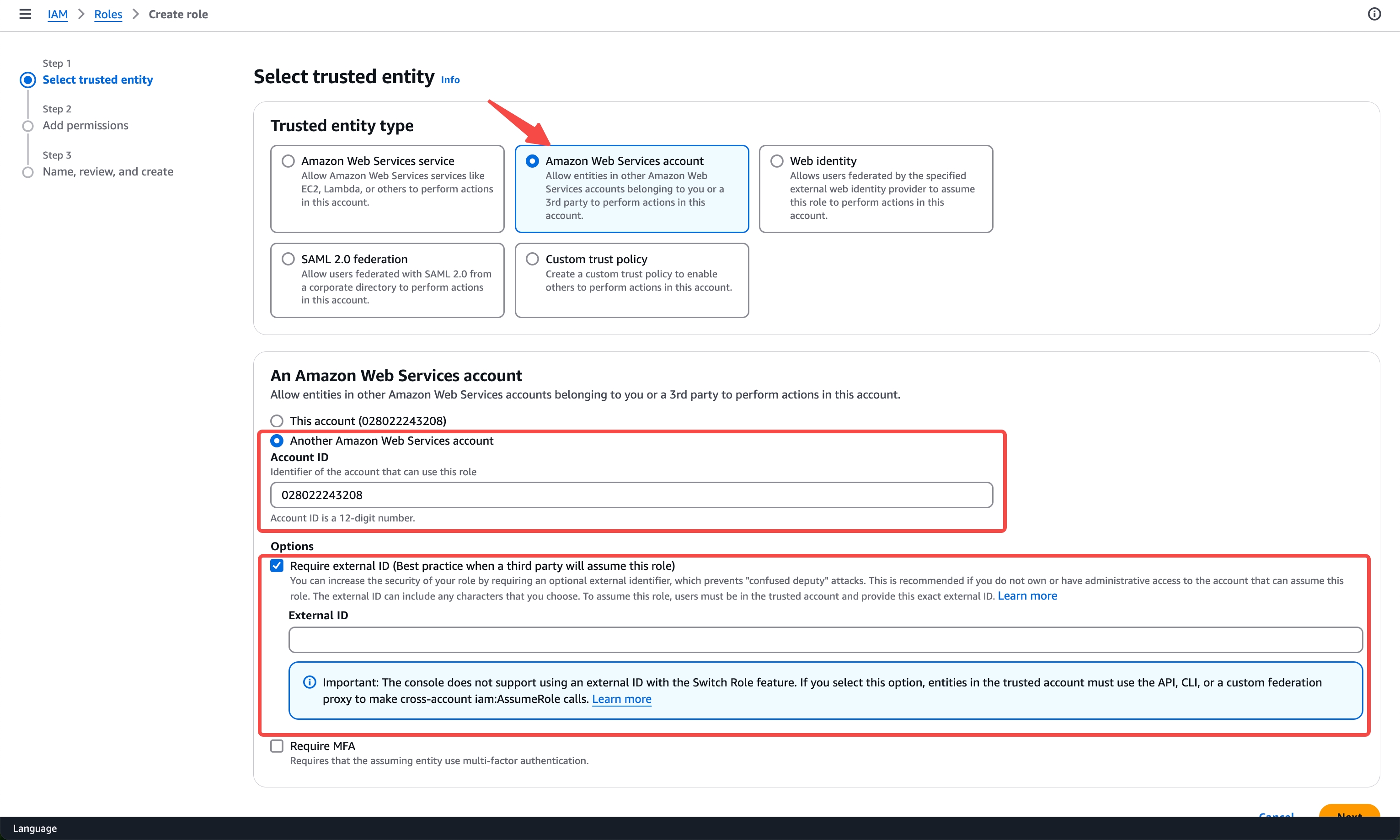

- Log in to the AWS cloud platform and go to the Identity and Access Management (IAM) product console

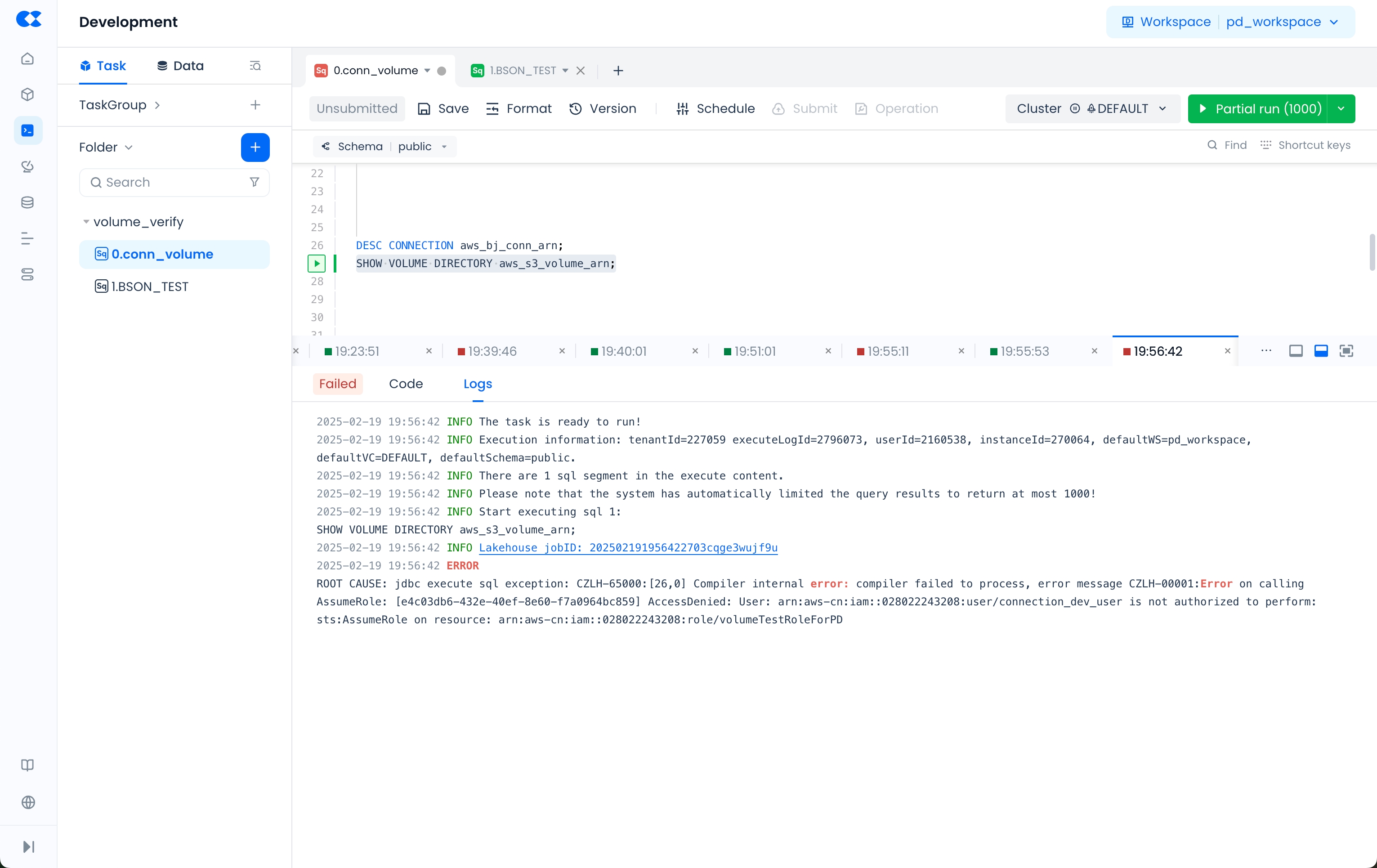

- In the IAM page, navigate to Roles -> Create Role -> AWS Account, select Another AWS Account, and enter `028022243208` in the Account ID field

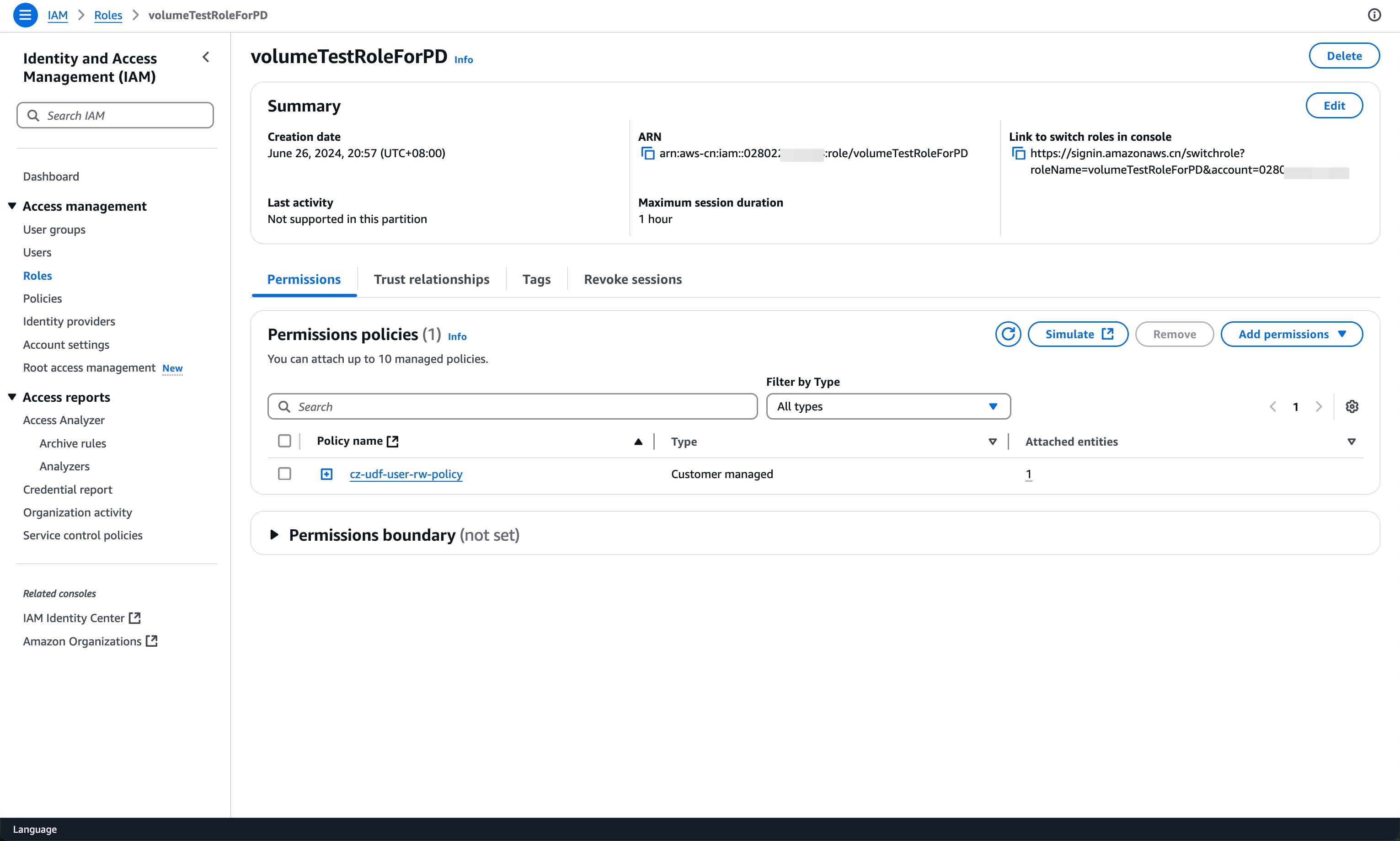

- Select Next, on the Add permissions page, choose the policy created in STEP1 (`cz-udf-user-rw-policy`), then select Next

- Fill in the Role name (e.g., `volumeTestRoleForPD`) and description, then click Create Role to complete the role creation

- In the role details page, copy the Role ARN value; it is needed to create the STORAGE CONNECTION

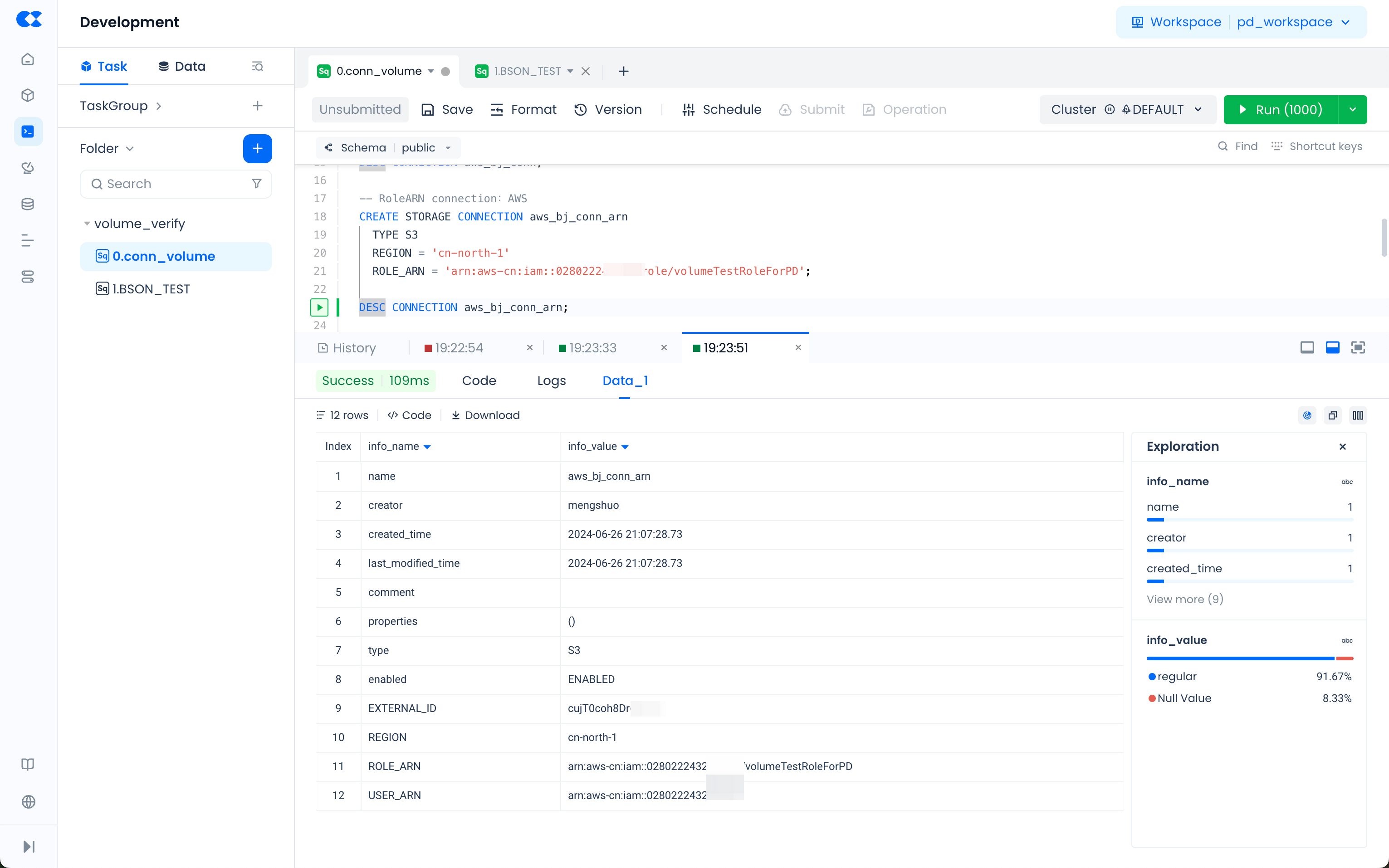

STEP3: Create STORAGE CONNECTION on Singdata Lakehouse Side:

- Execute the following commands in Studio or Lakehouse JDBC client:

- While creating the storage connection, Lakehouse generates an EXTERNAL ID. You can configure this EXTERNAL ID in the Trust Policy of the AWS IAM role (`volumeTestRoleForPD`) created in STEP2 for additional access control:

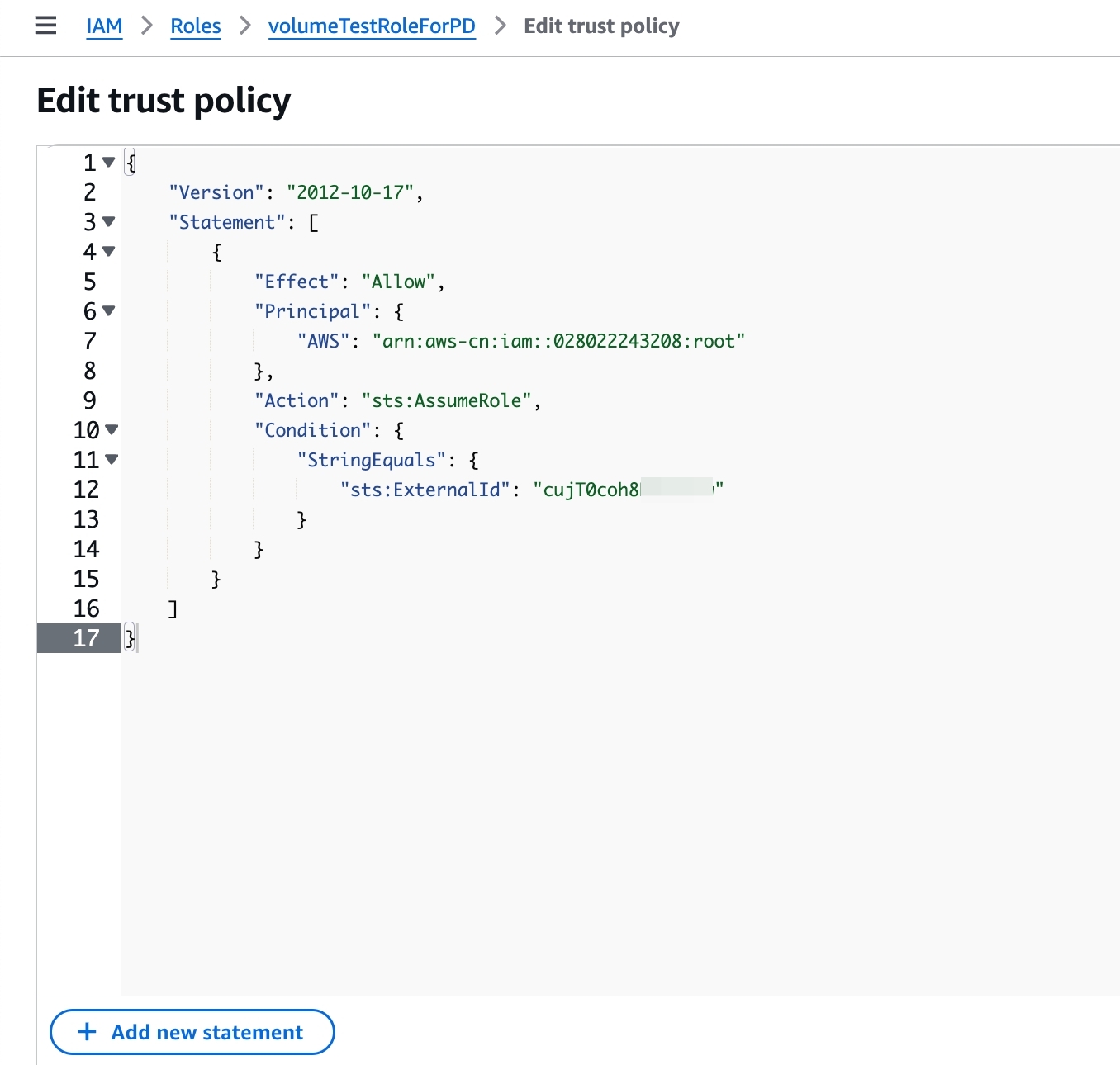

- In the AWS IAM console, navigate to Roles in the left sidebar, find the role created in STEP2, and go to the role details page. In Trust relationships, replace the `sts:ExternalId` value `000000` with the `EXTERNAL_ID` from the DESC result. Click Update to complete the role policy update.

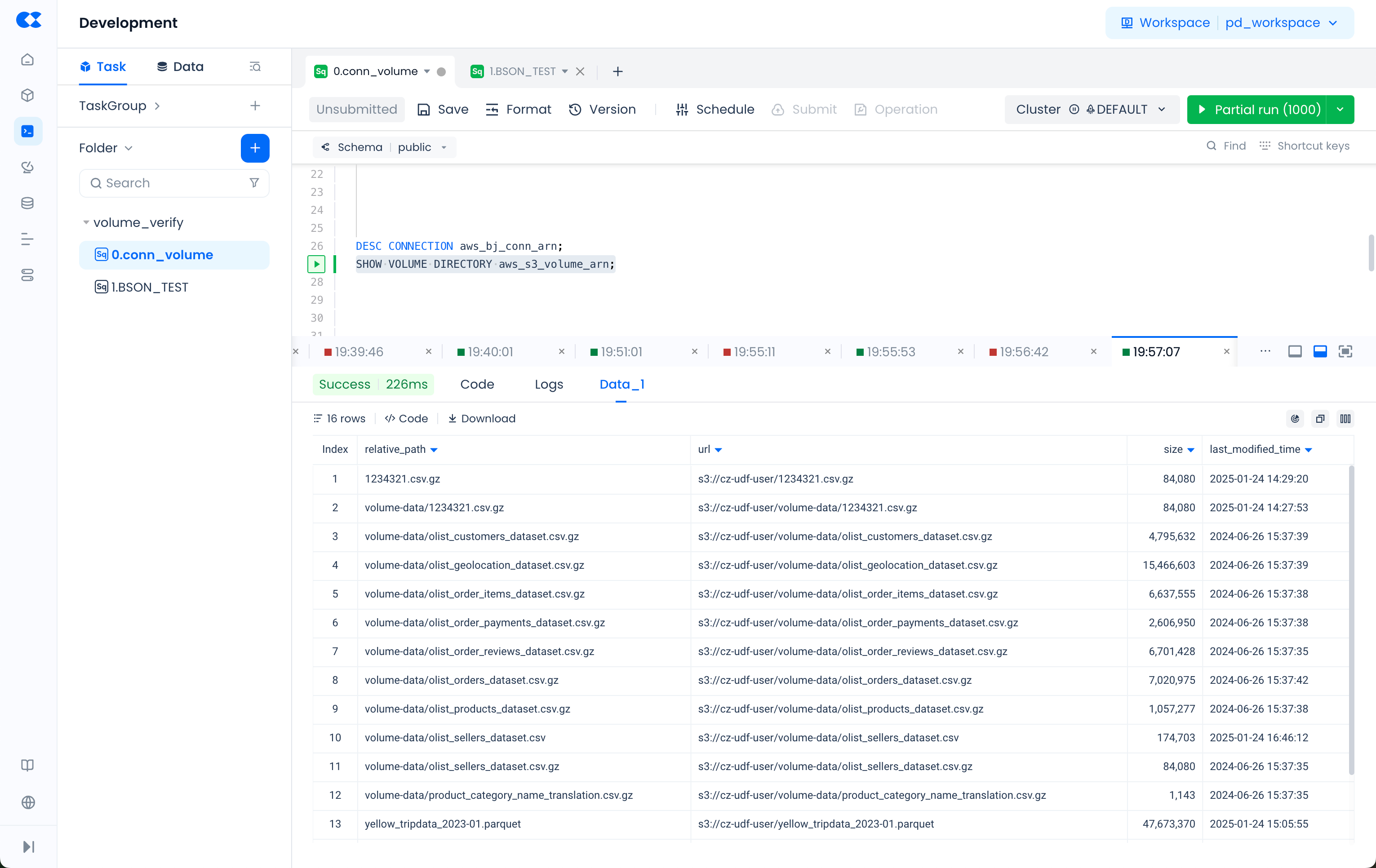
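For reference, a trust policy that restricts role assumption to the Lakehouse account and a specific external ID generally has the shape produced by the sketch below. It uses the standard IAM `sts:AssumeRole` action and `sts:ExternalId` condition key; the account ID is the one entered in STEP2. The `aws-cn` partition is assumed for the China regions, and the function name `build_trust_policy` is hypothetical.

```python
import json


def build_trust_policy(external_id, account_id="028022243208", partition="aws-cn"):
    """Build a role trust policy that requires a matching sts:ExternalId."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The account that is allowed to assume the role.
                "Principal": {"AWS": f"arn:{partition}:iam::{account_id}:root"},
                "Action": "sts:AssumeRole",
                # Only requests carrying this external ID are accepted.
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


if __name__ == "__main__":
    # Replace the placeholder with the EXTERNAL_ID from the DESC result.
    print(json.dumps(build_trust_policy("<EXTERNAL_ID>"), indent=2))
```

The external ID condition means that even a caller from the trusted account cannot assume the role unless it presents the ID issued for this connection.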

- After the configuration, the VOLUME object created based on this CONNECTION will be able to access the files in S3 smoothly: