Using a Hugging Face Image Recognition Model to Process Image Data

1. Applicable Scenarios:

This article creates a Remote Function from an Alibaba Cloud container image. This approach applies in the following cases:

- If the image parsing program (Python plus its dependency packages) is larger than 500 MB, create the function from a container image as described in this article. (If the function's program package is smaller than 500 MB, it can instead be uploaded directly to object storage and the function created automatically.)

- Regardless of program size, if the function needs GPU resources from Alibaba Cloud Function Compute, it must be created from a container image.

2. Step-by-Step Walkthrough:

2.1 Preparation:

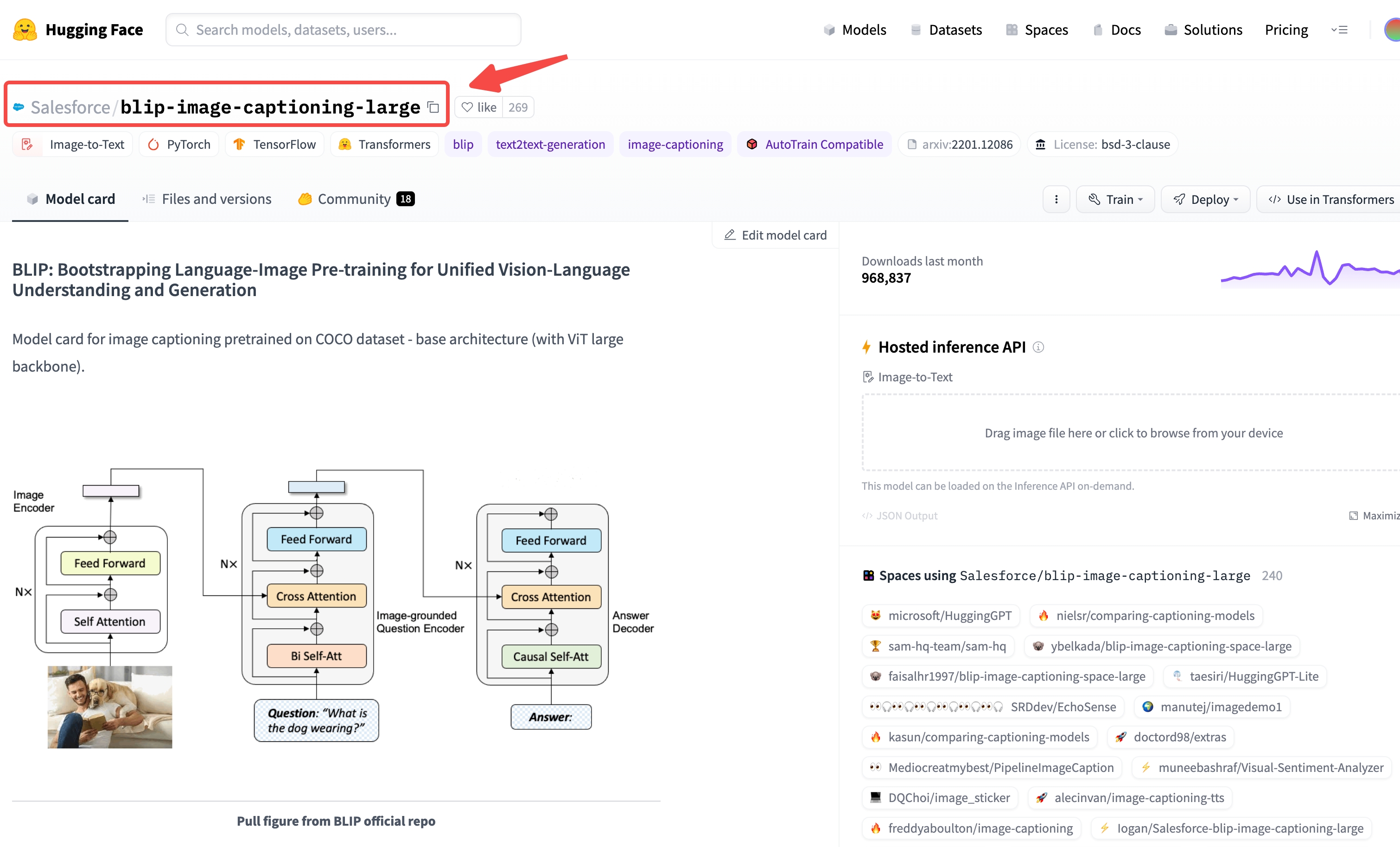

- Scenario: use an offline Hugging Face image-to-text model to describe the content of images

- Model: a Hugging Face image recognition model (please refer to the model link)

- Code: (see appendix)

2.2 Download Model and Dependency Libraries:

(Running these steps on an x86_64 Linux host is recommended.)

2.2.1 Download Model

- Install the huggingface_hub model download tool:


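Download the model files into the model directory by running the following script with Python or IPython:

- repo_id: the model name; refer to the model website

- local_dir: the local folder the model is downloaded into

- local_dir_use_symlinks: whether the files in local_dir are symlinks into the local Hugging Face cache instead of real copies; set it to False so the folder holds real files that can be copied into the container image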
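The script itself is not preserved here. A minimal sketch of such a download script, assuming huggingface_hub is installed (for example with `pip install huggingface_hub`) and using its `snapshot_download` function, might look like this. The helper name `download_model` is hypothetical, and the repo id must be taken from the model page:

```python
from pathlib import Path

# Destination folder, created next to lib_ and hgf_image2text.py.
MODEL_DIR = Path("model")


def download_model(repo_id: str, local_dir: Path = MODEL_DIR) -> Path:
    """Download every file of a Hugging Face model repo into local_dir.

    download_model is a hypothetical helper name; repo_id must be the model
    name shown on the model's Hugging Face page.
    """
    # Imported lazily so this module can be loaded before huggingface_hub
    # is installed.
    from huggingface_hub import snapshot_download

    path = snapshot_download(
        repo_id=repo_id,
        local_dir=str(local_dir),
        # Write real files instead of symlinks into the Hugging Face cache,
        # so the folder can be copied into the container image as-is.
        local_dir_use_symlinks=False,
    )
    return Path(path)
```

Call it as `download_model("<repo_id>")` with the model name from the model page. Recent huggingface_hub releases deprecate `local_dir_use_symlinks` and always write real files when `local_dir` is set, so with those versions the argument can simply be dropped.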

2.2.2 Download Dependencies:

Download the dependencies into the lib_ directory. The packages must match a specific Python version, so using Docker is recommended.

Create a local lib_ folder, mount it into the container at /root/lib_, and install the dependencies into it.

Run the following in the Docker container:
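The Docker command itself is not preserved. As a sketch, the Python helper below assembles and runs a `docker run` command that mounts the local lib_ folder at /root/lib_ and installs packages there with `pip install --target`. Several details are assumptions rather than facts from this article: reusing the manylinux image named in section 2.4, the interpreter path /opt/python/cp310-cp310/bin/python (manylinux images ship several CPython versions under /opt/python; pick the one your runtime needs), and the package list.

```python
import subprocess
from pathlib import Path
from typing import List, Sequence

# Assumption: the same manylinux image used for testing in section 2.4.
MANYLINUX_IMAGE = "quay.io/pypa/manylinux2014_x86_64:2022-10-25-fbea779"
# Assumption: CPython 3.10 inside the manylinux image; choose the version
# that matches the Python the function will run with.
PYTHON_BIN = "/opt/python/cp310-cp310/bin/python"


def pip_target_cmd(lib_dir: Path, packages: Sequence[str],
                   python_bin: str = PYTHON_BIN,
                   image: str = MANYLINUX_IMAGE) -> List[str]:
    """Build a docker command that installs packages into lib_dir."""
    return [
        "docker", "run", "--rm",
        # Mount the local lib_ folder at /root/lib_ inside the container.
        "-v", f"{lib_dir.resolve()}:/root/lib_",
        image,
        python_bin, "-m", "pip", "install",
        # --target writes the packages into the mounted folder instead of
        # the container's own site-packages.
        "--target", "/root/lib_",
        *packages,
    ]


def install_dependencies(lib_dir: Path, packages: Sequence[str]) -> None:
    lib_dir.mkdir(exist_ok=True)  # create the local lib_ folder if needed
    subprocess.run(pip_target_cmd(lib_dir, packages), check=True)
```

For example, `install_dependencies(Path("lib_"), ["transformers", "torch", "pillow"])`. That package list is only a guess at what an image-to-text model needs; install whatever the appendix code actually imports.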

2.3 Writing Code:

Create a code file, for example hgf_image2text.py, in the same directory as model and lib_. The code is listed in the appendix.
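The appendix code is authoritative. For orientation only, here is a minimal sketch of the core logic such a file might contain, assuming the model in ./model works with the transformers `pipeline("image-to-text")` task and that the dependencies were installed into lib_ with `pip install --target`. The function names are hypothetical, and the glue through which the Singdata bootstrap calls the code is not shown because it is not described here.

```python
import sys
from pathlib import Path


def add_vendored_libs(base_dir: Path) -> bool:
    """Put base_dir/lib_ at the front of sys.path.

    Packages installed with `pip install --target lib_` are only importable
    once their folder is on sys.path.
    """
    lib_dir = base_dir / "lib_"
    if not lib_dir.is_dir():
        return False
    if str(lib_dir) not in sys.path:
        sys.path.insert(0, str(lib_dir))
    return True


def load_captioner(base_dir: Path):
    """Load the offline model from base_dir/model (assumed image-to-text)."""
    add_vendored_libs(base_dir)
    # Imported after the sys.path change so it resolves from lib_.
    from transformers import pipeline
    return pipeline("image-to-text", model=str(base_dir / "model"))


def image_to_text(captioner, image_url: str) -> str:
    """Return the caption for one image URL.

    The image-to-text pipeline returns a list like [{"generated_text": ...}].
    """
    outputs = captioner(image_url)
    return outputs[0]["generated_text"]
```

Inside the script, `load_captioner(Path(__file__).resolve().parent)` would load the model once, and `image_to_text(captioner, url)` would then caption each image. Loading once and reusing the pipeline avoids paying the model load time on every request.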

2.4 Testing Code:

Run the test in the Docker image quay.io/pypa/manylinux2014_x86_64:2022-10-25-fbea779.

Run the following in the Docker container:
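The test command is not preserved. One way to reproduce this step, sketched under assumptions: mount the project directory (model, lib_, hgf_image2text.py) into the manylinux container and run the script with the container's Python. The mount point /root/work, the interpreter path, and the premise that running hgf_image2text.py directly performs a self-test are all hypothetical.

```python
import subprocess
from pathlib import Path
from typing import List

# Image named in this section.
MANYLINUX_IMAGE = "quay.io/pypa/manylinux2014_x86_64:2022-10-25-fbea779"
# Assumption: the same interpreter that was used to install lib_.
PYTHON_BIN = "/opt/python/cp310-cp310/bin/python"


def docker_test_cmd(project_dir: Path,
                    script: str = "hgf_image2text.py") -> List[str]:
    """Build a docker command that runs the script against model/ and lib_/."""
    return [
        "docker", "run", "--rm",
        "-v", f"{project_dir.resolve()}:/root/work",  # hypothetical mount point
        "-w", "/root/work",  # run from the project root so relative paths work
        MANYLINUX_IMAGE,
        PYTHON_BIN, script,
    ]


def run_test(project_dir: Path) -> int:
    """Run the containerized test and return its exit code."""
    return subprocess.run(docker_test_cmd(project_dir)).returncode
```

Running the test in the same image and interpreter used to install lib_ catches version mismatches before the image is built.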

Once the test passes, package the image and upload it to Alibaba Cloud Container Registry (ACR).

2.5 Package and Upload the Image:

2.5.1 Prepare the image:

Create a Dockerfile in the same directory as model, lib_, and hgf_image2text.py, with the following content:

Obtain the Singdata Lakehouse bootstrap program (contact Singdata support for assistance) and extract it into the current directory. The current directory should now contain:

- the model and lib_ folders and the hgf_image2text.py and Dockerfile files

- bootstrap, lib, and cz, extracted from the Singdata bootstrap program
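The original Dockerfile content is not preserved. As an illustration only, the helper below writes a minimal Dockerfile that copies the build context to /src (matching the /src/bootstrap startup command configured in section 2.6) and lists which of the expected entries are still missing. The base image and the Dockerfile lines are assumptions, not the article's file.

```python
from pathlib import Path
from typing import List

# Entries the build context should contain at this point.
REQUIRED_ENTRIES = ["model", "lib_", "hgf_image2text.py", "Dockerfile",
                    "bootstrap", "lib", "cz"]

# Hypothetical minimal Dockerfile. The base image is an assumption; /src and
# port 9000 match the function settings used when creating the function.
DOCKERFILE = """\
FROM quay.io/pypa/manylinux2014_x86_64:2022-10-25-fbea779
COPY . /src
WORKDIR /src
EXPOSE 9000
CMD ["/src/bootstrap"]
"""


def write_dockerfile(context_dir: Path) -> Path:
    """Write the sketch Dockerfile into context_dir and return its path."""
    path = context_dir / "Dockerfile"
    path.write_text(DOCKERFILE)
    return path


def missing_entries(context_dir: Path) -> List[str]:
    """Return the required files and folders not found in context_dir."""
    return [name for name in REQUIRED_ENTRIES
            if not (context_dir / name).exists()]
```

Running `missing_entries(Path("."))` before building should return an empty list.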

2.5.2 Prepare the Cloud Image Repository (requires logging in to the Alibaba Cloud console):


- Go to Container Registry -> Instance List and open your Personal Edition instance.

- In the instance's left navigation, choose Repository Management -> Namespace, click Create Namespace, enter a namespace name, and click Create.

- In the left navigation, choose Repository Management -> Image Repository and click Create Image Repository: select the namespace, enter a repository name, set Repository Type to "Private", and click Next; then set Code Source to Local Repository and click Create Image Repository.

- In the image repository list, open the repository's details page. Its operation guide lists the steps for uploading images. The image version number (tag) can be any value you choose. Log in to the registry:
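The login command and example tag are not preserved. Personal Edition ACR instances are addressed as registry.<region>.aliyuncs.com/<namespace>/<repository>:<tag>; the sketch below composes that reference and the matching `docker login` command. The region, namespace, repository, and tag values are placeholders, and the repository details page remains the source of truth for the exact address.

```python
from typing import List


def registry_host(region: str) -> str:
    """Public endpoint of a Personal Edition ACR instance in a region."""
    return f"registry.{region}.aliyuncs.com"


def acr_image_ref(region: str, namespace: str, repo: str, tag: str) -> str:
    """Full image reference used for docker build/push and by Function Compute."""
    return f"{registry_host(region)}/{namespace}/{repo}:{tag}"


def docker_login_cmd(region: str, username: str) -> List[str]:
    # docker prompts for the registry password interactively.
    return ["docker", "login", f"--username={username}", registry_host(region)]
```

For example, `acr_image_ref("cn-hangzhou", "my-namespace", "hgf-image2text", "v1")` returns `"registry.cn-hangzhou.aliyuncs.com/my-namespace/hgf-image2text:v1"`.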

2.5.3 Upload the Image (Run Locally):

Package the image:

Upload the image:
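The packaging and upload commands are not preserved. A sketch, assuming the image reference copied from the repository details page: build the image from the current directory with `docker build -t`, then push it with `docker push`. The helper names are hypothetical.

```python
import subprocess
from pathlib import Path
from typing import List


def build_cmd(image_ref: str, context_dir: Path) -> List[str]:
    # Build from the directory holding the Dockerfile, model, lib_, etc.
    return ["docker", "build", "-t", image_ref, str(context_dir)]


def push_cmd(image_ref: str) -> List[str]:
    # Requires a prior `docker login` to the registry host.
    return ["docker", "push", image_ref]


def build_and_push(image_ref: str, context_dir: Path = Path(".")) -> None:
    for cmd in (build_cmd(image_ref, context_dir), push_cmd(image_ref)):
        subprocess.run(cmd, check=True)  # stop at the first failing step
```

Tagging at build time with the full registry reference avoids a separate `docker tag` step before the push.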

2.5.4 Test the Image (Run Locally):
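The local test commands are not preserved. A hedged sketch: start the image with the same startup command and port that section 2.6 configures in Function Compute (/src/bootstrap, 9000), then wait until something accepts TCP connections on that port. This only shows that the bootstrap is listening; the request format it expects is defined by Singdata and is not covered here.

```python
import socket
import time
from typing import List


def docker_run_cmd(image_ref: str, host_port: int = 9000) -> List[str]:
    # Map the container's listening port 9000 to host_port and start the
    # same entry point that Function Compute will use.
    return ["docker", "run", "--rm", "-p", f"{host_port}:9000",
            image_ref, "/src/bootstrap"]


def wait_for_port(host: str, port: int, timeout: float = 30.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:
            time.sleep(0.5)  # container still starting; retry
    return False
```

Start the container in one terminal (or with `subprocess.Popen`) and call `wait_for_port("127.0.0.1", 9000)` from another.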

2.6 Create the Function (requires logging in to the Alibaba Cloud console):

- Go to Function Compute (FC 2.0) -> Services and Functions and select the region you want to use at the top of the page. In the panel that pops up on the right, enter a service name and keep the other defaults. Click Show Advanced Options at the bottom, set Service Role to AliyunFCDefaultRole, and keep the remaining defaults (choose the public network and VPC access policies as needed).

- In the service list, open the service you just created and click Create Function.

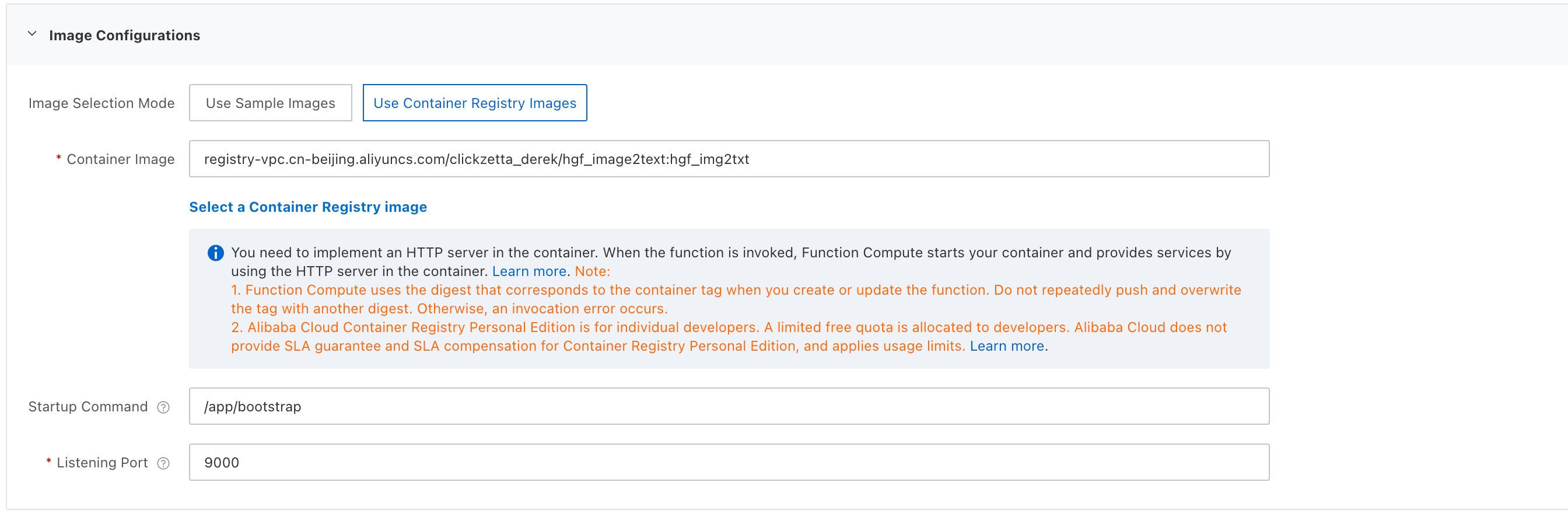

- On the Create Function page, select Use Container Image. Under Basic Settings, enter a function name (for example, hgf_image2txt), set Web Server Mode to Yes, and set Request Handler Type to Handle HTTP Requests.

- Under Image Configuration, select Use Image from ACR and choose the image you uploaded. Set Startup Command to /src/bootstrap and Listening Port to 9000.

- Under Advanced Configuration, setting vCPU and memory to 8 cores and 16 GB is recommended.

- Keep other configurations as default, and click Create.

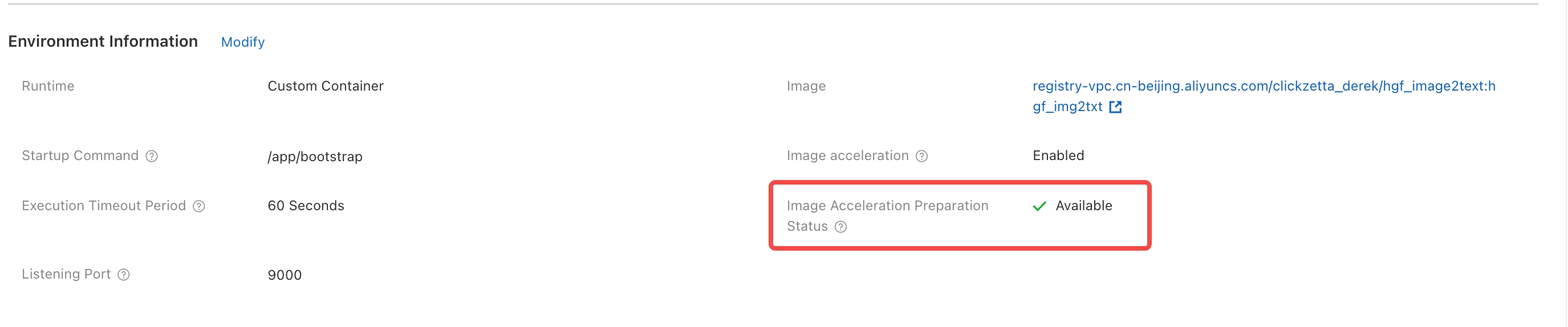

- In the function list, open the new function and go to Function Configuration. Check the image acceleration preparation status and wait until it shows "Available".

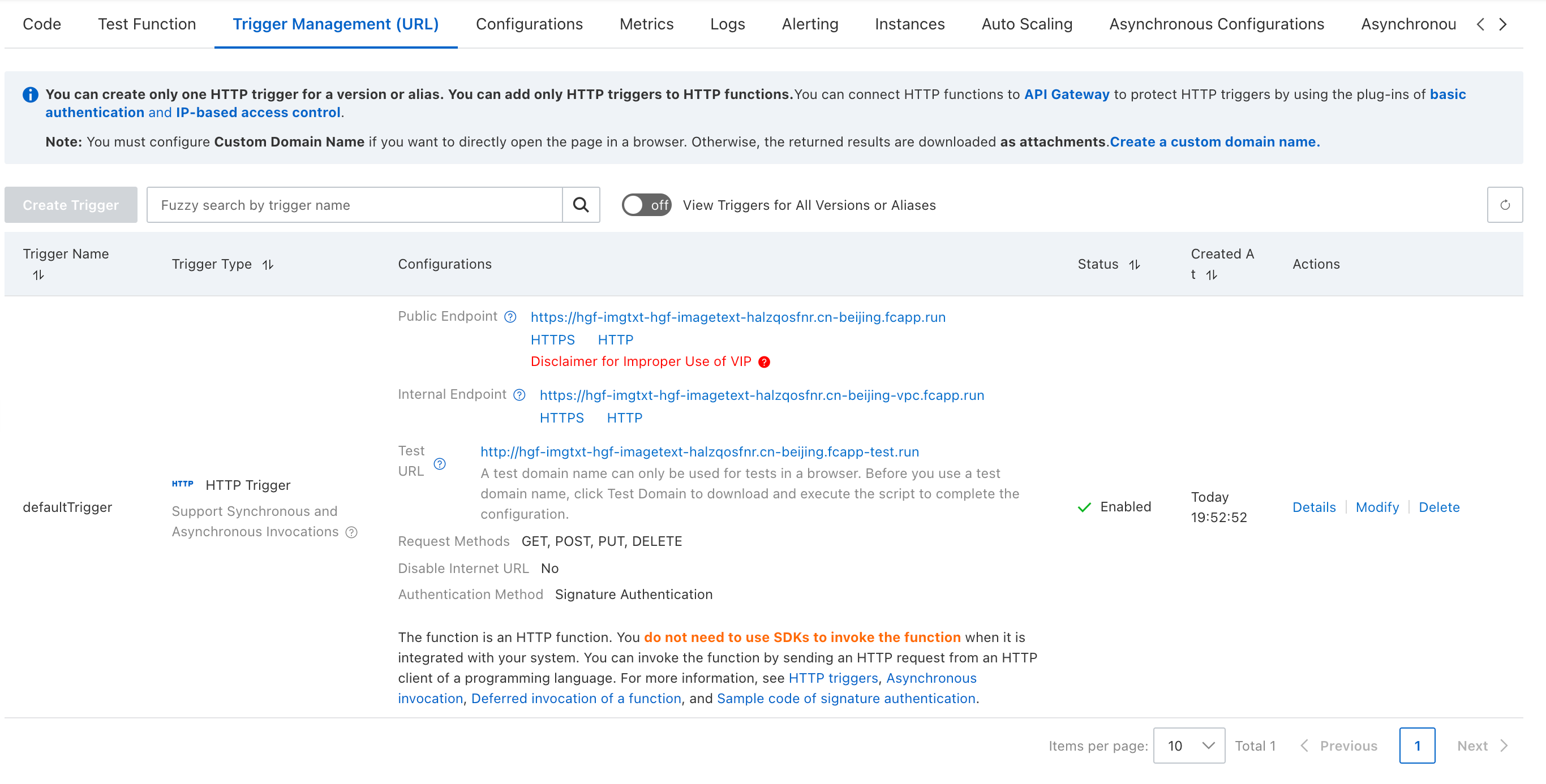

- Go to Trigger Management and copy the HTTP trigger's public network URL.

2.7 Create the Remote Function in Singdata Lakehouse (performed on the Singdata Lakehouse side):

Create the function:

Use the function (image URLs must use the https protocol):
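Because only https image URLs are recognized, it can help to filter input URLs before calling the function. A small sketch using the standard library's `urllib.parse.urlparse`; the helper names are hypothetical:

```python
from typing import Iterable, List, Tuple
from urllib.parse import urlparse


def is_https_url(url: str) -> bool:
    """True if url uses the https scheme and names a host."""
    parsed = urlparse(url.strip())
    return parsed.scheme == "https" and bool(parsed.netloc)


def split_by_scheme(urls: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Separate URLs the function can read from those it will not recognize."""
    ok: List[str] = []
    rejected: List[str] = []
    for url in urls:
        (ok if is_https_url(url) else rejected).append(url)
    return ok, rejected
```

Rejected URLs (http, oss, or malformed) can then be logged or rewritten before they reach the remote function.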

3. Appendix

Code: