Table Stream Change Data Capture

Table Stream is a feature of Singdata Lakehouse used to capture changes in table objects. By defining a Table Stream object, you can record and track data changes based on an existing table. This object leverages the multi-version history feature of Lakehouse Table, recording the specified version (or the latest version) of the source table as the initial read position when created. When you query the Table Stream using SQL, it returns all change records from the initial point to the current latest version.

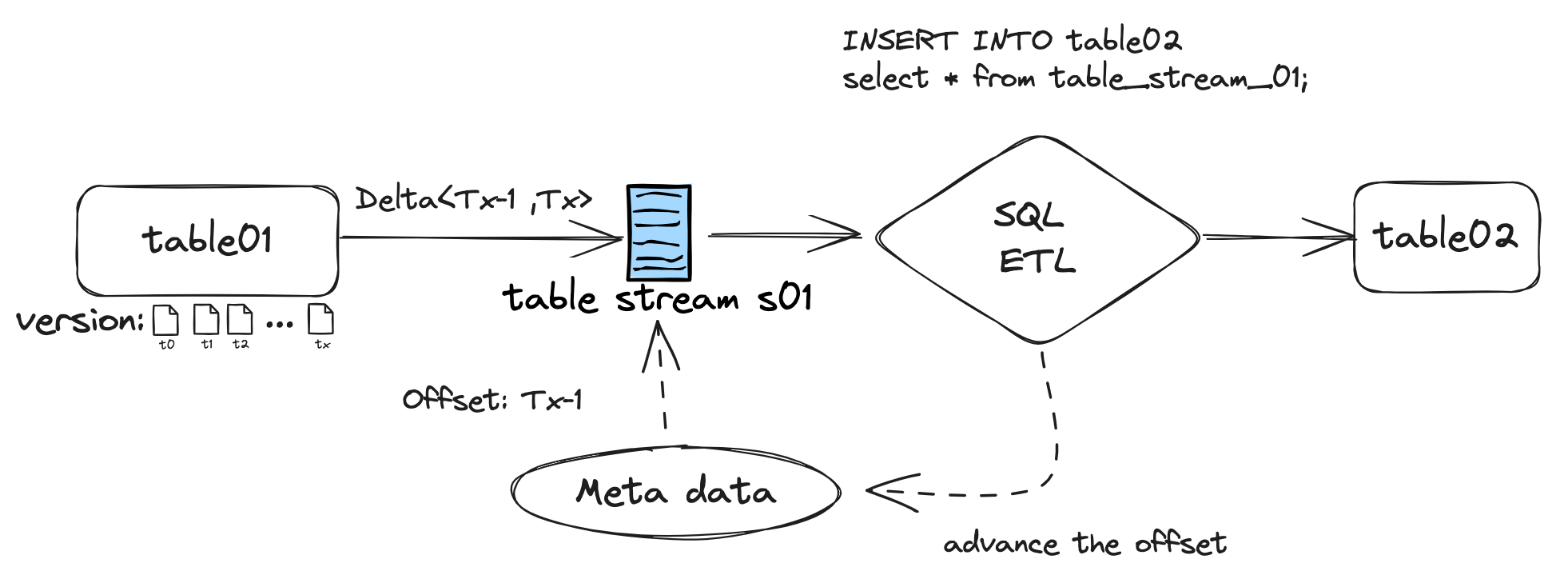

Below is a schematic diagram of how Table Stream works:

Table Stream does not store actual data; it only records and maintains the data version points of the source table. The points are updated to the latest data version only when DML statements such as INSERT, DELETE, UPDATE, MERGE, etc., are used to operate on the Table Stream.

Application Scenarios

- SQL ETL Tasks: Using Table Stream can simplify the identification of incremental data and improve the processing efficiency of ETL tasks. Traditional ETL tasks need to filter incremental data through partition filtering or WHERE condition filtering, which may generate additional system pressure and resource consumption in real-time scenarios. Table Stream identifies change data based on data versions, capturing incremental data without additional operations and calculations, thereby improving ETL job efficiency and saving computational overhead.

- Real-time Data Provision: Similar to database CDC (Change Data Capture), the Table Stream of the Lakehouse data platform can capture change data and continuously synchronize these data as detailed records to downstream systems.

Table Stream Types

Table Stream has two modes:

- STANDARD Mode: Tracks all DML changes of the source object, including inserts, updates, and deletes (including table truncation). This mode provides row-level changes by connecting and processing all delta data changes to provide row-level increments. Delta changes in Table Stream refer to data changes that occur between two transaction time points. For example, if a row is inserted and then updated after the offset of the Table Stream, the delta change is a new row. If a row is inserted and then deleted after the offset of the Table Stream, the delta change is no row. In other words, delta changes reflect the latest state of the source object, not historical changes.

- APPEND_ONLY Mode: Records only the INSERT operations of the object. Update and delete operations are not recorded. For example, if 10 rows are initially inserted into the table and then a delete operation is performed to delete 5 rows without moving the point, the Table Stream still records 10 row operations.

Using Table Stream

Creating a Table Stream

You can use the CREATE TABLE STREAM command to create a Table Stream. Here is an example:

Delete Table Stream

You can use the DROP TABLE STREAM command to delete a Table Stream. Here is an example:

Table Stream Capturing Data Change Timeliness

Table Stream senses data changes based on the metadata modification submission time of the object. The specific timeliness is as follows:

- DML method to modify data: After the DML task is successfully completed, the changed data will be visible in the Table Stream object.

- Bulkload: After the bulkload task is successfully completed, the changed data will be visible in the Table Stream object.

- Streaming ingestion: When writing data through the Ingestion Service streaming API, changes are submitted by default every 1 minute, and the changed data will be visible in the Table Stream object. Note: When performing SQL queries on the target table itself for streaming writes, the data is visible in real-time. This only constrains the timeliness of the Table Stream visibility based on the target table.