Incremental Computing Overview

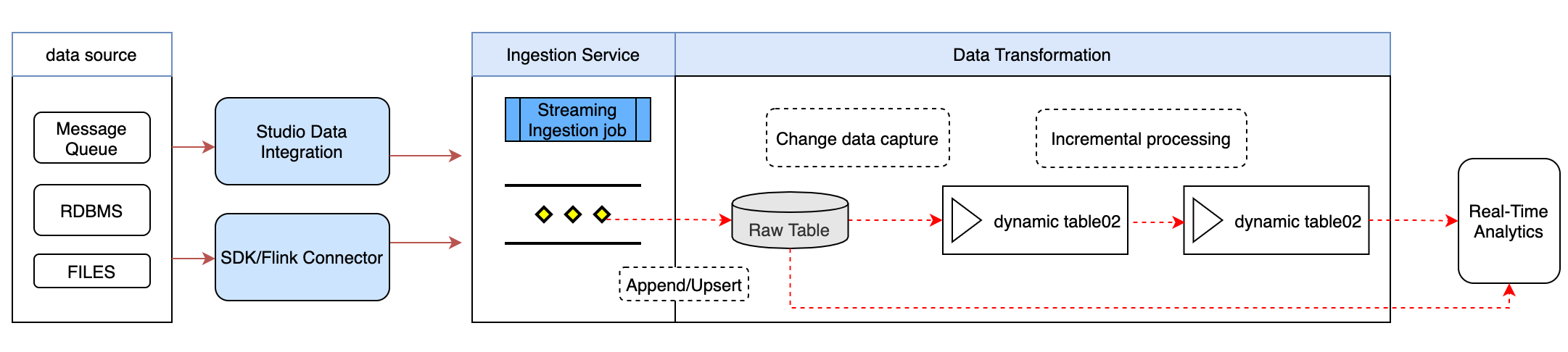

A streaming data pipeline is a set of tasks that continuously collect, process, and transform real-time data to produce results that meet business timeliness requirements. Streaming data processing is the foundation that lets analysts and business applications gain real-time insights and make real-time decisions.

Unlike periodic offline processing (a batch data pipeline), streaming data processing continuously produces up-to-date results by orchestrating real-time data collection tasks together with SQL tasks that support incremental data processing. Incremental processing handles only the data that has changed since the previous run, which improves processing efficiency and reduces cost.
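
The difference between full and incremental processing can be illustrated with a minimal Python sketch. It is only a conceptual model, not the Lakehouse implementation: the function names `full_recompute` and `apply_increment` and the sample rows are hypothetical, and the aggregation is a simple per-key sum.

```python
from collections import defaultdict


def full_recompute(rows):
    """Batch style: scan every row to rebuild the per-key totals."""
    totals = defaultdict(int)
    for key, amount in rows:
        totals[key] += amount
    return dict(totals)


def apply_increment(totals, new_rows):
    """Incremental style: update existing totals using only the new rows."""
    updated = dict(totals)
    for key, amount in new_rows:
        updated[key] = updated.get(key, 0) + amount
    return updated


# Hypothetical sample data: rows already processed, and rows that arrived later.
base = [("a", 10), ("b", 5)]
delta = [("a", 3), ("c", 7)]

totals = full_recompute(base)
incremental = apply_increment(totals, delta)

# Both approaches reach the same result; the incremental one reads only `delta`.
assert incremental == full_recompute(base + delta)
```

The incremental path touches only the two new rows instead of rescanning the whole input, which is where the efficiency gain comes from as the base data grows.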

In Singdata Lakehouse, the overall streaming data processing flow is as follows:

The product features of streaming data processing include:

| Feature | Description |
|---|---|
| Real-time Data Loading | Real-time Data Loading Service: Provides a Streaming API through the SDK and connectors, supporting real-time append and update writes as a system-managed service in which written data becomes visible within seconds. You can call the interface from the SDK or the Flink Connector to write data to the target table. Studio Data Integration Service: A built-in data integration tool that extracts data in real time from various sources (such as Kafka and database CDC) and writes it to the Lakehouse target table through the Streaming API. |
| Incremental Data Processing | Dynamic Table: Defines data processing logic declaratively, supporting any SQL syntax and operators. The system automatically reads incremental changes from the base table and adaptively chooses between incremental and full algorithms to optimize processing efficiency. Users can create one or more dynamic tables and set a scheduling strategy for them. The system automatically identifies the dependencies and schedules execution continuously, which greatly simplifies the development of real-time data processing. Note: The minimum supported scheduling interval is currently 1 minute. |
| Change Data Capture | Table Stream: A built-in SQL object type that is created on a specified table and records that table's data changes (CDC). A Table Stream can be queried with SQL to obtain the change records between two specified data versions, for example the changes from 5 minutes ago to the current time. Through a Table Stream, downstream SQL ETL jobs can easily read and process table change data, or synchronize the changes to external systems. |
| Continuous Scheduling | The system provides two scheduling methods. (1) Dynamic table self-managed scheduling cycle: In the dynamic table DDL, you can set the scheduling cycle by specifying the INTERVAL keyword. (2) Studio task scheduling: Create SQL tasks in the Web IDE and set the task scheduling cycle. This method can schedule both dynamic tables and SQL ETL jobs that use Table Stream. Note: The Studio scheduling system provides better task execution observability and operational alerting. |
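
To make the Table Stream idea of "change records between two data versions" more concrete, here is a small Python sketch. It is a toy in-memory model under stated assumptions, not the Lakehouse API: the `VersionedTable` and `Change` classes, the `changes_between` method, and the convention that every write creates a new version are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Change:
    version: int          # table version produced by this change (assumed model)
    op: str               # "INSERT", "UPDATE", or "DELETE"
    key: str
    value: object = None


class VersionedTable:
    """Toy table that logs every change together with a version number."""

    def __init__(self):
        self.version = 0
        self.rows = {}
        self.log = []

    def _record(self, op, key, value=None):
        self.version += 1
        self.log.append(Change(self.version, op, key, value))

    def upsert(self, key, value):
        op = "UPDATE" if key in self.rows else "INSERT"
        self.rows[key] = value
        self._record(op, key, value)

    def delete(self, key):
        if key in self.rows:
            del self.rows[key]
            self._record("DELETE", key)

    def changes_between(self, start_version, end_version):
        """Return changes with start_version < version <= end_version."""
        return [c for c in self.log if start_version < c.version <= end_version]


table = VersionedTable()
table.upsert("order-1", 100)      # version 1
checkpoint = table.version        # a downstream job remembers where it stopped
table.upsert("order-1", 120)      # version 2
table.upsert("order-2", 50)       # version 3
table.delete("order-1")           # version 4

# The downstream job reads only what changed since its checkpoint.
changes = table.changes_between(checkpoint, table.version)
ops = [(c.op, c.key) for c in changes]
```

A downstream ETL job built this way keeps a checkpoint version, processes the returned change records, and then advances the checkpoint, so each run handles only new changes rather than the full table.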