Quick Start Query Analysis Using Sample Data

Tutorial Overview

Through this tutorial, you will learn how to use the built-in sample datasets of the Lakehouse platform to quickly perform query analysis using SQL without the need to prepare data in advance, thereby evaluating SQL functionality and performance.

The sample datasets are shared by the Singdata platform under the dataset named CLICKZETTA_SAMPLE_DATA, available for query by all accounts. This tutorial will use the TPC-H 100gb dataset as an example to demonstrate how to quickly complete TPC-H test set queries in Lakehouse and evaluate processing performance.

The tutorial will be completed through the following steps:

- Environment Preparation: Check the raw data using the sample dataset and create a compute cluster for testing

- Initiate Queries: Use the Studio Web environment to create SQL queries and complete 22 TPC-H SQL queries

- Change Cluster Size: Adjust the cluster size, expanding it to 4 times the previous size

- Initiate Queries: Use the resized cluster to complete the 22 TPC-H SQL queries again

- Observe the changes in query latency under different cluster specifications

Step01. Preparation

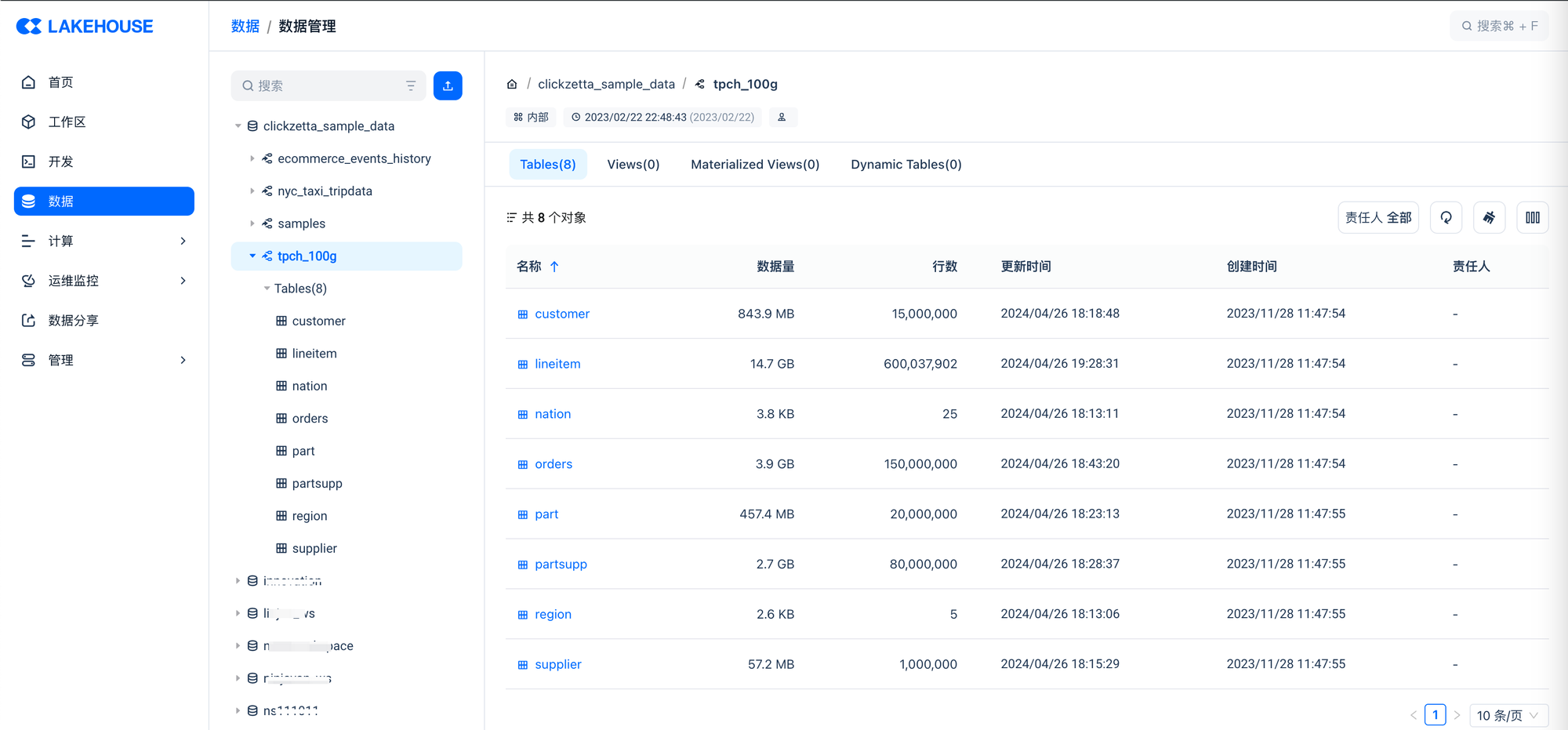

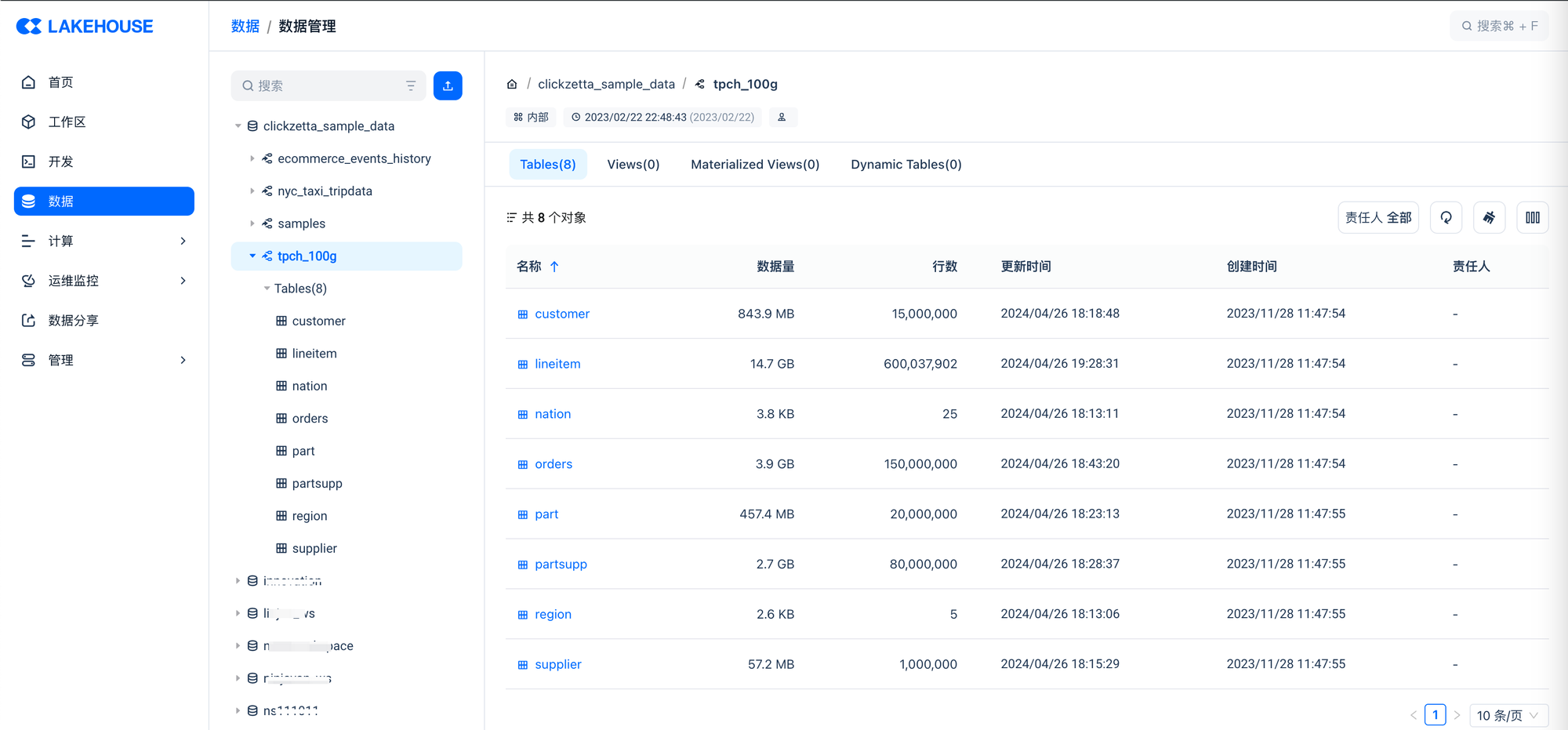

First, after logging into the Lakehouse Web console and entering the specified workspace, you can access the "Data" module to check if the relevant tables under "clickzetta_sample_data.tpch_100gb" exist in the data object list under data management.

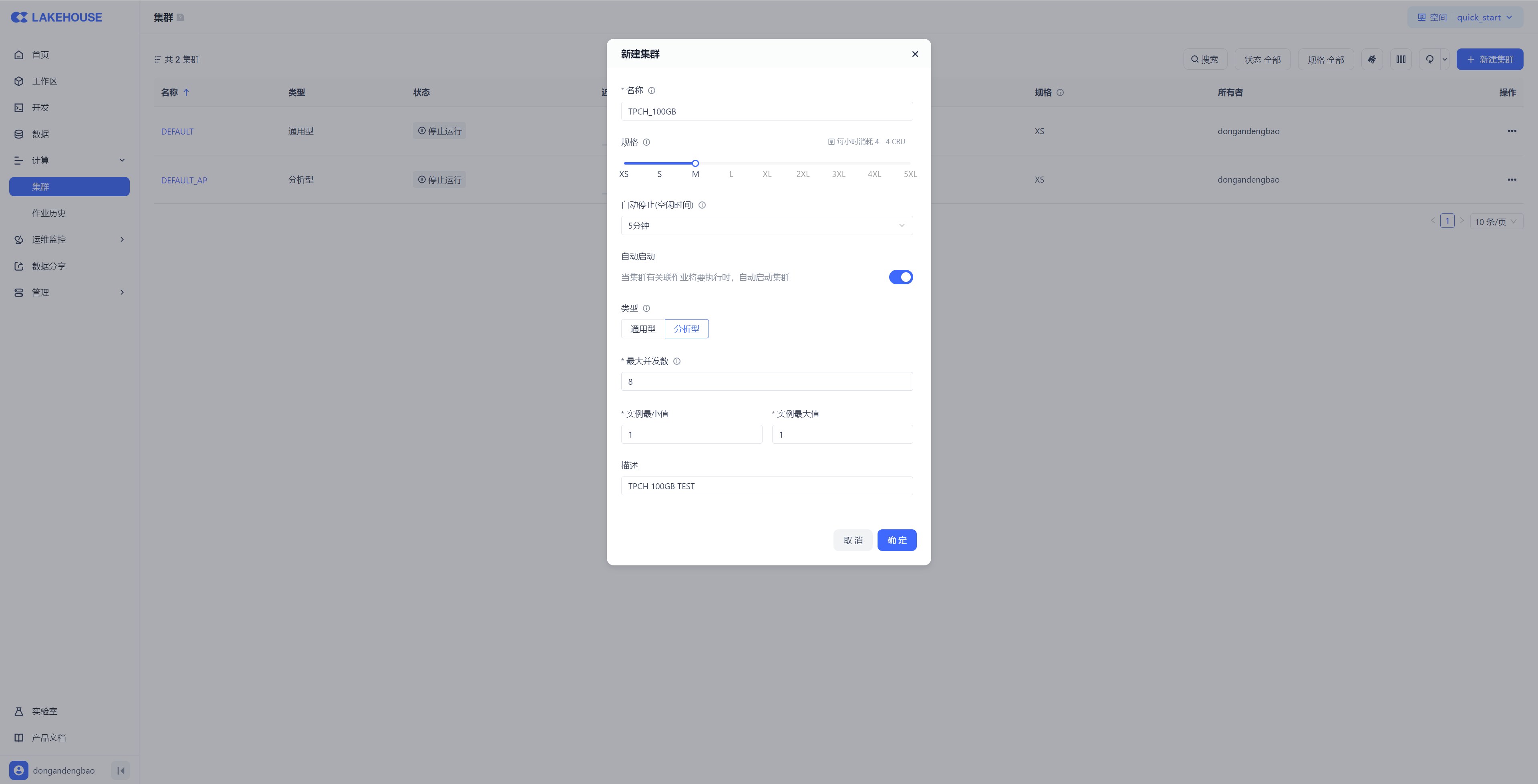

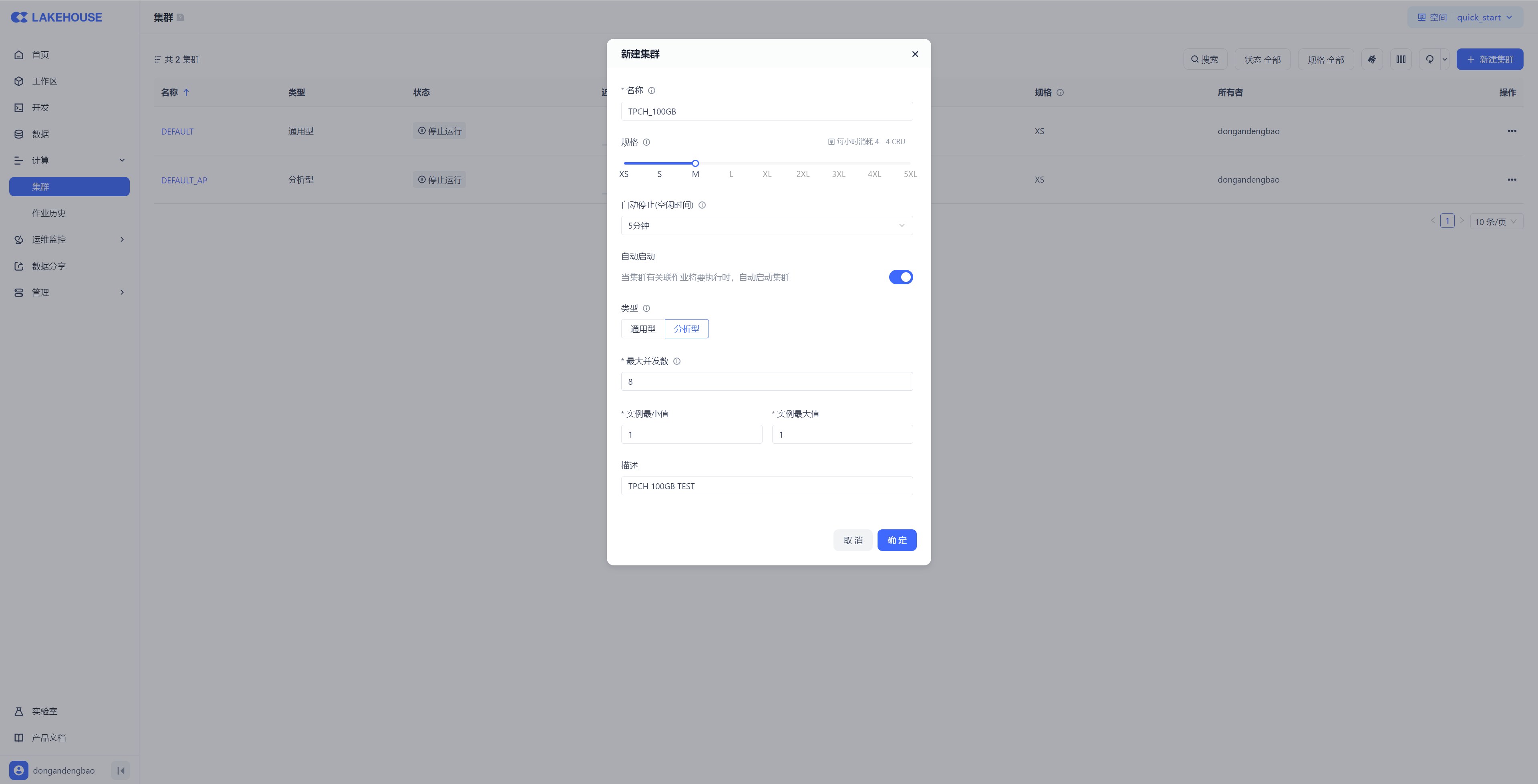

Next, we will temporarily create an independent compute cluster for query analysis for this test. You can create a new cluster through the "Compute → Clusters" menu in the Lakehouse Web console using the page wizard.

At the same time, you can also create a cluster using SQL commands. When operating with SQL commands, you can stay within the SQL development context and control cluster creation, scaling, pausing/resuming, and destruction actions through SQL commands during Ad-hoc or ETL development processes, often improving the efficiency of computing resource operations.

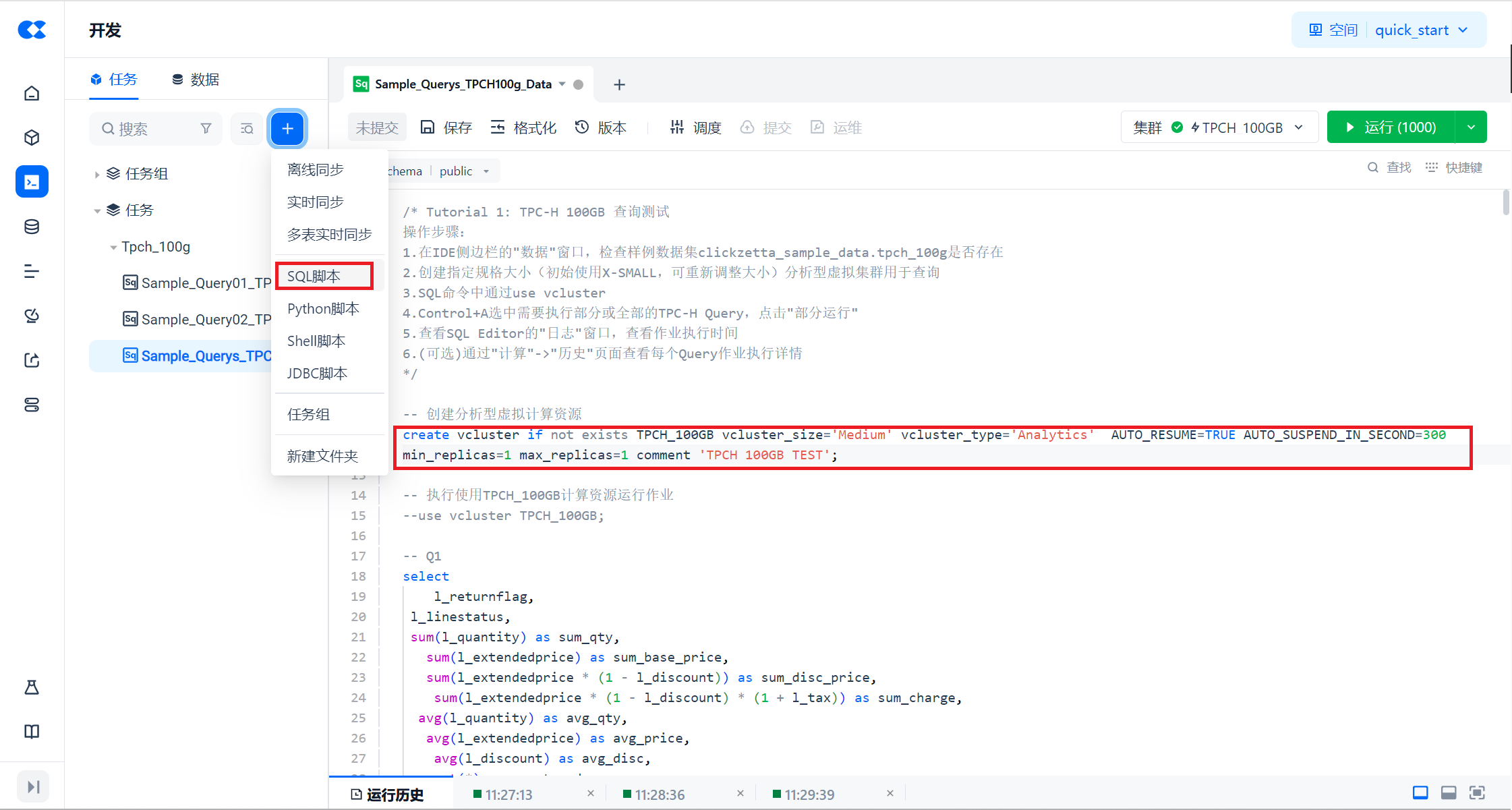

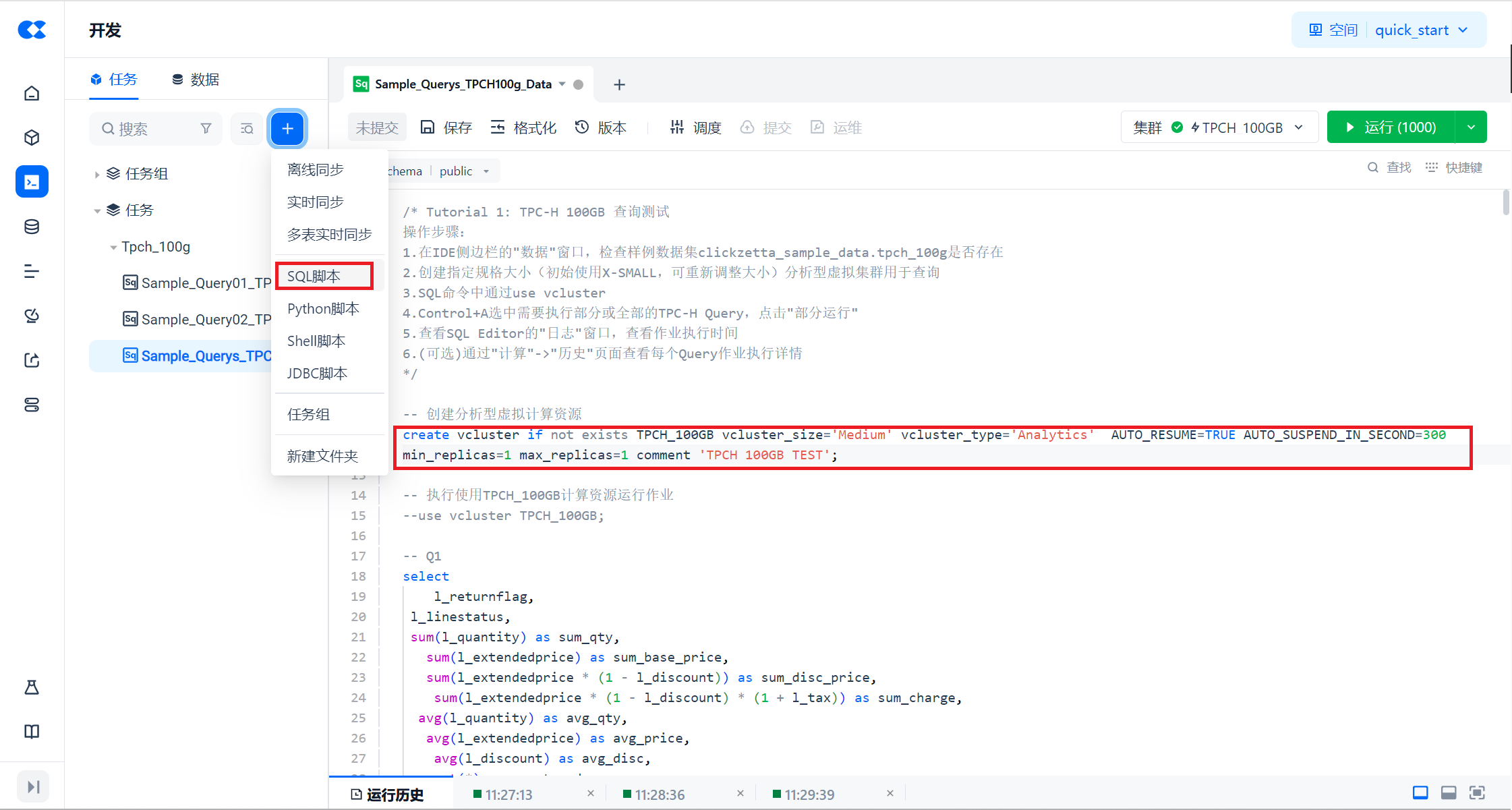

In this tutorial, you can quickly create a cluster by creating a new SQL script task in the "Development" module and executing the following script.

-- Create analytical virtual computing resources

create vcluster if not exists TPCH_100GB vcluster_size='Medium' vcluster_type='Analytics' AUTO_RESUME=TRUE AUTO_SUSPEND_IN_SECOND=300 min_replicas=1 max_replicas=1 comment 'TPCH 100GB TEST';

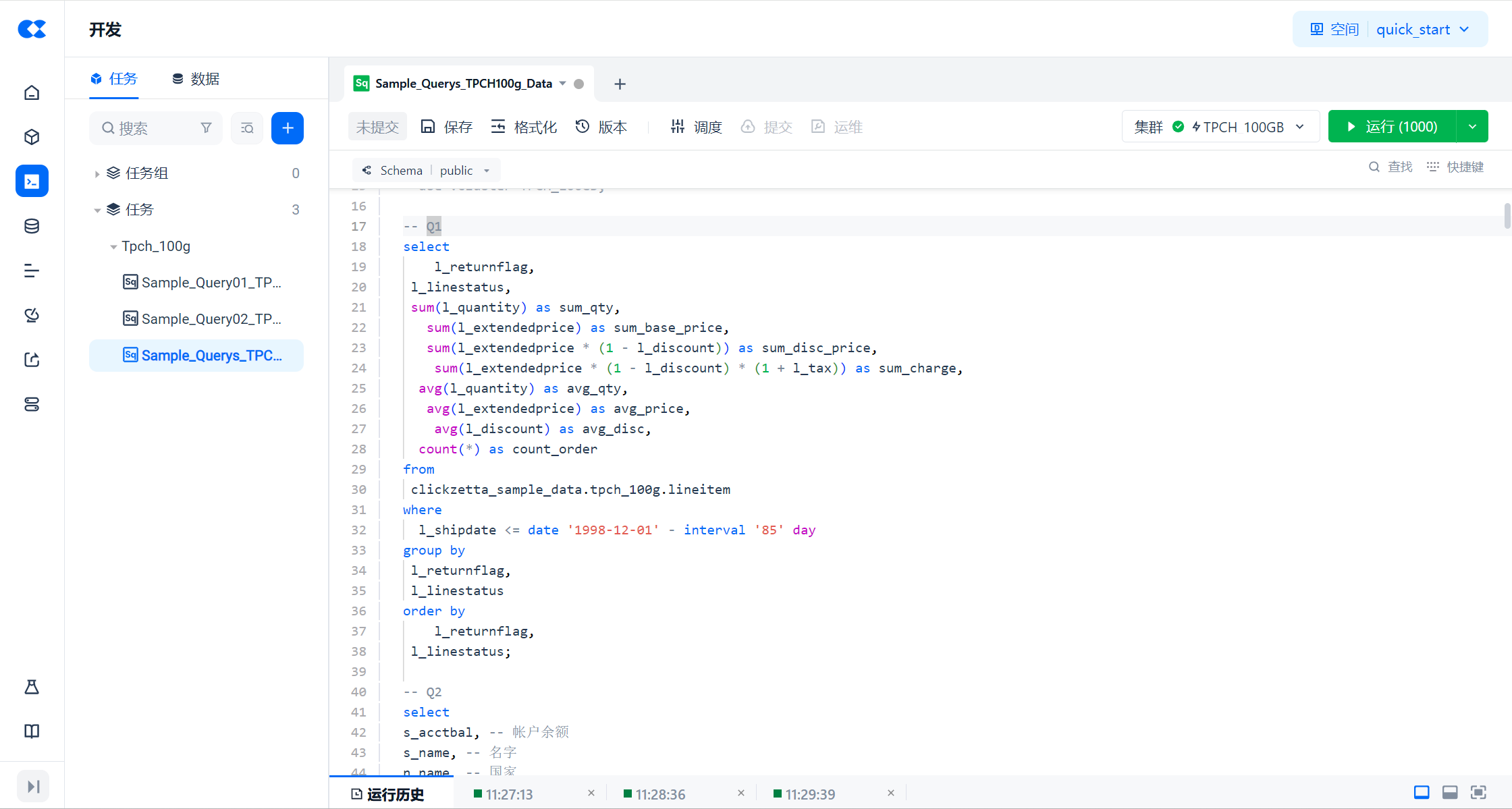

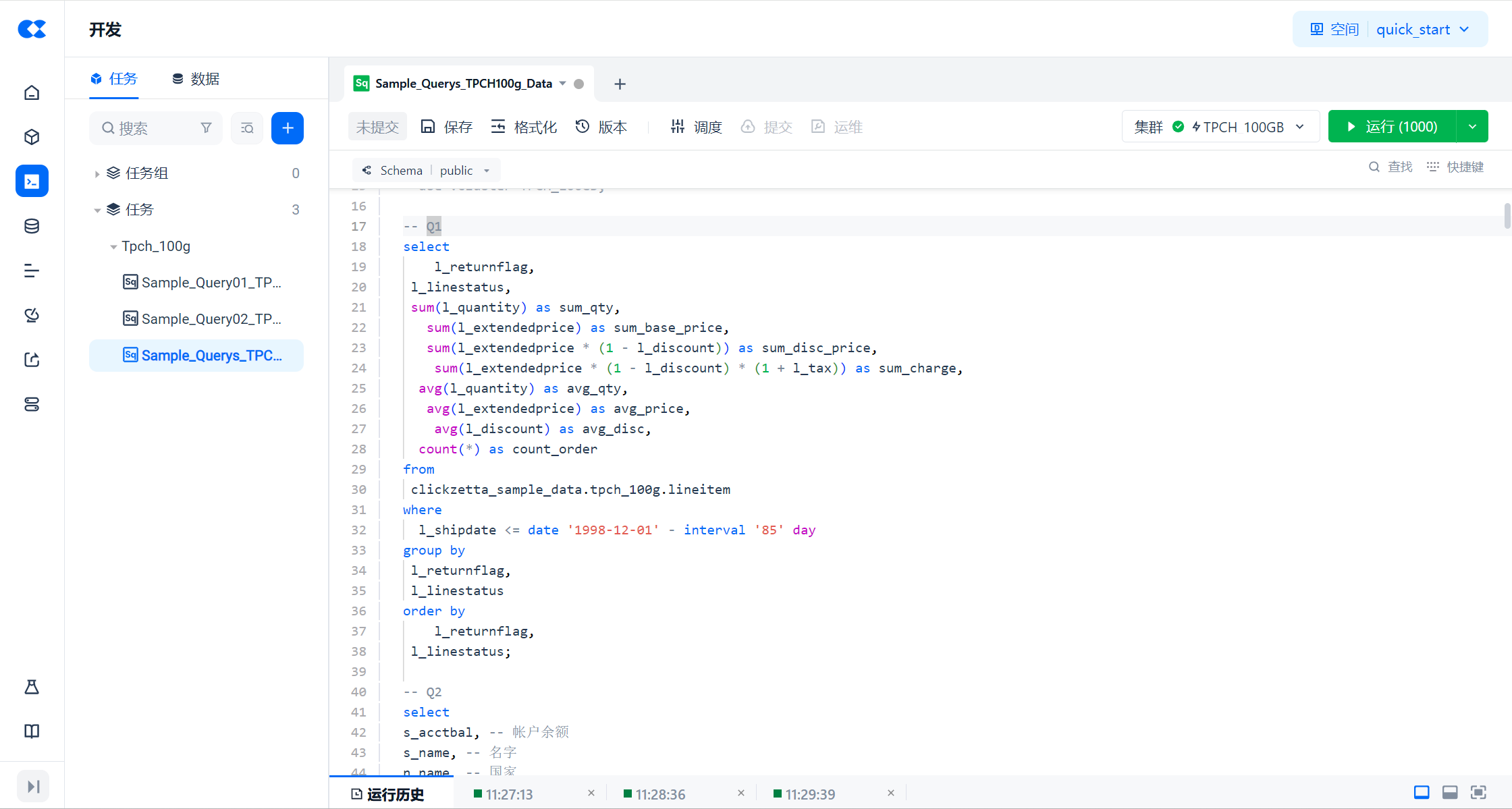

In the "Development" module, create a new SQL script task, enter the 22 TPC-H query statements, select the test cluster you just created from the "Cluster" dropdown list, then select all scripts in the task and click the "Run" button to perform serial queries.

The query script is as follows:

-- Execute the job using TPCH_100GB computing resources

use vcluster TPCH_100GB;

-- Set the query tag

set query_tag='tpch100g_benchmark';

-- Q1

select

l_returnflag,

l_linestatus,

sum(l_quantity) as sum_qty,

sum(l_extendedprice) as sum_base_price,

sum(l_extendedprice * (1 - l_discount)) as sum_disc_price,

sum(l_extendedprice * (1 - l_discount) * (1 + l_tax)) as sum_charge,

avg(l_quantity) as avg_qty,

avg(l_extendedprice) as avg_price,

avg(l_discount) as avg_disc,

count(*) as count_order

from

clickzetta_sample_data.tpch_100g.lineitem

where

l_shipdate <= date '1998-12-01' - interval '85' day

group by

l_returnflag,

l_linestatus

order by

l_returnflag,

l_linestatus;

-- Q2

select

s_acctbal, -- Account balance

s_name, -- Name

n_name, -- Country

p_partkey, -- Part number

p_mfgr, -- Manufacturer

s_address, -- Supplier address

s_phone, -- Phone number

s_comment -- Comment

from

clickzetta_sample_data.tpch_100g.part,

clickzetta_sample_data.tpch_100g.supplier,

clickzetta_sample_data.tpch_100g.partsupp,

clickzetta_sample_data.tpch_100g.nation,

clickzetta_sample_data.tpch_100g.region

where

p_partkey = ps_partkey

and s_suppkey = ps_suppkey

and p_size = 15 -- Specified size, randomly chosen within the range [1, 50]

and p_type like '%BRASS' -- Specified type, randomly chosen within the range specified by TPC-H standard

and s_nationkey = n_nationkey

and n_regionkey = r_regionkey

and r_name = 'EUROPE' -- Specified region, randomly chosen within the range specified by TPC-H standard

and ps_supplycost = (

select

min(ps_supplycost) -- Aggregate function

from -- Overlapping tables with the parent query

clickzetta_sample_data.tpch_100g.partsupp,

clickzetta_sample_data.tpch_100g.supplier,

clickzetta_sample_data.tpch_100g.nation,

clickzetta_sample_data.tpch_100g.region

where

p_partkey = ps_partkey

and s_suppkey = ps_suppkey

and s_nationkey = n_nationkey

and n_regionkey = r_regionkey

and r_name = 'EUROPE'

)

order by

s_acctbal desc,

n_name,

s_name,

p_partkey

limit 100;

-- Q3

select

l_orderkey,

sum(l_extendedprice * (1 - l_discount)) as revenue, -- Potential revenue, aggregate operation

o_orderdate,

o_shippriority

from

clickzetta_sample_data.tpch_100g.customer,

clickzetta_sample_data.tpch_100g.orders,

clickzetta_sample_data.tpch_100g.lineitem

where

c_mktsegment = 'BUILDING' -- Randomly chosen within the range specified by TPC-H standard

and c_custkey = o_custkey

and l_orderkey = o_orderkey

and o_orderdate < '1995-03-15' -- Specified date range, randomly chosen within [1995-03-01, 1995-03-31]

and l_shipdate > '1995-03-15' -- Specified date range, randomly chosen within [1995-03-01, 1995-03-31]

group by

l_orderkey, -- Order identifier

o_orderdate, -- Order date

o_shippriority -- Shipping priority

order by

revenue desc, -- Descending order, placing the highest potential revenue at the top

o_orderdate

limit 10;

-- Q4

select

o_orderpriority,

count(*) as order_count

from

clickzetta_sample_data.tpch_100g.orders

where

o_orderdate >= date '1993-10-01'

and o_orderdate < date '1993-10-01' + interval '3' month

and exists (

select

*

from

clickzetta_sample_data.tpch_100g.lineitem

where

l_orderkey = o_orderkey

and l_commitdate < l_receiptdate

)

group by

o_orderpriority

order by

o_orderpriority;

-- Q5

select

n_name,

sum(l_extendedprice * (1 - l_discount)) as revenue

from

clickzetta_sample_data.tpch_100g.customer,

clickzetta_sample_data.tpch_100g.orders,

clickzetta_sample_data.tpch_100g.lineitem,

clickzetta_sample_data.tpch_100g.supplier,

clickzetta_sample_data.tpch_100g.nation,

clickzetta_sample_data.tpch_100g.region

where

c_custkey = o_custkey

and l_orderkey = o_orderkey

and l_suppkey = s_suppkey

and c_nationkey = s_nationkey

and s_nationkey = n_nationkey

and n_regionkey = r_regionkey

and r_name = 'ASIA' -- Specified region, randomly chosen within the range specified by TPC-H standard

and o_orderdate >= '1994-01-01' -- DATE is randomly chosen from a year between 1993 and 1997, January 1st

and o_orderdate < date '1996-01-01' + interval '1' year

group by

n_name -- Group by name

order by

revenue desc; -- Sort by revenue in descending order, note the difference between group and sort clauses

-- Q6

select

sum(l_extendedprice * l_discount) as revenue -- Potential revenue increase

from

clickzetta_sample_data.tpch_100g.lineitem

where

l_shipdate >= '1994-01-01' -- DATE is randomly chosen from a year between 1993 and 1997, January 1st

and l_shipdate < date '1996-01-01' + interval '1' year -- Within a year

and l_discount between 0.06 - 0.01 and 0.06 + 0.01

and l_quantity < 24; -- QUANTITY is randomly chosen within the range [24, 25]

-- Q7

select

supp_nation, -- Supplier nation

cust_nation, -- Customer nation

l_year,

sum(volume) as revenue -- Annual shipping revenue

from

(

select

n1.n_name as supp_nation,

n2.n_name as cust_nation,

extract(year from l_shipdate) as l_year,

l_extendedprice * (1 - l_discount) as volume

from

clickzetta_sample_data.tpch_100g.supplier,

clickzetta_sample_data.tpch_100g.lineitem,

clickzetta_sample_data.tpch_100g.orders,

clickzetta_sample_data.tpch_100g.customer,

clickzetta_sample_data.tpch_100g.nation n1,

clickzetta_sample_data.tpch_100g.nation n2

where

s_suppkey = l_suppkey

and o_orderkey = l_orderkey

and c_custkey = o_custkey

and s_nationkey = n1.n_nationkey

and c_nationkey = n2.n_nationkey

and ( -- Different values for NATION2 and NATION1, indicating cross-country shipping

(n1.n_name = 'FRANCE' and n2.n_name = 'GERMANY')

or (n1.n_name = 'GERMANY' and n2.n_name = 'FRANCE')

)

and l_shipdate between '1995-01-01' and '1996-12-31'

) as shipping

group by

supp_nation,

cust_nation,

l_year

order by

supp_nation,

cust_nation,

l_year;

-- Q8

select

o_year, -- Year

sum(case

when nation = 'BRAZIL' then volume -- Specified country, randomly chosen within the range specified by TPC-H standard

else 0

end) / sum(volume) as mkt_share -- Market share: percentage of revenue from a specific type of product; aggregate operation

from

(

select

extract(year from o_orderdate) as o_year, -- Extract year

l_extendedprice * (1 - l_discount) as volume, -- Revenue from a specific type of product

n2.n_name as nation

from

clickzetta_sample_data.tpch_100g.part,

clickzetta_sample_data.tpch_100g

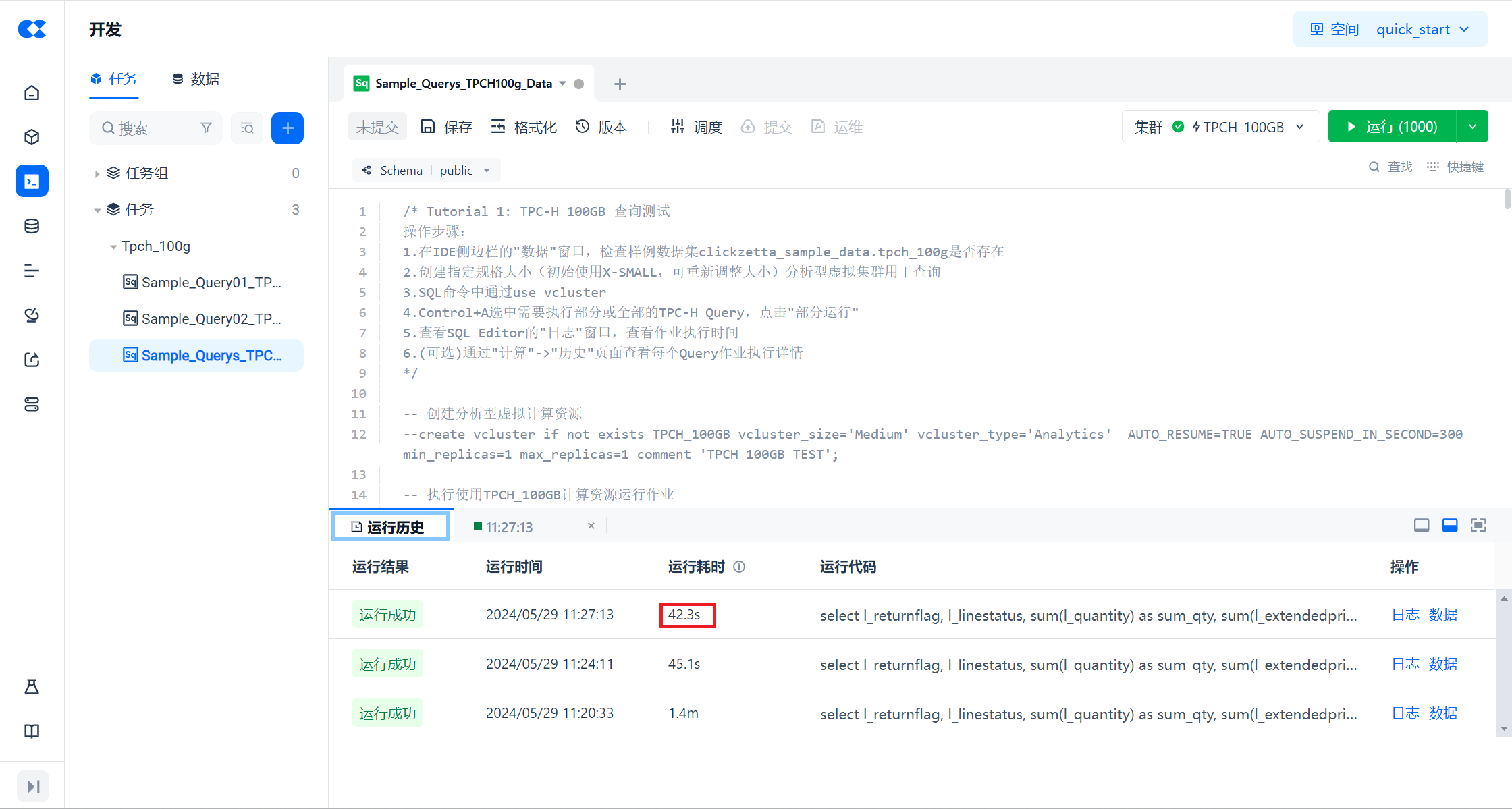

After execution, you can view the runtime of this task through the current SQL Editor's execution history.

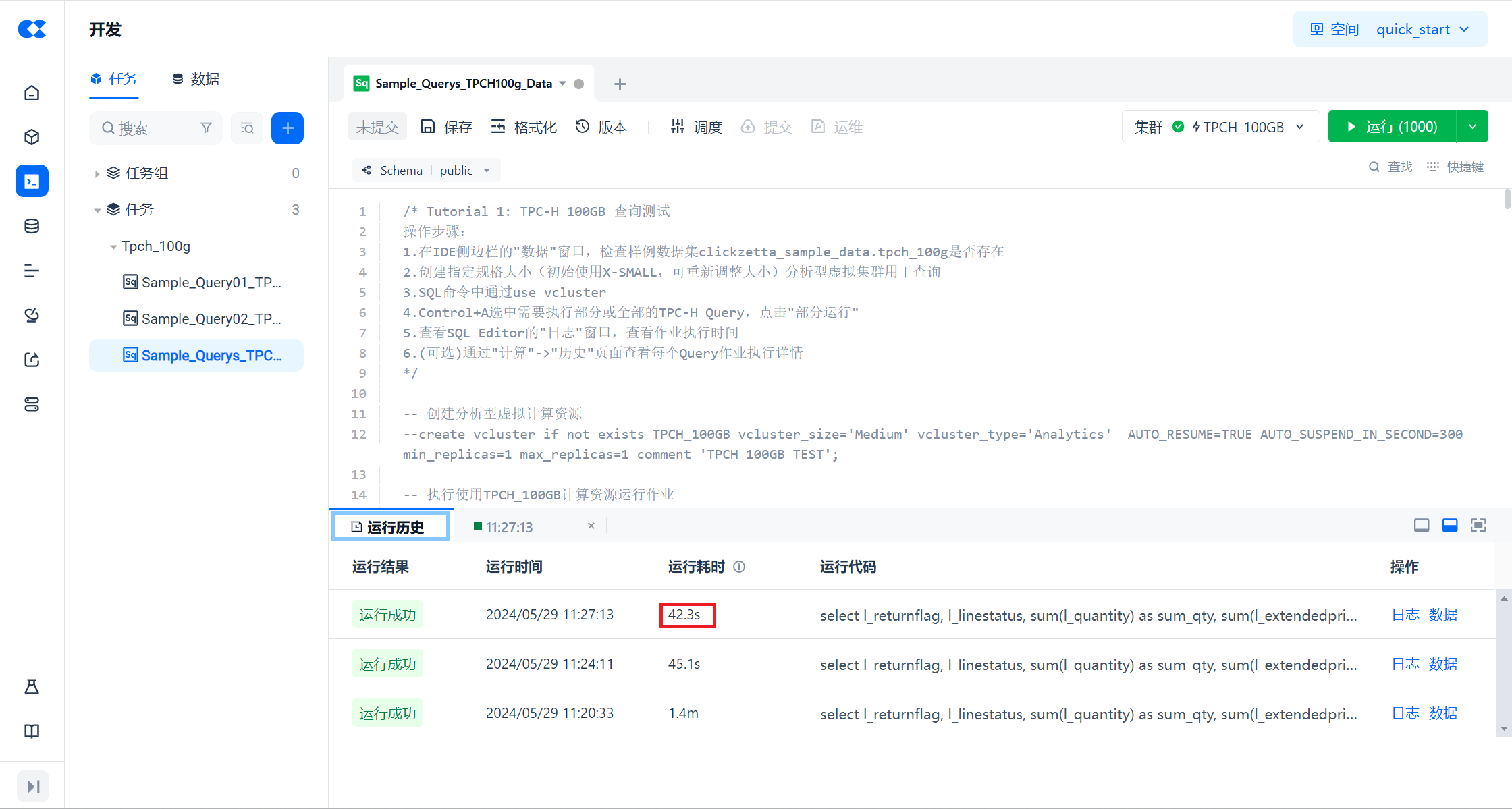

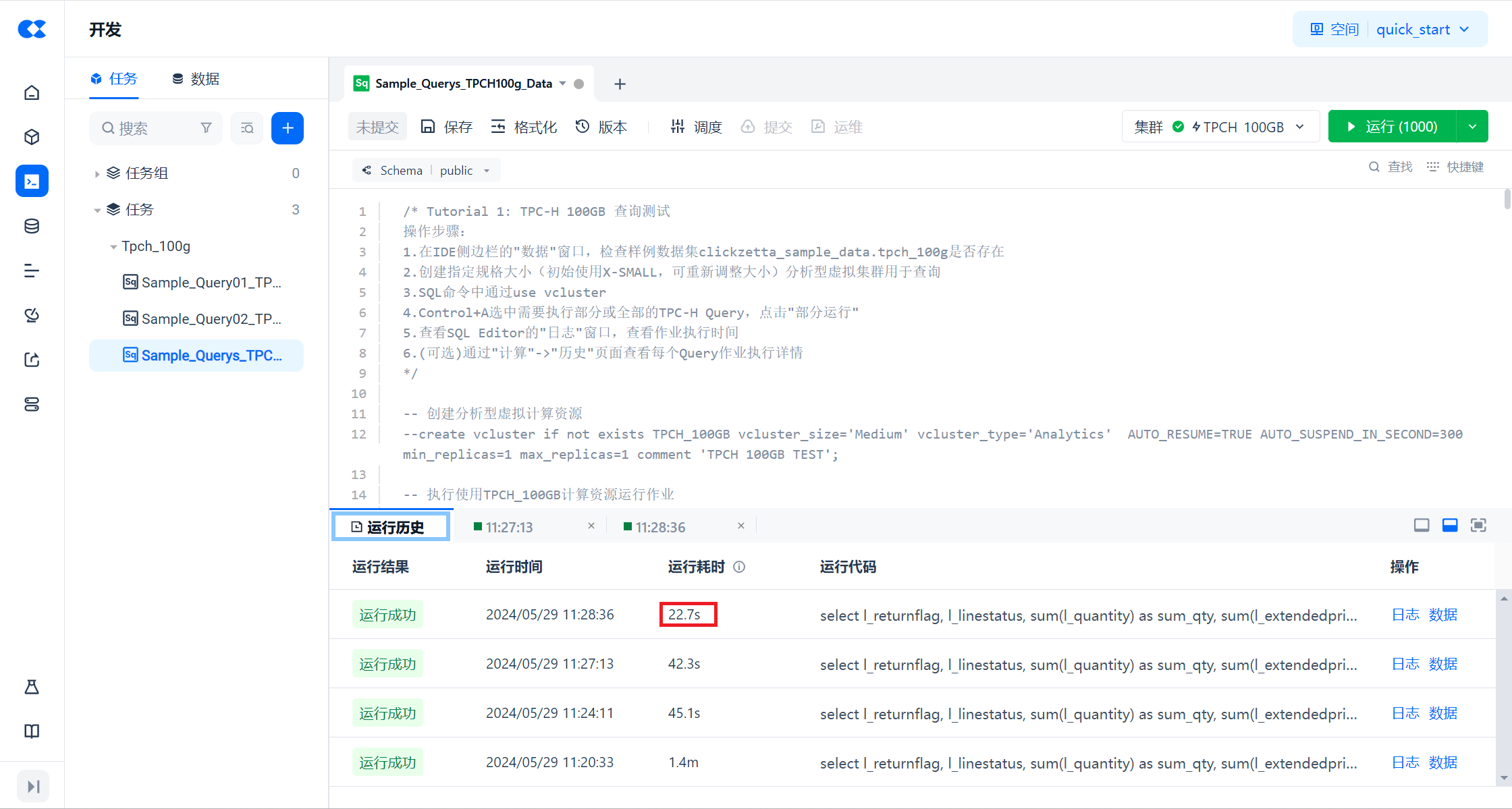

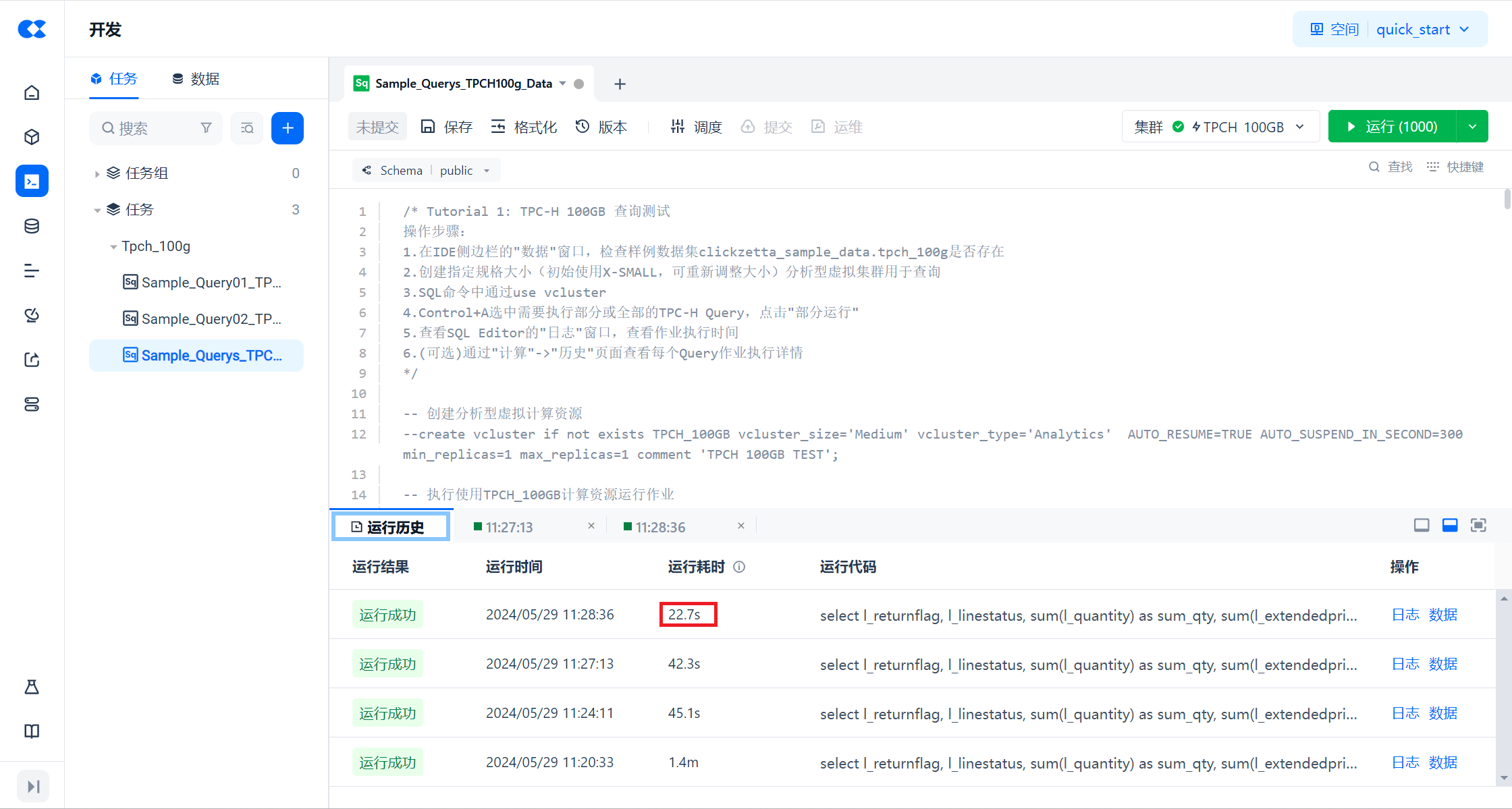

If you want to perform performance testing, you can execute the query more than twice consecutively so that the computing cluster can fully cache the data for optimal performance. At the same time, Singdata Lakehouse also provides an active system caching feature, which is not covered in this tutorial. Below is the result of the second run, showing the performance improvement after the computing cluster caches the data compared to the first run without caching.

If you want to see the execution details of each of the 22 queries, you can access "Compute → Job History" and filter the query history by the query tag "tpch100g_benchmark".

## Step3. Expand the cluster size and query the sample data with TPC-H again

Through the "Compute → Cluster" management page, you can modify the size of the test cluster you just created from M to L, where the L size is twice that of M.

Or execute the following command in the SQL script to modify:

```sql

-- Modify cluster size

alter vcluster TPCH_100GB SET VCLUSTER_SIZE = 'LARGE';

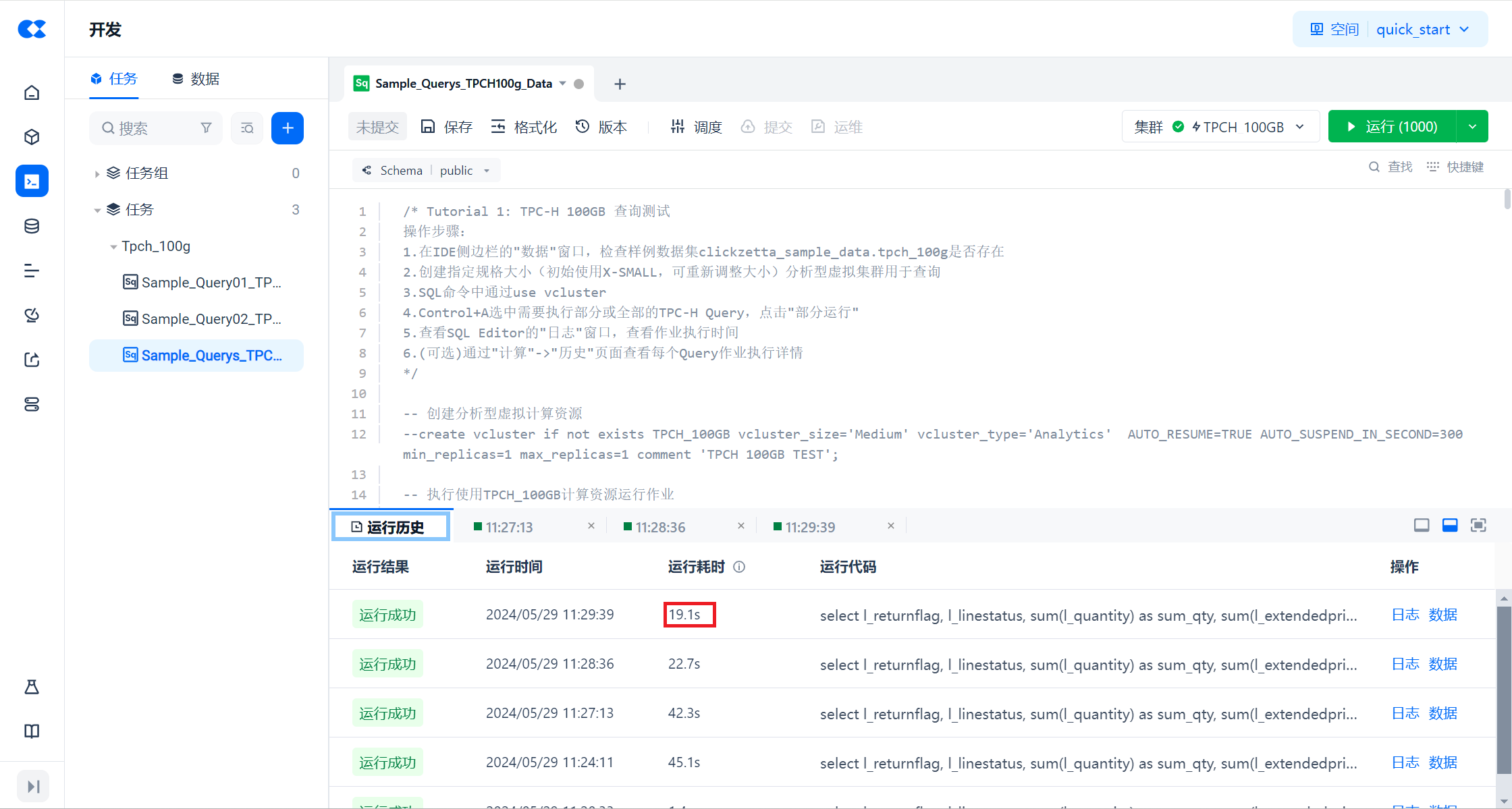

After modification, use the resized cluster to perform the query test again.

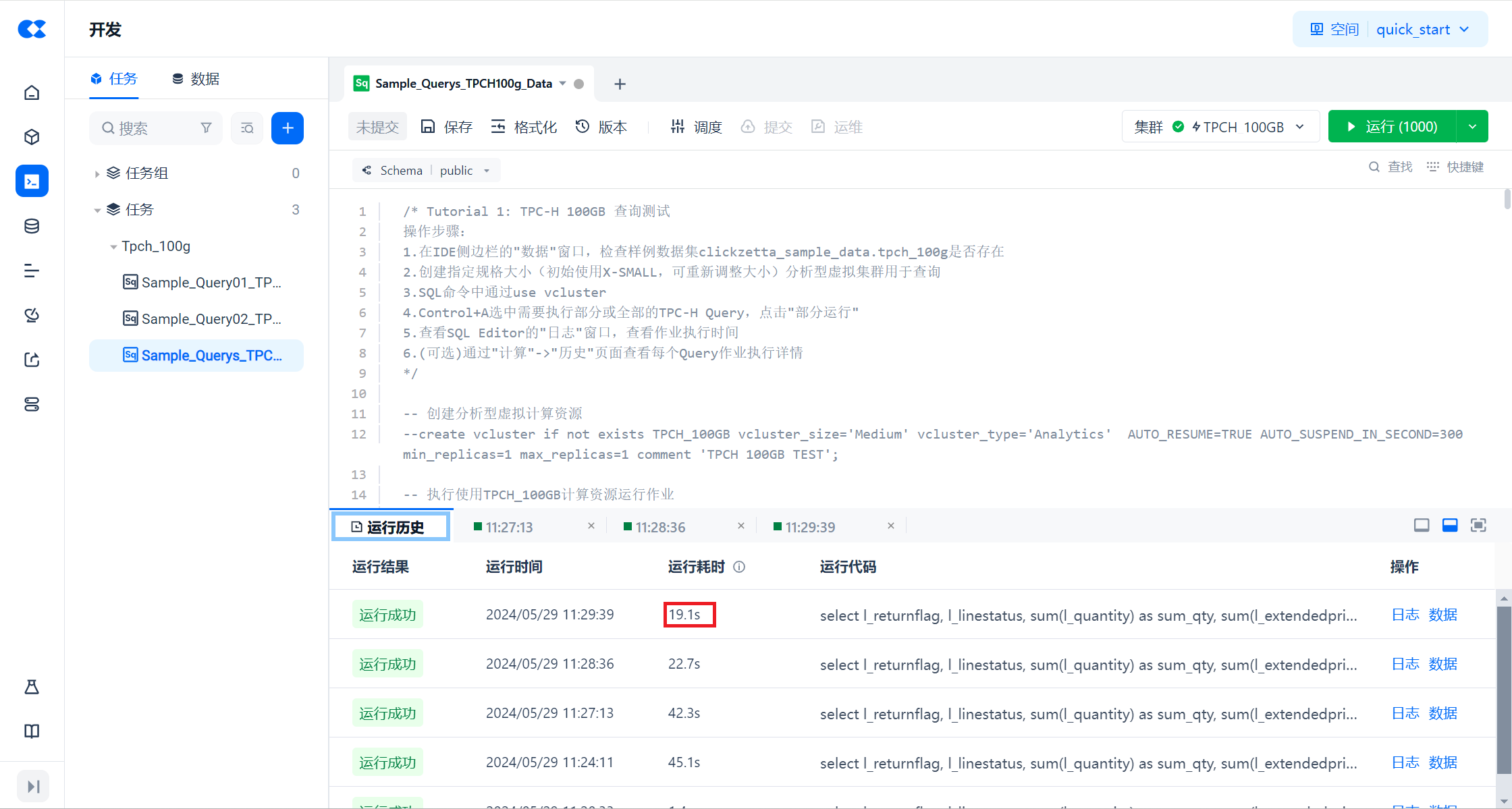

After the new computing nodes are fully cached, the performance will continue to improve.

By observing the running time of the job, it can be seen that with the same data scale and query tasks, the overall running time of the task is greatly reduced by expanding the size of the computing cluster. After two executions, as the data is cached, query performance can be improved.