A Comprehensive Guide to Importing Data into Singdata Lakehouse

Data Ingestion: Loading Files from the Web into the Lakehouse via Singdata Lakehouse Studio's Built-in Python Node

Overview

Singdata Lakehouse Studio comes with a built-in Python node that allows you to develop and run Python code.

Use Case

This approach suits scenarios where files must be processed with third-party Python libraries during data ingestion. The example in this guide calls the Python library for Alibaba Cloud Object Storage Service (OSS).

Implementation Steps

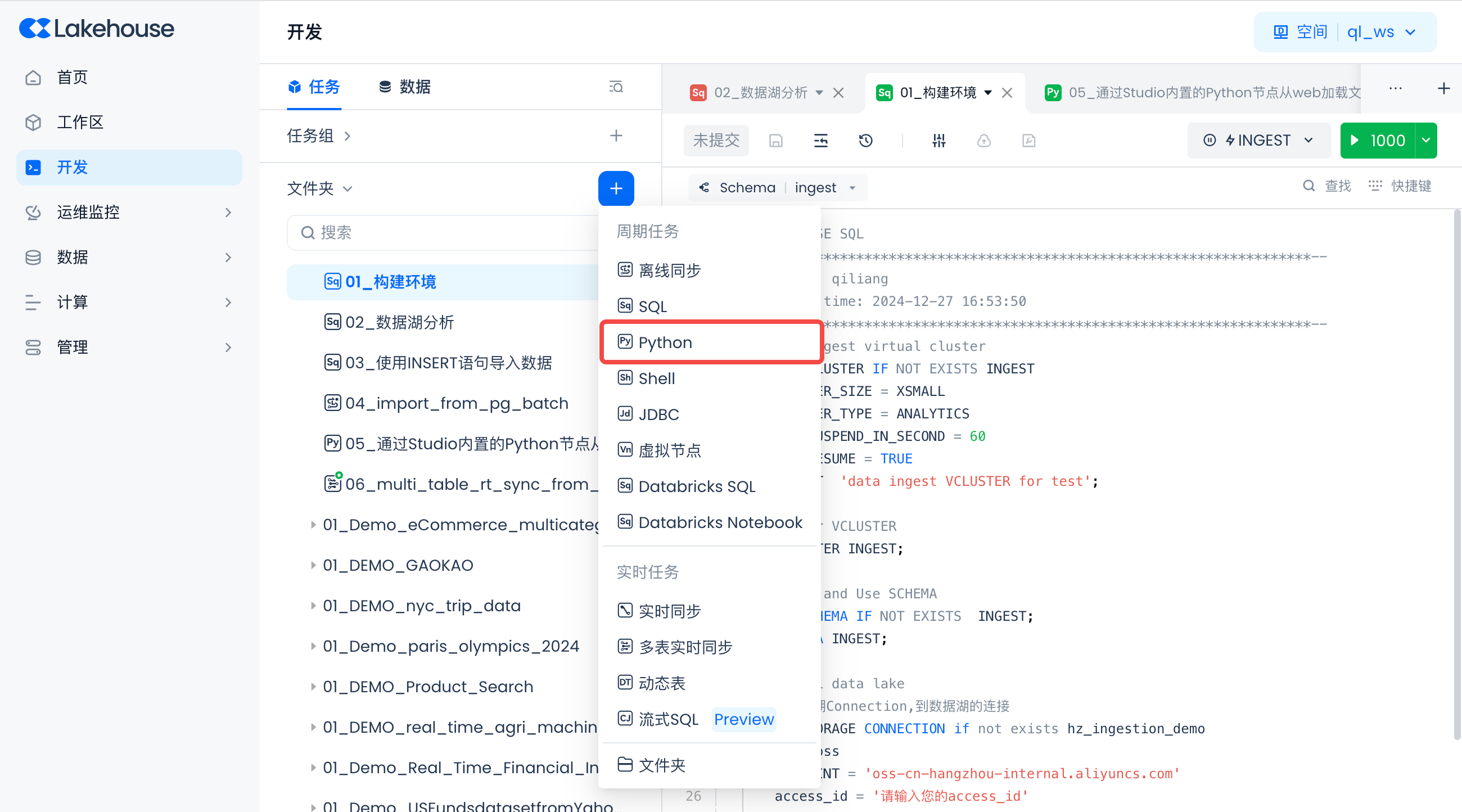

Create a New Python Task

Navigate to Development -> Tasks, click "+", and create a new Python task.

Task Name: 05_Loading Files from the Web into the Lakehouse via Studio's Built-in Python Node.

Develop Python Task Code

Paste the following code into the code editor of the newly created Python task:

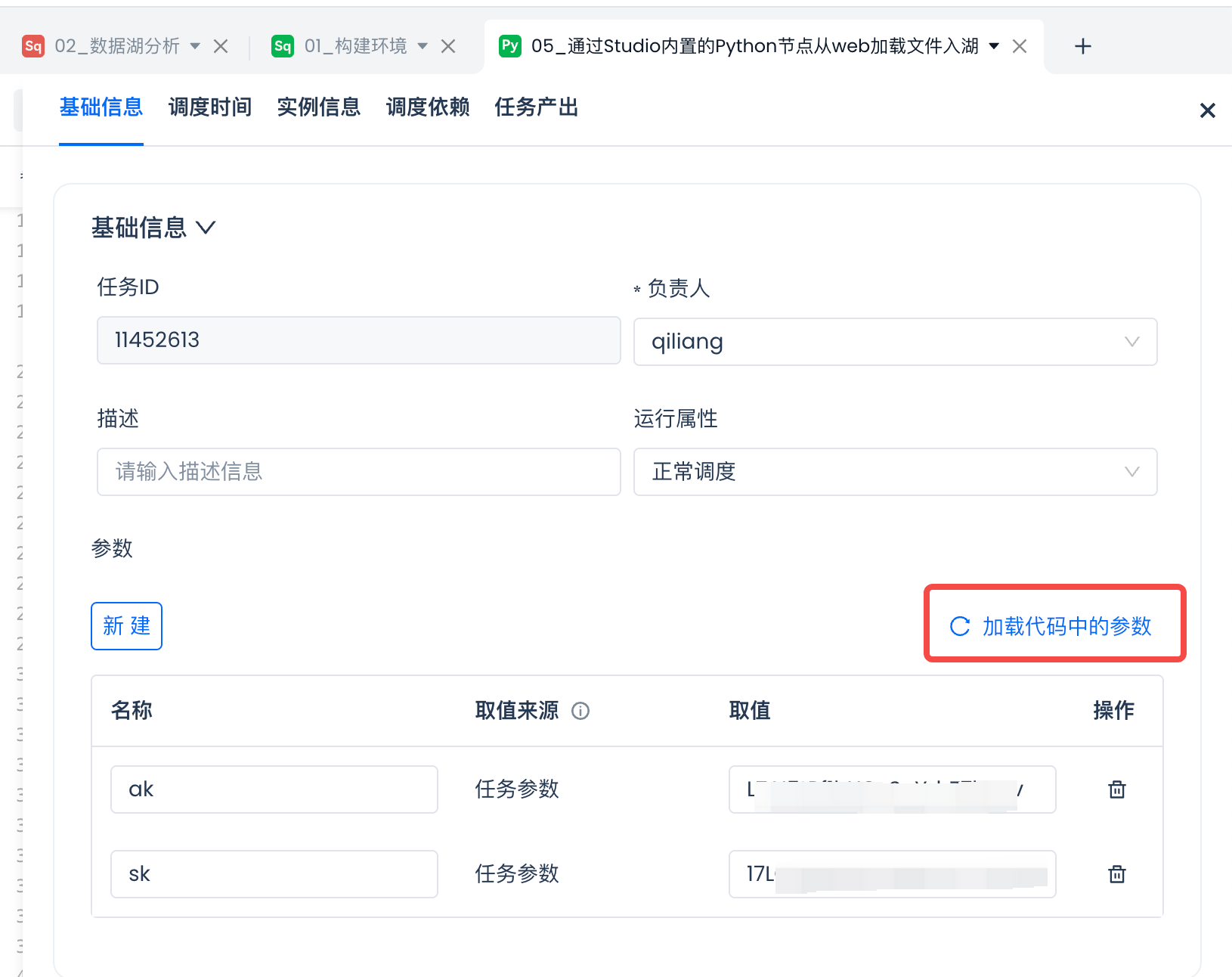
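The task code is shown only in the screenshot above. As a rough guide, a task of this kind might look like the sketch below. It assumes the `oss2` package (the Alibaba Cloud OSS Python SDK) is available in the Python node, and that Studio replaces the `${ak}` and `${sk}` placeholders before the code runs. The endpoint, bucket name, source URL, object key, and all function names are hypothetical placeholders and not values from this guide.

```python
import urllib.request

# Placeholders taken from this guide; Studio fills them from the task parameters.
ACCESS_KEY_ID = '${ak}'
ACCESS_KEY_SECRET = '${sk}'

# Hypothetical values for illustration only; replace them with your own.
ENDPOINT = 'https://oss-cn-hangzhou.aliyuncs.com'
BUCKET_NAME = 'my-lakehouse-bucket'
SOURCE_URL = 'https://example.com/data/sample.csv'
OBJECT_KEY = 'web_ingest/sample.csv'


def fetch_bytes(url, timeout=30):
    """Download the file at `url` and return its content as bytes."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def make_bucket(access_key_id, access_key_secret, endpoint, bucket_name):
    """Build an oss2 Bucket client (assumes the oss2 package is installed)."""
    import oss2  # imported here so the rest of the script loads without it
    auth = oss2.Auth(access_key_id, access_key_secret)
    return oss2.Bucket(auth, endpoint, bucket_name)


def load_url_to_oss(url, bucket, key, fetch=fetch_bytes):
    """Fetch `url`, upload the bytes to `bucket` under `key`, return the size."""
    data = fetch(url)
    bucket.put_object(key, data)
    return len(data)


def main():
    bucket = make_bucket(ACCESS_KEY_ID, ACCESS_KEY_SECRET, ENDPOINT, BUCKET_NAME)
    size = load_url_to_oss(SOURCE_URL, bucket, OBJECT_KEY)
    print(f'Uploaded {size} bytes to oss://{BUCKET_NAME}/{OBJECT_KEY}')


# Run only when the placeholders were replaced with real values.
if ACCESS_KEY_ID.startswith('${'):
    print('Parameters ak and sk are not set; skipping upload.')
else:
    main()
```

Keeping the download and the upload in separate functions makes it possible to try `load_url_to_oss` with a stand-in bucket object before pointing it at a real OSS bucket.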

Configure Parameters for the Task

There are two parameters:

ACCESS_KEY_ID = '${ak}'

ACCESS_KEY_SECRET = '${sk}'

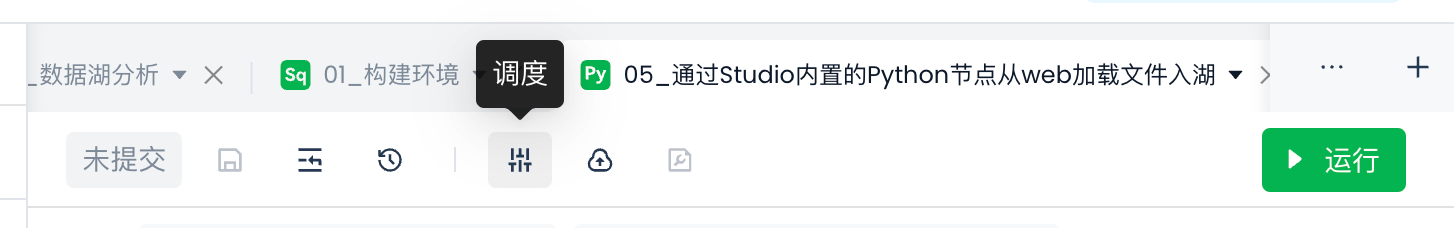

Open the schedule configuration to set default values for the parameters:

Click "Load Parameters from Code", then enter a value for each parameter:

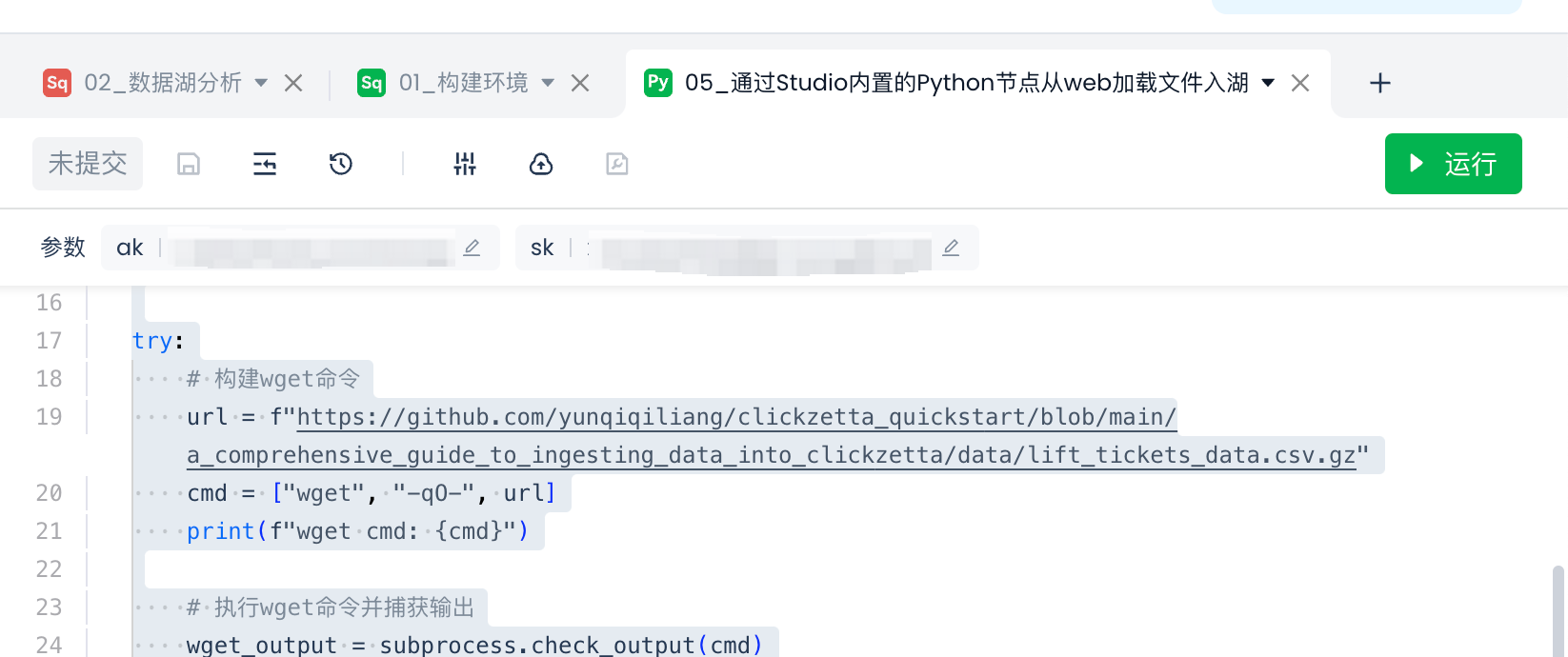
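To make the effect of the parameter values concrete, the sketch below mimics placeholder substitution with Python's standard `string.Template`, which uses the same `${name}` syntax. This is only an analogy: how Studio performs the substitution internally is not described in this guide, and the parameter values shown are hypothetical.

```python
from string import Template

# The two parameter lines from the task code, with their ${...} placeholders.
code_template = Template("ACCESS_KEY_ID = '${ak}'\nACCESS_KEY_SECRET = '${sk}'")

# Hypothetical parameter values; never hard-code real credentials.
params = {'ak': 'my-access-key-id', 'sk': 'my-access-key-secret'}

# Replace each placeholder with the value entered for that parameter.
rendered = code_template.substitute(params)
print(rendered)
```

Keeping the credentials in task parameters instead of in the code itself means the script can be shared or versioned without exposing the keys.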

Run the Test

Click "Run" to execute the Python code.

Check the Upload Results

Log in to the Alibaba Cloud OSS console and confirm that the uploaded files appear in the target bucket.
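The same check can also be done from Python. The sketch below is a minimal example that assumes an `oss2.Bucket` client, whose `object_exists` method reports whether a key is present; the helper name `missing_objects` and the object key in the commented usage are hypothetical.

```python
def missing_objects(bucket, keys):
    """Return the keys from `keys` that are not present in `bucket`.

    `bucket` is expected to be an oss2.Bucket, or any object that offers
    an `object_exists(key)` method.
    """
    return [key for key in keys if not bucket.object_exists(key)]


# Example usage against a real bucket (requires oss2 and valid credentials):
# import oss2
# bucket = oss2.Bucket(oss2.Auth(ak, sk), endpoint, bucket_name)
# print(missing_objects(bucket, ['web_ingest/sample.csv']))
```

An empty list means every expected file reached the bucket.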

Next Steps

- Schedule Python tasks to achieve periodic data lake ingestion

- Analyze the files loaded into the data lake using SQL

- Form a complete ELT workflow with other tasks