(SaaS)²: Singdata Lakehouse + Zilliz, Make Data Ready for BI and AI

Solution Overview

-

(SaaS)²: Singdata Lakehouse and Zilliz both provide SaaS services based on mainstream cloud services. By combining SaaS services, you can maximize the benefits of fully managed and pay-as-you-go SaaS models.

-

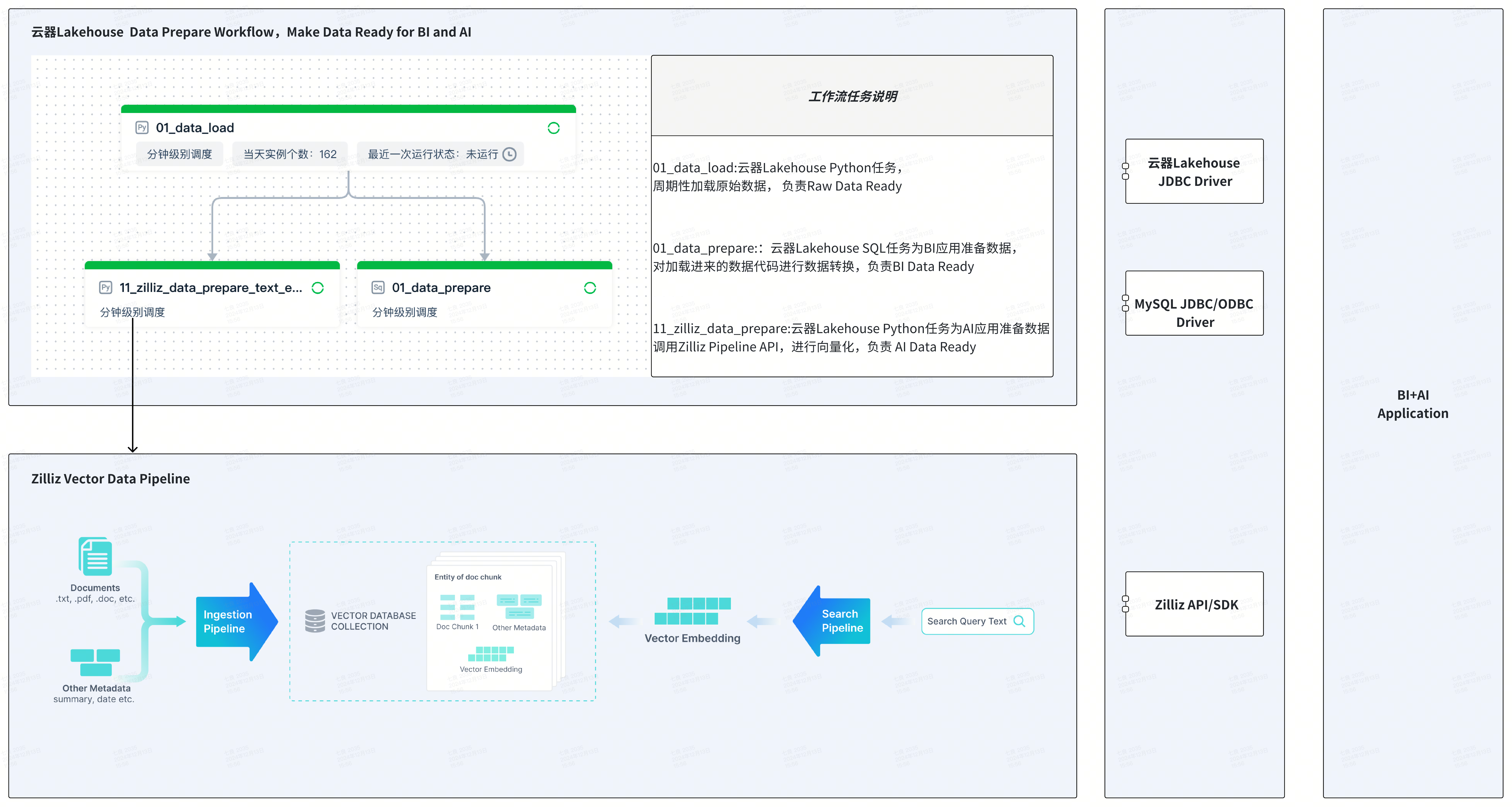

Make Data Ready for BI and AI: Singdata Lakehouse's data warehouse focuses on storing, processing, and analyzing scalar data for BI applications, while Zilliz vector database focuses on enhanced data analysis for AI. By integrating Singdata Lakehouse and Zilliz vector database, a complete production-level BI+AI solution is provided to address the asymmetry between BI and AI:

- Asymmetry in data freshness between BI and AI: Zilliz Vector Data Pipeline provides batch data embedding services, reducing embedding time by 10x+ compared to non-batch, significantly improving the freshness of AI data.

- Asymmetry in data scale between BI and AI: Zilliz can still provide stable and fast response and concurrency at the scale of tens of billions of vector data, making vector data no longer a supplementary "niche" data, achieving parity in scale between BI data and AI data.

-

Business Upgrade: Upgrade traditional data analysis to enhanced analysis with the simplest solution, achieving BI+AI integration.

Solution Components

- Singdata Lakehouse Platform: Provides management of data lakes and data warehouses, including data management, data integration, task development, task execution, workflow orchestration, task monitoring, and maintenance.

- Singdata Zettapark: Implements csv file loading through Python+Dataframe programming.

- Zilliz Vector Database: A high-performance, cost-effective vector database.

- Zilliz Data Pipeline: Vectorizes and retrieves data such as text, images, and files, supports Chinese and English embedding models, rerank models, and provides an extremely simplified and developer-friendly vector processing method.

Application Scenario Example: Enhancing Text Search with Semantic Retrieval

- Scalar Retrieval: Singdata Lakehouse provides text-based like fuzzy matching and keyword search based on text inverted index.

- Vector Retrieval: Zilliz provides semantic retrieval based on vector data and result reranking with rerank models.

Combining scalar retrieval and vector retrieval improves retrieval performance and accuracy, suitable for product search, product recommendation, and other scenarios.

Task 1: Load Raw Data into Singdata Lakehouse

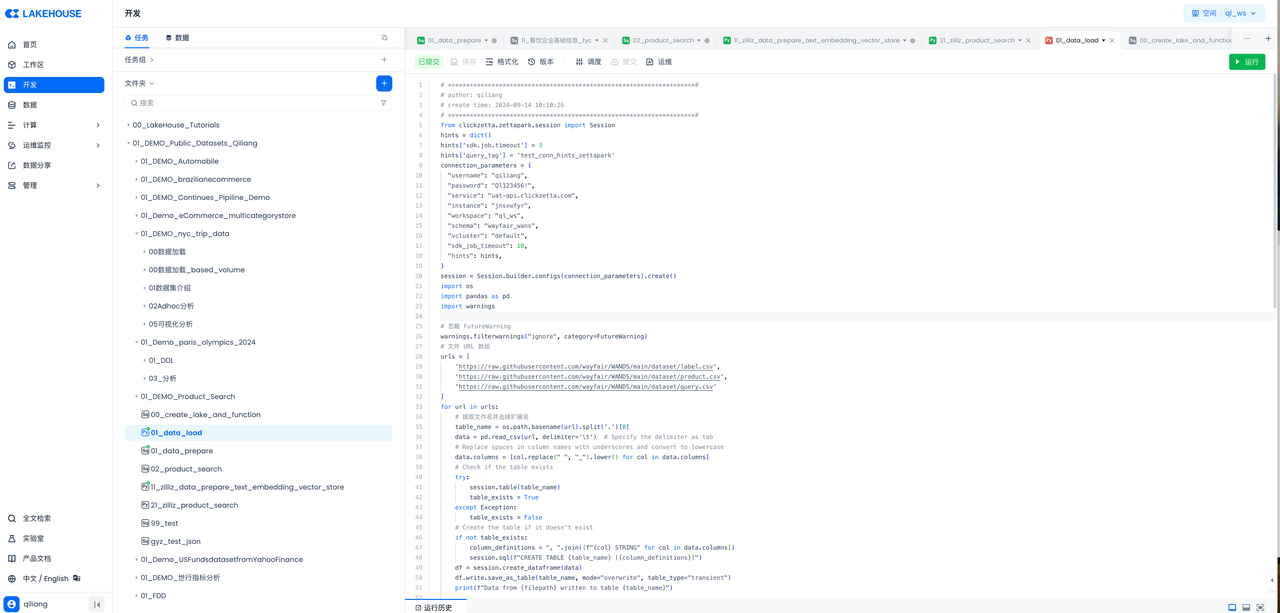

Singdata Lakehouse provides multiple ways to load csv data, including offline data synchronization via WEB, loading csv through data lake, etc. This article uses Singdata Zettapark to implement data loading, with Python code running in Singdata Lakehouse's Python task node.

The code is as follows:

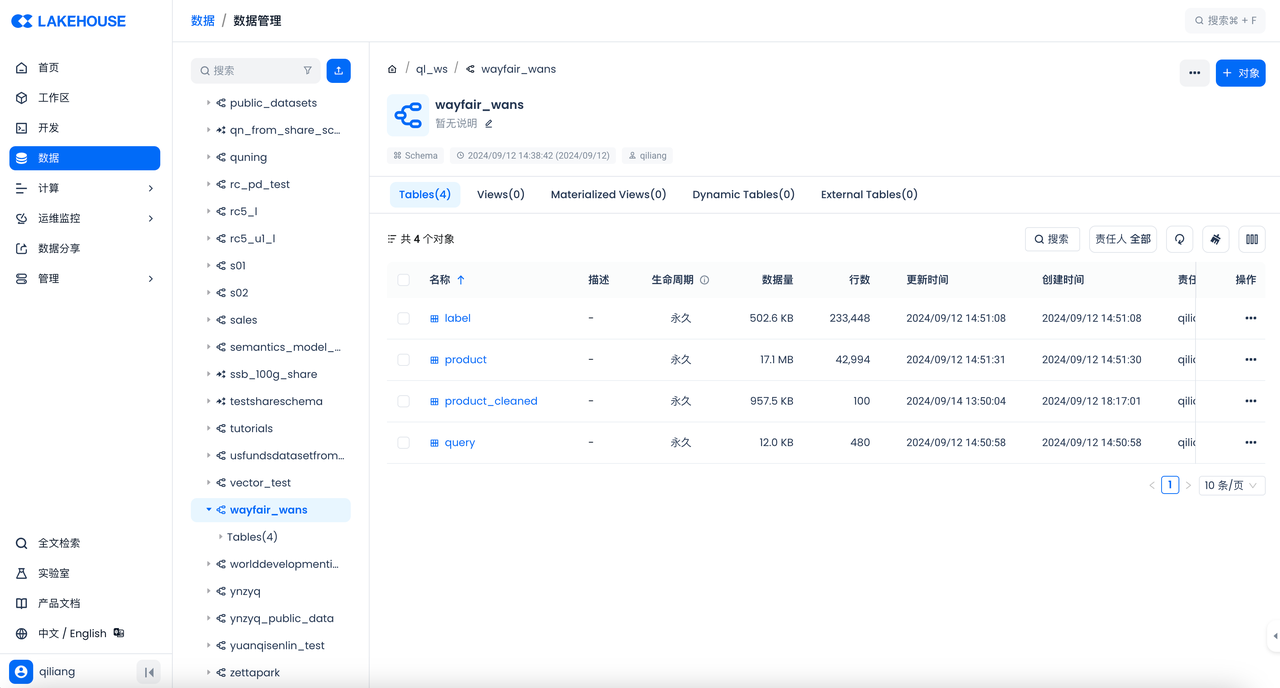

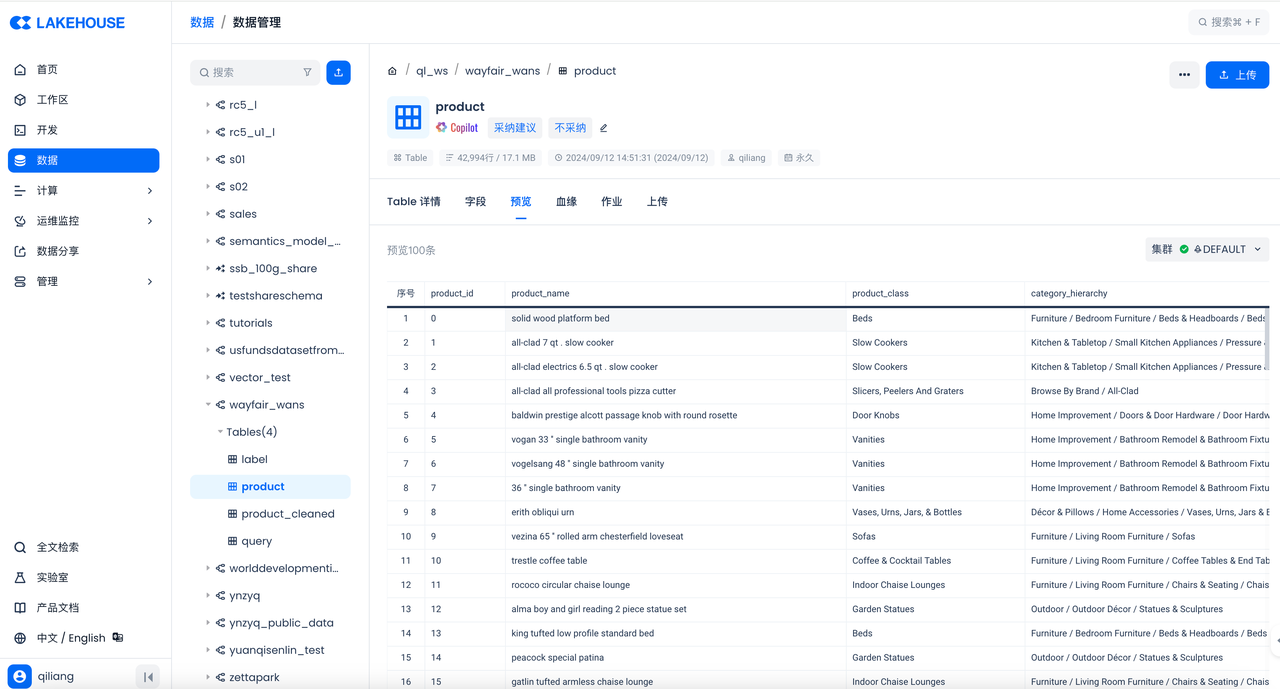

Then check the results in the Singdata Lakehouse console:

Task 2: Develop SQL tasks to prepare data for BI

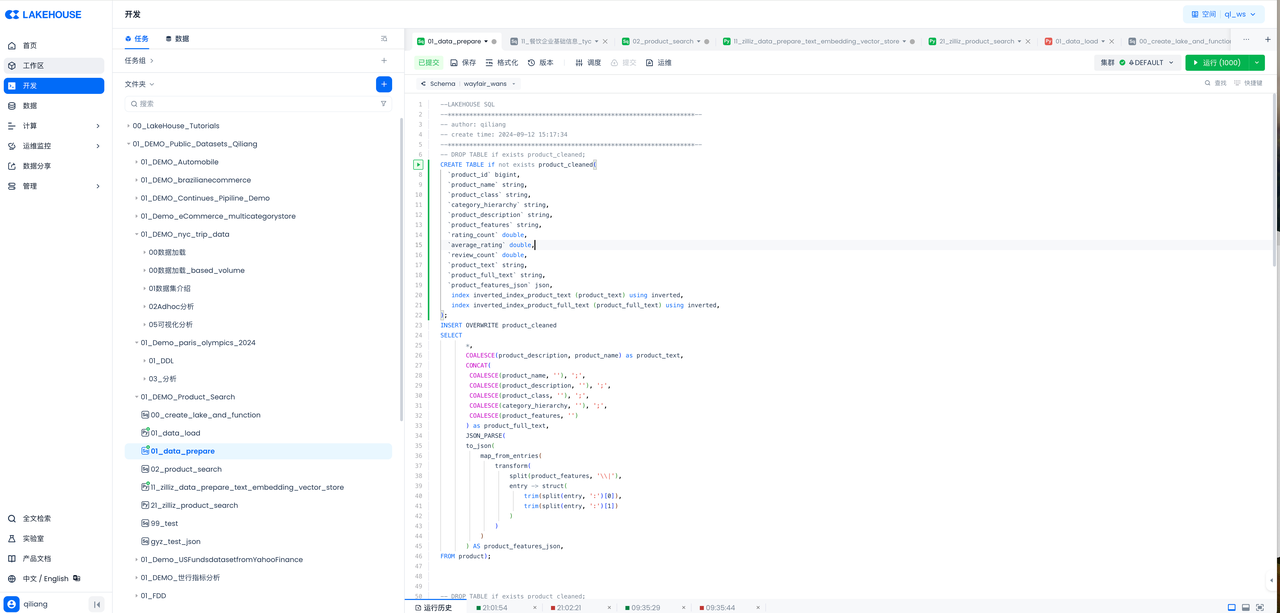

The code is as follows:

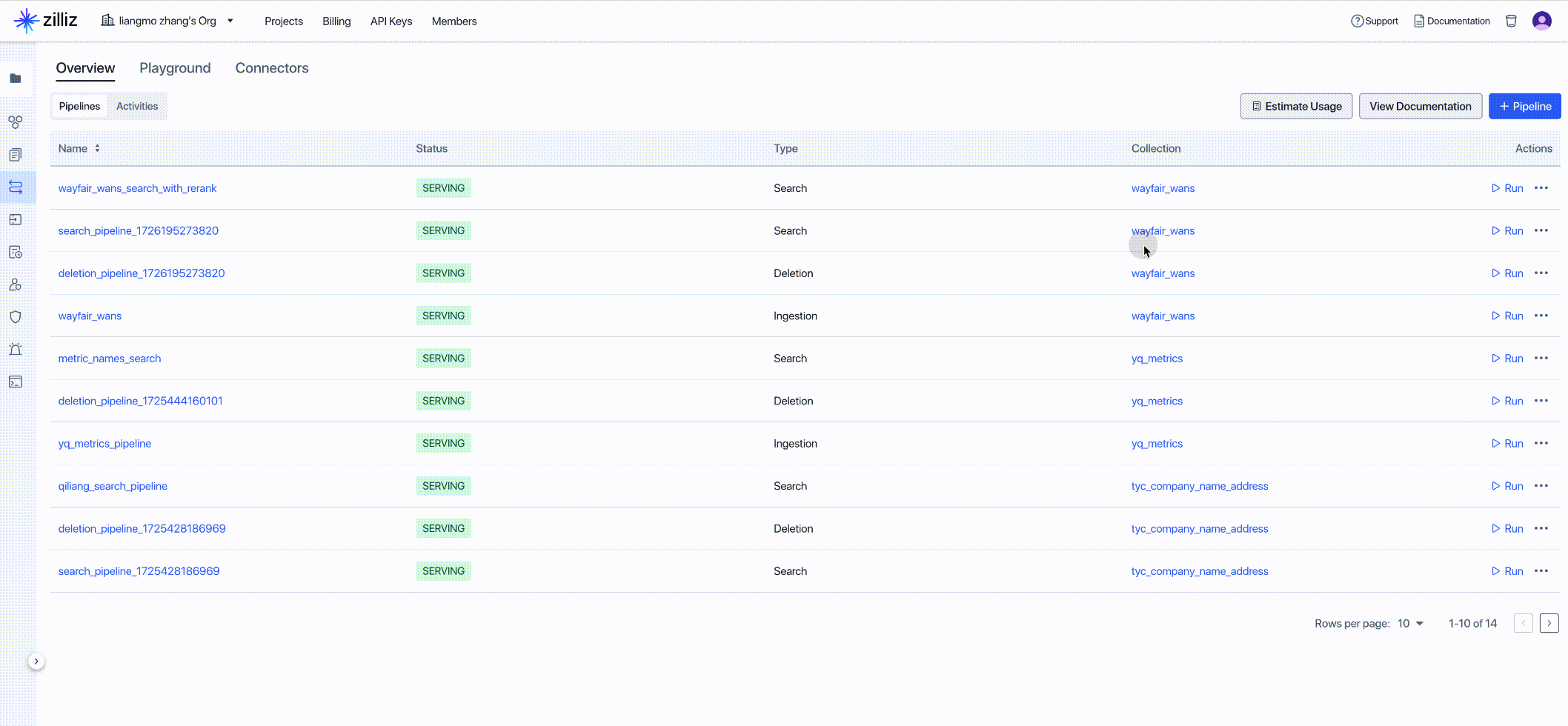

Task Three: Create Zillize Data Ingestion Pipeline

Zilliz Cloud Pipelines can simplify the process of converting unstructured data into embedding vectors and interfacing with the Zilliz Cloud vector database to store vector data, achieving efficient vector indexing and retrieval. Developers often face complex unstructured data conversion and retrieval issues when dealing with unstructured data, which can slow down development speed. Zilliz Cloud Pipelines addresses this challenge by providing an integrated solution that helps developers easily convert unstructured data into searchable vectors and interface with the Zilliz Cloud vector database to ensure high-quality vector retrieval.

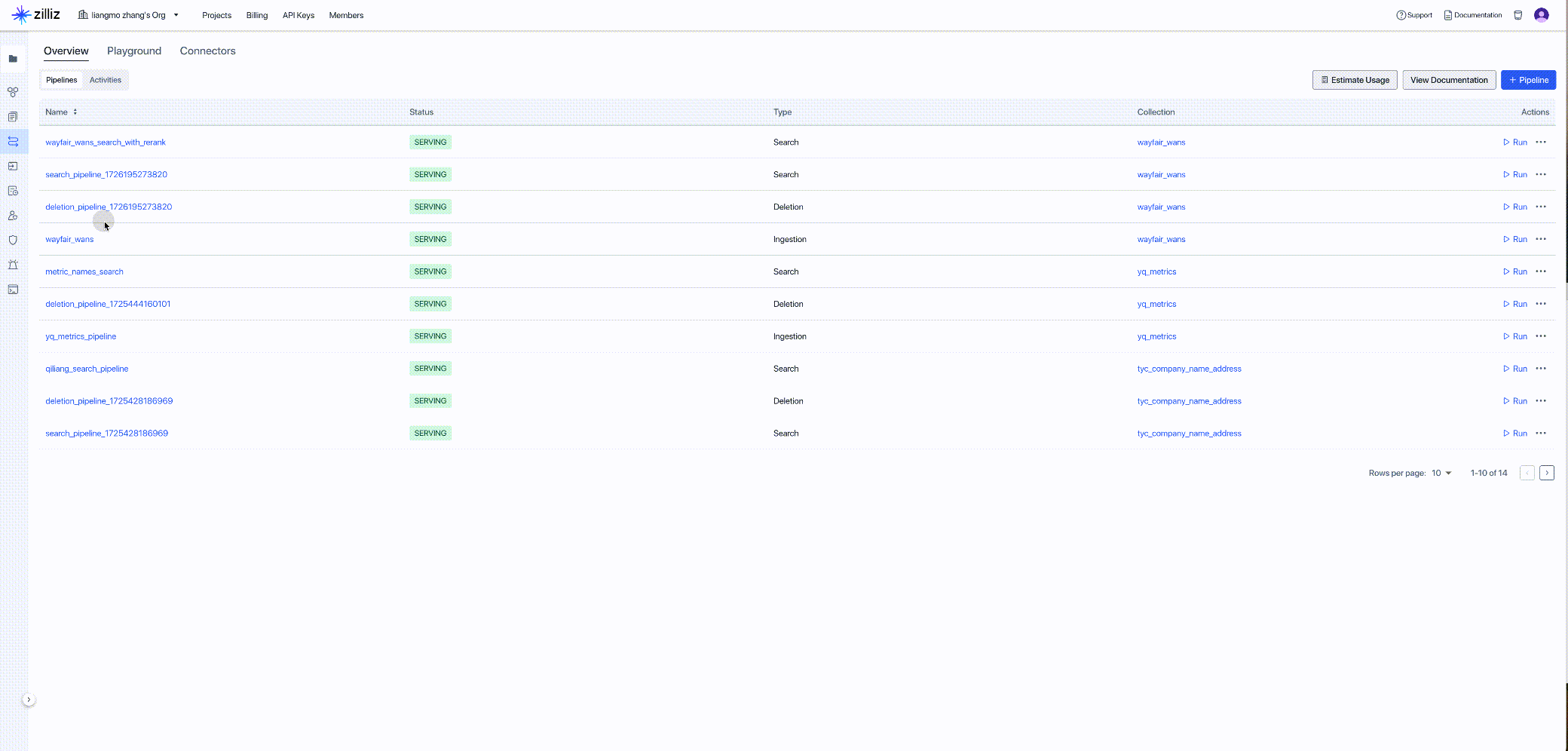

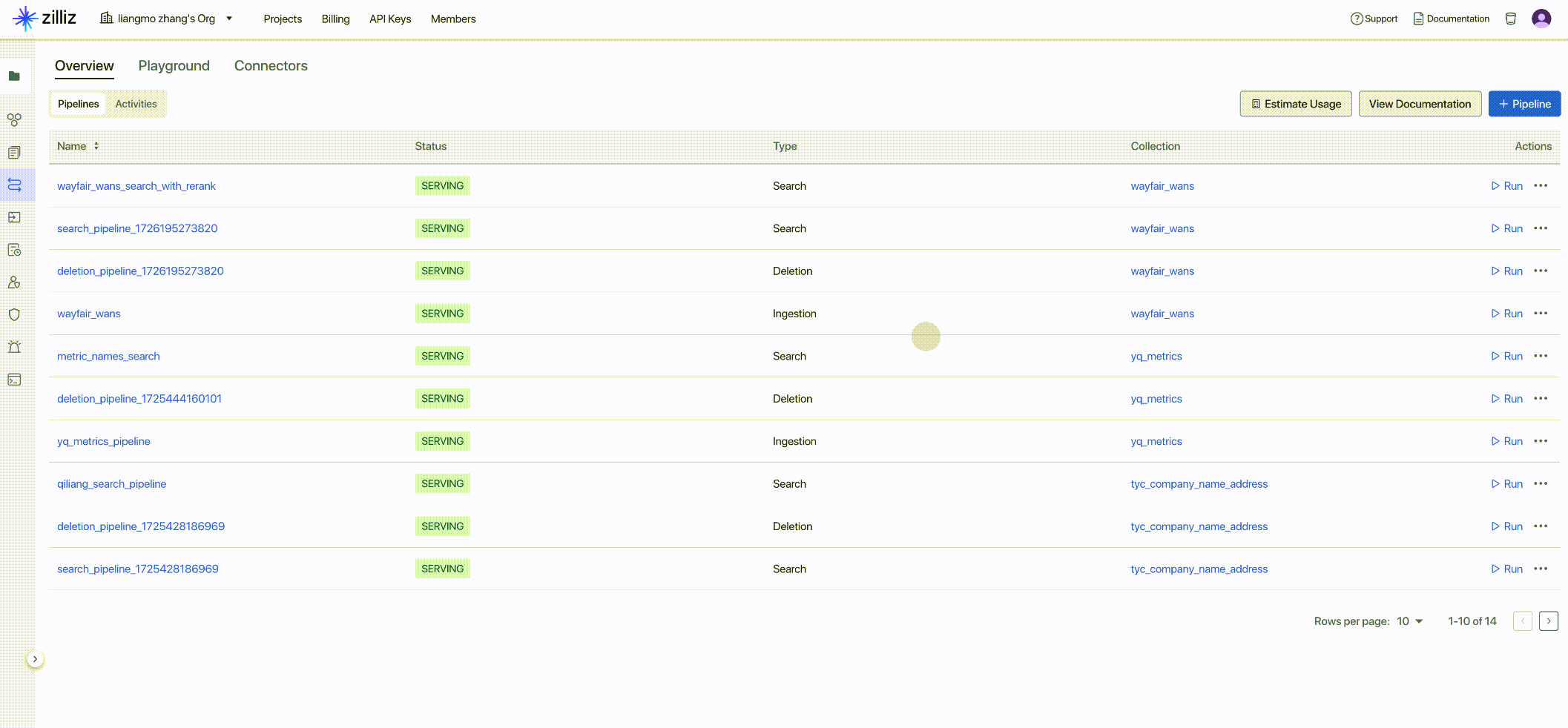

Obtain the client code of the newly created Pipeline as the input for the next step:

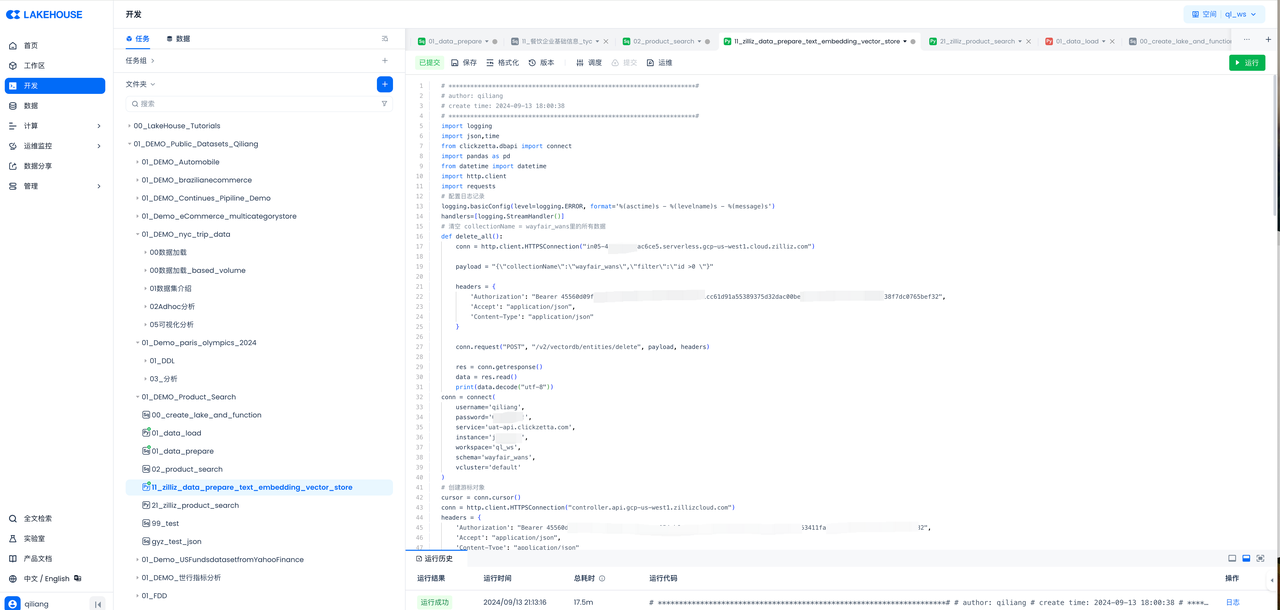

Task Four: Develop a Python Task to Call the Zilliz Data Ingestion Pipeline API in the Workflow, Preparing Data for AI-Enhanced Analysis and Automating Vector Data ETL

Send the text information of the table named product in Singdata Lakehouse to Zilliz, first perform embedding on the text data, and then store it as vectors.

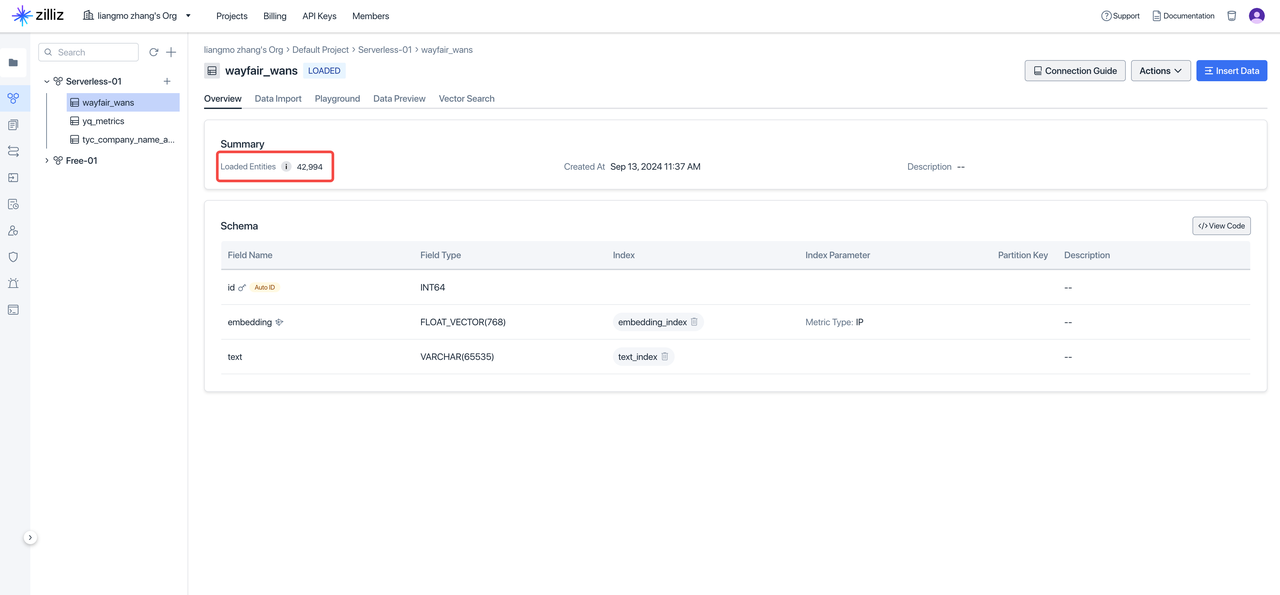

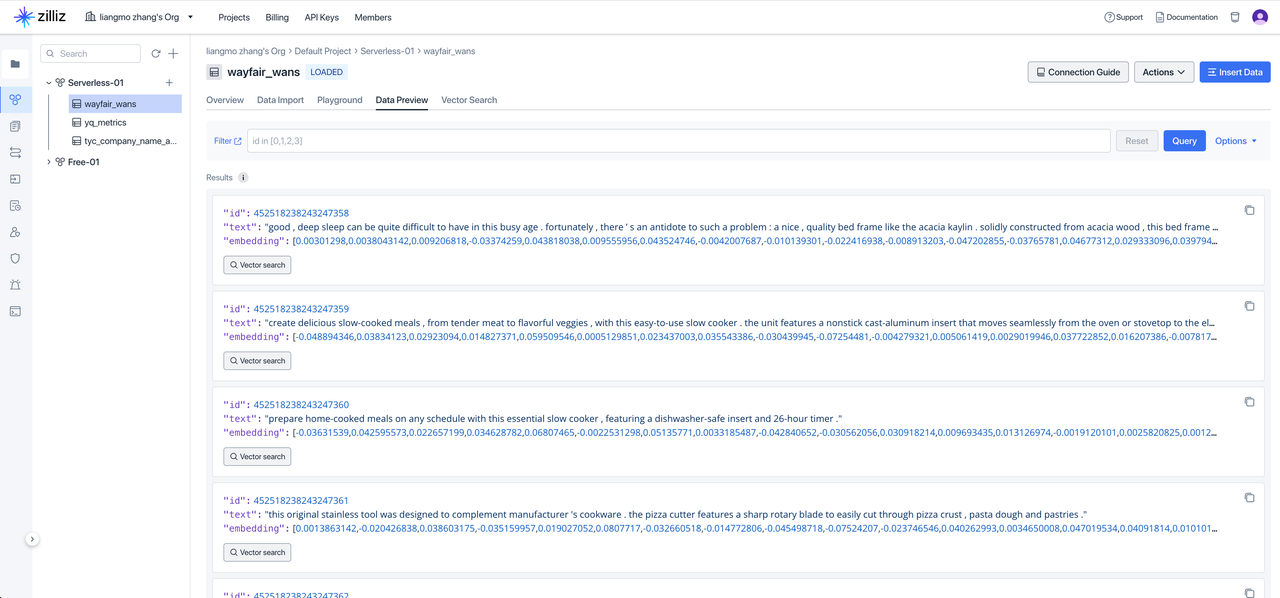

After executing the above code in Singdata Lakehouse, go to the Zilliz console to check and verify the vectorization results:

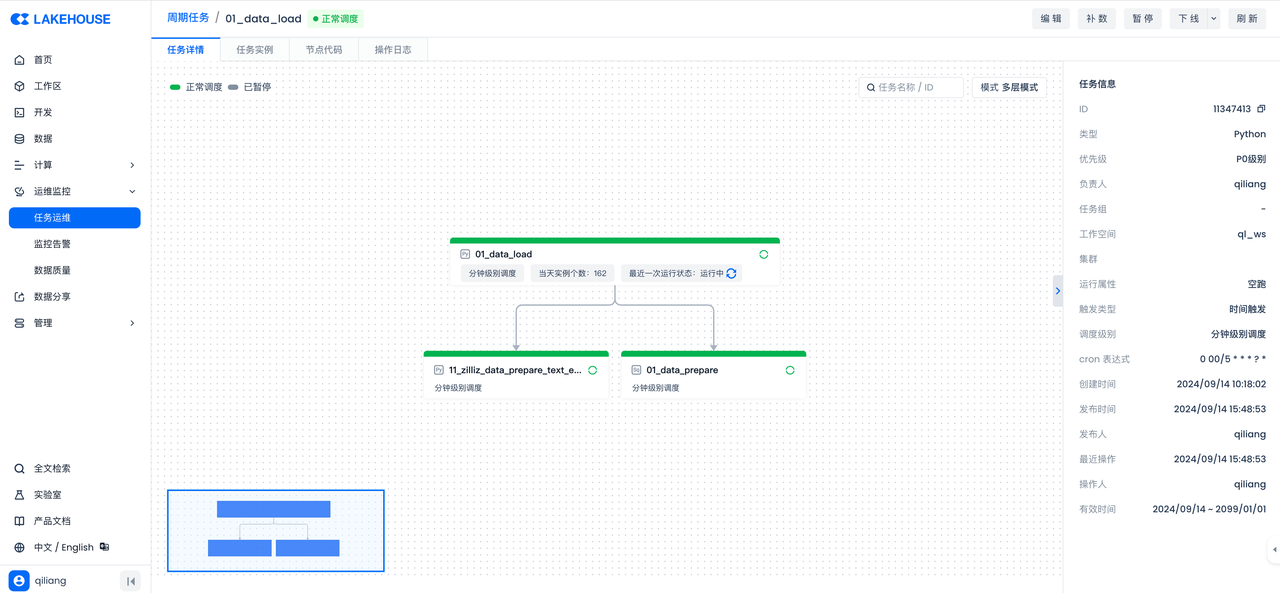

Task Five: Define a Complete Data Flow Through Singdata Lakehouse Workflow Orchestration

Set scheduling properties for the above tasks and submit them to build a data workflow:

Task Six: Create Zilliz Data Search Pipeline

Using the Zillize Data Search Pipeline can quickly and efficiently convert query text into embedding vectors, returning the most relevant top-K document blocks (including text and metadata), effectively gaining data insights from search results.